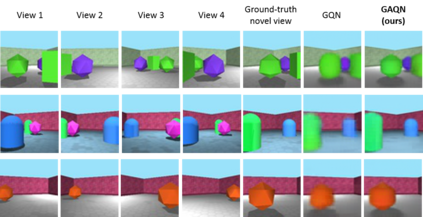

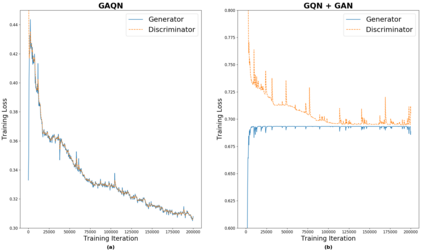

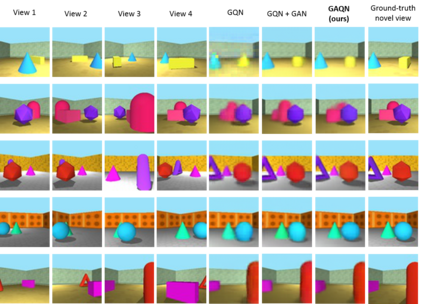

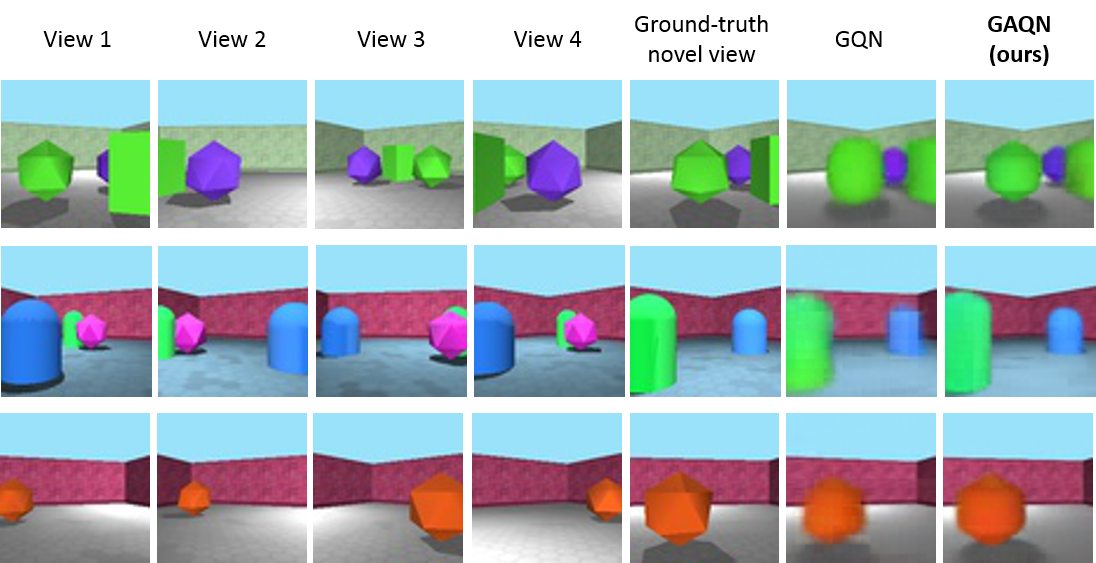

Predicting a novel view of a scene from an arbitrary number of observations is challenging for computers and humans alike. This paper introduces the Generative Adversarial Query Network (GAQN), a general learning framework for novel view synthesis that combines the Generative Query Network (GQN) with Generative Adversarial Networks (GANs). The conventional GQN encodes input views into a latent representation, which a recurrent variational decoder then uses to generate a new view. The proposed GAQN builds on this work with two additions. First, we extend the GQN architecture with an adversarial loss function to improve visual quality and convergence speed. Second, we introduce a feature-matching loss function to stabilize the training procedure. The experiments demonstrate that GAQN produces high-quality results and converges faster than the conventional approach.
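
The way the two added loss terms might combine with the original GQN objective can be sketched as follows. This is a minimal illustration only, not the authors' implementation: the abstract does not give the exact loss formulas or weights, so the non-saturating generator loss, the L1 feature-matching distance, and the names `gaqn_generator_loss`, `lambda_adv`, and `lambda_fm` are all assumptions.

```python
import math


def adversarial_generator_loss(d_fake_probs):
    """Non-saturating GAN generator loss: -mean(log D(x_fake)).

    d_fake_probs: discriminator probabilities (in (0, 1]) for generated views.
    The choice of this particular GAN loss is an assumption.
    """
    eps = 1e-12  # avoids log(0)
    return -sum(math.log(p + eps) for p in d_fake_probs) / len(d_fake_probs)


def feature_matching_loss(real_feats, fake_feats):
    """Mean absolute difference between discriminator features of real and
    generated views, averaged over layers.

    real_feats / fake_feats: one flat list of floats per discriminator layer.
    Using an L1 distance here is an assumption.
    """
    total = 0.0
    for real_layer, fake_layer in zip(real_feats, fake_feats):
        diffs = [abs(r - f) for r, f in zip(real_layer, fake_layer)]
        total += sum(diffs) / len(diffs)
    return total / len(real_feats)


def gaqn_generator_loss(gqn_loss, d_fake_probs, real_feats, fake_feats,
                        lambda_adv=1.0, lambda_fm=1.0):
    """Hypothetical combined objective: the original GQN (variational) loss
    plus weighted adversarial and feature-matching terms."""
    adv = adversarial_generator_loss(d_fake_probs)
    fm = feature_matching_loss(real_feats, fake_feats)
    return gqn_loss + lambda_adv * adv + lambda_fm * fm
```

In this sketch, `gqn_loss` stands in for whatever scalar the GQN's recurrent variational decoder already minimizes. The weights `lambda_adv` and `lambda_fm` are hypothetical hyperparameters that would need tuning.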
