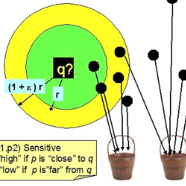

We present SLASH (Sketched LocAlity Sensitive Hashing), an MPI (Message Passing Interface) based distributed system for approximate similarity search over terabyte-scale datasets. SLASH provides a multi-node implementation of the popular LSH (locality sensitive hashing) algorithm, which is usually implemented on a single machine. We show how to augment the LSH algorithm with heavy-hitters sketches to provably solve the (high) similarity search problem without a single distance computation. Overall, we show mathematically that, under realistic data assumptions, we can identify the near-neighbor of a given query $q$ using only a sub-linear ($\ll O(n)$) number of simple sketch aggregation operations. To make such a system practical, we offer a novel design and sketching solution that reduces inter-machine communication overheads exponentially. In a direct comparison on comparable hardware, SLASH is more than 10,000x faster than the popular LSH package in PySpark, a widely adopted distributed implementation of the LSH algorithm for large datasets that is deployed in commercial platforms. Finally, we show how our system scales to the terabyte-scale Criteo dataset with more than 4 billion samples. SLASH can index this 2.3 terabyte dataset over 20 nodes in under an hour, with query times of a fraction of a millisecond. To the best of our knowledge, there is no open-source system that can index and perform similarity search on Criteo with a commodity cluster.
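The abstract does not describe SLASH's data layout. The code below is therefore only a minimal, single-process illustration of the general idea under our own assumptions, and it is not the paper's implementation. It assumes SimHash (signed random projections) as the LSH family, a Count-Min sketch as the heavy-hitters structure, and two in-memory "nodes" that share hash functions and stand in for MPI ranks. The names `SimHashLSH`, `CountMinSketch`, `collide_into` and `heavy_hitter` are hypothetical. The reported near-neighbor is the point that collides with $q$ in the most hash tables. It is found by counting rather than by computing distances, and per-node sketches combine by elementwise addition.

```python
# Hypothetical illustration only: SimHash + Count-Min sketch, NOT SLASH's actual design.
import random
from collections import defaultdict


class CountMinSketch:
    """Count-Min sketch: `depth` rows of `width` counters; estimates never undercount."""

    def __init__(self, width, depth, seed=0):
        self.width, self.depth = width, depth
        rng = random.Random(seed)
        # One random salt per row; hashing (salt, item) gives a per-row column index.
        self.salts = [rng.getrandbits(32) for _ in range(depth)]
        self.table = [[0] * width for _ in range(depth)]

    def _cols(self, item):
        return [hash((salt, item)) % self.width for salt in self.salts]

    def add(self, item, count=1):
        for row, col in enumerate(self._cols(item)):
            self.table[row][col] += count

    def estimate(self, item):
        return min(self.table[row][col] for row, col in enumerate(self._cols(item)))

    def merge(self, other):
        # Sketches sharing width, depth and salts combine by elementwise addition,
        # so a node can ship a fixed-size sketch instead of raw collision lists.
        for row in range(self.depth):
            for col in range(self.width):
                self.table[row][col] += other.table[row][col]


class SimHashLSH:
    """num_tables hash tables, each keyed by k sign bits of random projections."""

    def __init__(self, dim, k, num_tables, seed=0):
        rng = random.Random(seed)
        self.planes = [[[rng.gauss(0, 1) for _ in range(dim)] for _ in range(k)]
                       for _ in range(num_tables)]
        self.tables = [defaultdict(list) for _ in range(num_tables)]

    def _key(self, t, x):
        return tuple(sum(p * v for p, v in zip(plane, x)) >= 0 for plane in self.planes[t])

    def insert(self, idx, x):
        for t, table in enumerate(self.tables):
            table[self._key(t, x)].append(idx)

    def collide_into(self, q, cms):
        """Add 1 to the sketch per (table, id) collision with q; no distances computed."""
        candidates = set()
        for t, table in enumerate(self.tables):
            for idx in table.get(self._key(t, q), []):
                cms.add(idx)
                candidates.add(idx)
        return candidates


def heavy_hitter(cms, candidates):
    # The id with the largest estimated collision count is reported as the near-neighbor.
    return max(candidates, key=cms.estimate, default=None)


# Demo: 200 random points, indexed once on a single node and once split across
# two simulated nodes that use the same hyperplanes (same seed).
rng = random.Random(42)
DIM, K, L = 32, 8, 20
data = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(200)]
q = [v + 0.05 * rng.gauss(0, 1) for v in data[17]]  # slightly perturbed copy of point 17

single = SimHashLSH(DIM, K, L, seed=1)
shards = [SimHashLSH(DIM, K, L, seed=1), SimHashLSH(DIM, K, L, seed=1)]
for i, x in enumerate(data):
    single.insert(i, x)
    shards[i % 2].insert(i, x)

global_cms = CountMinSketch(1024, 4, seed=7)
single_candidates = single.collide_into(q, global_cms)

merged = CountMinSketch(1024, 4, seed=7)
merged_candidates = set()
for shard in shards:
    local = CountMinSketch(1024, 4, seed=7)
    merged_candidates |= shard.collide_into(q, local)
    merged.merge(local)

print(heavy_hitter(global_cms, single_candidates), heavy_hitter(merged, merged_candidates))
```

Because every point lives on exactly one node and all nodes use the same hyperplanes and sketch salts, the merged sketch is identical to the one a single machine would build. A plain Count-Min sketch only estimates counts, so this sketch still keeps candidate ids to take the argmax. A heavy-hitters structure that also tracks its top identifiers would avoid shipping those ids between nodes.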
