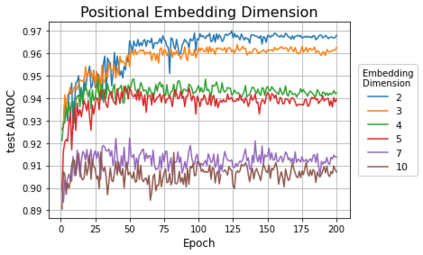

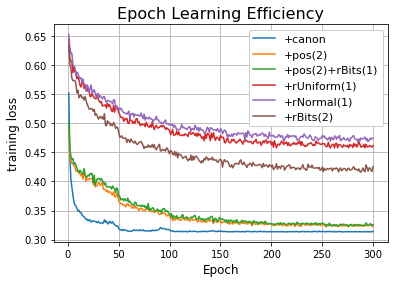

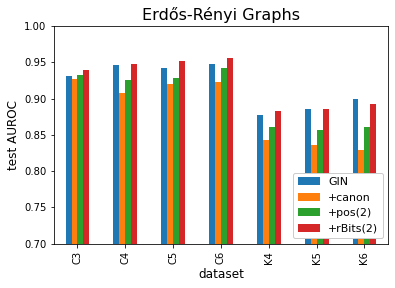

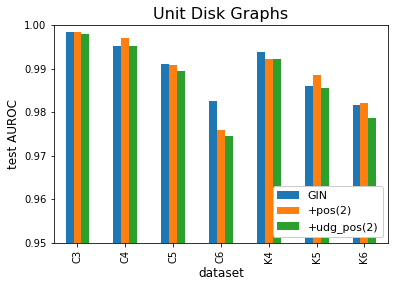

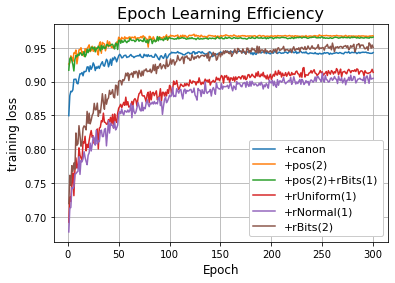

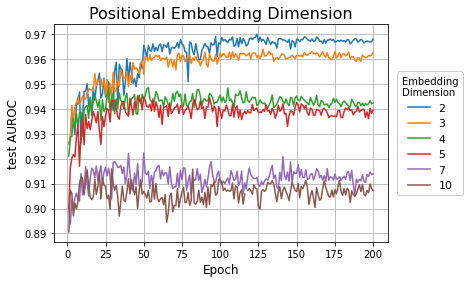

Most Graph Neural Networks (GNNs) cannot distinguish some graphs, or indeed some pairs of nodes within a graph. This makes it impossible to solve certain classification tasks. However, augmenting these models with additional node features can resolve this problem. We introduce several such augmentations, including (i) positional node embeddings, (ii) canonical node IDs, and (iii) random features. These extensions are motivated by theoretical results and corroborated by extensive testing on synthetic subgraph detection tasks. We find that positional embeddings significantly outperform the other extensions on these tasks. Moreover, positional embeddings have better sample efficiency, perform well on different graph distributions, and even outperform learning with ground truth node positions. Finally, we show that the different augmentations perform competitively on established GNN benchmarks, and advise on when to use them.
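To make the idea of feature augmentation concrete, here is a minimal sketch of two of the three augmentations named above: random features and node IDs. It is not the authors' implementation. The function names, the one-hot encoding, and the use of plain index order for the IDs are all assumptions made for illustration; in particular, index order is not a canonical ordering, which a real canonical-ID scheme would need to compute. Positional embeddings are left out because the abstract does not say how they are built.

```python
import random


def add_random_features(features, dim=2, seed=0):
    """Append `dim` random values in [0, 1) to each node's feature vector.

    Hypothetical helper: the dimension and the uniform distribution are
    illustrative choices, not taken from the source.
    """
    rng = random.Random(seed)
    return [row + [rng.random() for _ in range(dim)] for row in features]


def add_node_ids(features):
    """Append a one-hot node ID to each node's feature vector.

    Assumption: IDs follow plain index order, which is NOT canonical.
    """
    n = len(features)
    return [
        row + [1.0 if j == i else 0.0 for j in range(n)]
        for i, row in enumerate(features)
    ]


# Four nodes with identical input features, e.g. the nodes of an
# unlabeled 4-cycle. No node can be told apart from another by its
# input features alone.
features = [[1.0] for _ in range(4)]

with_random = add_random_features(features, dim=2)
with_ids = add_node_ids(features)
```

After augmentation every node carries a feature vector that differs from its neighbors', which is the property the abstract relies on: the model receives inputs that break the symmetry between otherwise identical nodes.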

Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem