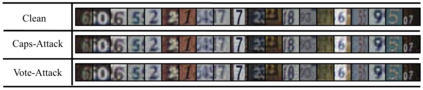

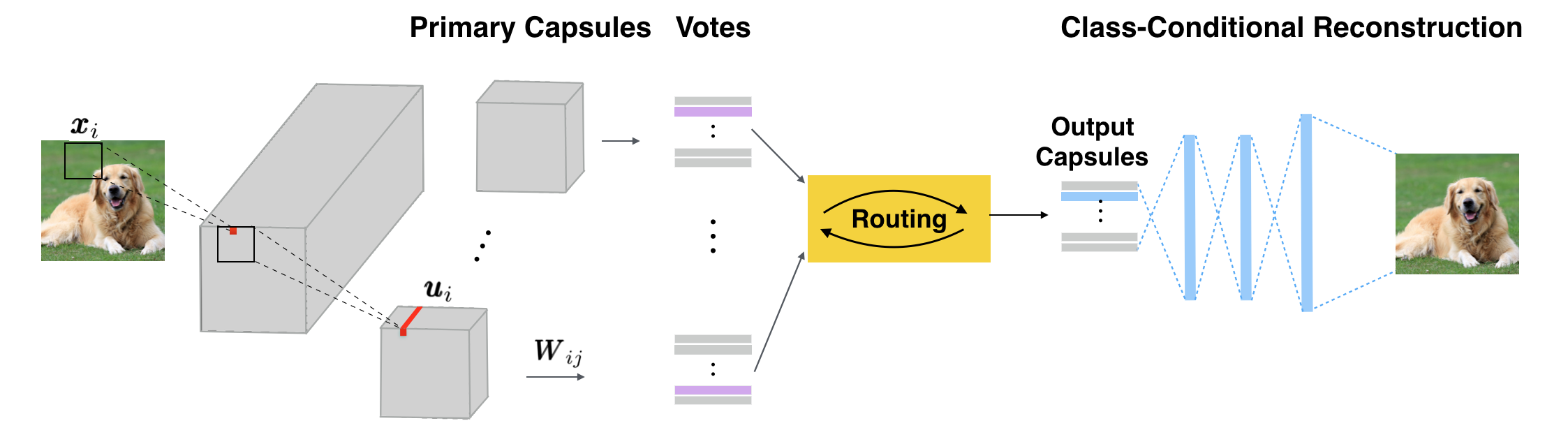

Standard Convolutional Neural Networks (CNNs) can be easily fooled by images with small, quasi-imperceptible artificial perturbations. As an alternative to CNNs, the recently proposed Capsule Networks (CapsNets) have been shown to be more robust than CNNs to white-box attacks under popular attack protocols. In addition, the class-conditional reconstruction part of CapsNets has been used to detect adversarial examples. In this work, we investigate the adversarial robustness of CapsNets, in particular how the inner workings of CapsNets change when the output capsules are attacked. Our first observation is that adversarial examples mislead CapsNets by manipulating the votes from primary capsules. Our second observation is that directly applying multi-step attack methods designed for CNNs to CapsNets incurs a high computational cost because of the computationally expensive routing mechanism. Motivated by these two observations, we propose a novel vote attack, in which we attack the votes of CapsNets directly. By circumventing the routing process, our vote attack is both effective and efficient. Furthermore, we integrate the vote attack into the detection-aware attack paradigm, where it successfully bypasses the class-conditional reconstruction-based detection method. Extensive experiments demonstrate the superior attack performance of our vote attack on CapsNets.
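To make the idea of attacking votes instead of routed output capsules concrete, the following is a minimal toy sketch, not the implementation from this work. Everything in it is an assumption made for illustration: the linear "primary capsules" `P`, the vote matrices `W`, the choice to average votes per class and squash them, the cross-entropy loss, the single sign-gradient step, and the finite-difference gradient (a real attack would use automatic differentiation). All function names are hypothetical.

```python
import math
import random

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * c for m, c in zip(row, v)) for row in M]

def squash(s):
    """Capsule squashing: keeps the direction, maps the length into [0, 1)."""
    n2 = sum(c * c for c in s)
    if n2 == 0.0:
        return [0.0] * len(s)
    scale = math.sqrt(n2) / (1.0 + n2)
    return [scale * c for c in s]

def vote_scores(x, P, W):
    """Class scores computed from votes only (assumed toy model, no routing).

    Primary capsule i is u_i = P[i] x and its vote for class j is W[i][j] u_i.
    Votes for each class are averaged, squashed, and scored by their length.
    """
    prim = [matvec(Pi, x) for Pi in P]
    scores = []
    for j in range(len(W[0])):
        votes_j = [matvec(W[i][j], prim[i]) for i in range(len(P))]
        mean_vote = [sum(col) / len(votes_j) for col in zip(*votes_j)]
        scores.append(math.sqrt(sum(c * c for c in squash(mean_vote))))
    return scores

def vote_loss(x, label, P, W):
    """Cross-entropy of a softmax over the vote-based class scores."""
    s = vote_scores(x, P, W)
    return math.log(sum(math.exp(v) for v in s)) - s[label]

def vote_attack_step(x, label, P, W, eps=0.01, h=1e-5):
    """One untargeted sign-gradient step that increases the vote loss.

    The gradient is estimated by central finite differences so that the
    sketch needs no autodiff library.
    """
    grad = []
    for k in range(len(x)):
        up = x[:k] + [x[k] + h] + x[k + 1:]
        down = x[:k] + [x[k] - h] + x[k + 1:]
        grad.append((vote_loss(up, label, P, W) - vote_loss(down, label, P, W)) / (2 * h))
    # sign(g) is +1, -1, or 0, so each coordinate moves by at most eps.
    return [xk + eps * ((g > 0) - (g < 0)) for xk, g in zip(x, grad)]

def make_toy_model(seed=0, n_primary=4, n_classes=3, in_dim=6, cap_dim=3):
    """Random toy weights and input; purely illustrative."""
    rng = random.Random(seed)
    def rand_mat(rows, cols):
        return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]
    P = [rand_mat(cap_dim, in_dim) for _ in range(n_primary)]
    W = [[rand_mat(cap_dim, cap_dim) for _ in range(n_classes)] for _ in range(n_primary)]
    x = [rng.random() for _ in range(in_dim)]
    return P, W, x

P, W, x = make_toy_model()
x_adv = vote_attack_step(x, 0, P, W)  # label 0 is assumed to be the true class
print("vote loss before:", vote_loss(x, 0, P, W))
print("vote loss after: ", vote_loss(x_adv, 0, P, W))
```

Because the loss is defined on the votes themselves, no routing iterations are executed during the attack step, which is the source of the efficiency argument made above. Repeating `vote_attack_step` while projecting back into an `eps`-ball would give a multi-step variant under the same assumptions.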
