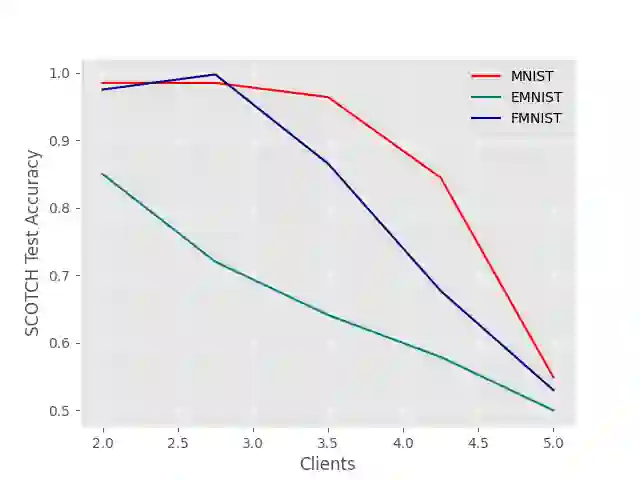

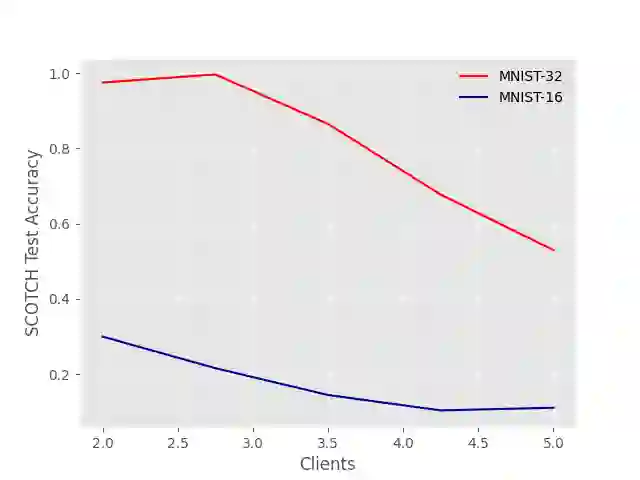

Federated learning enables multiple data owners to jointly train a machine learning model without revealing their private datasets. However, a malicious aggregation server might use the model parameters to derive sensitive information about the training dataset. To address such leakage, prior work has investigated differential privacy and cryptographic techniques, but these often incur large communication overheads or degrade model performance. To mitigate this centralization of power, we propose \textsc{Scotch}, a decentralized \textit{m-party} secure-computation framework for federated aggregation that deploys MPC primitives such as \textit{secret sharing}. Our protocol is simple and efficient, and it provides strict privacy guarantees against curious aggregators or colluding data owners, with minimal communication overhead compared to existing \textit{state-of-the-art} privacy-preserving federated learning frameworks. We evaluate our framework through extensive experiments on multiple datasets, with promising results. With the training dataset split among 3 participating users and 3 aggregating servers, \textsc{Scotch} trains a standard MLP neural network to 96.57\% accuracy on MNIST and 98.40\% accuracy on the Extended MNIST (digits) dataset, while providing various optimizations.
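To make the secret-sharing idea concrete, the following is a minimal sketch of additive secret sharing applied to federated averaging with 3 clients and 3 servers. It is a generic illustration, not the \textsc{Scotch} protocol as specified by the authors: the modulus `MODULUS`, the fixed-point factor `SCALE`, and the helper names `encode`, `decode`, `share`, and `aggregate` are all assumptions introduced here.

```python
import secrets

# Assumed parameters for illustration only (not taken from the paper).
MODULUS = 2**61 - 1   # shares live in the integers modulo this value
SCALE = 10**6         # fixed-point factor for encoding real-valued weights


def encode(x: float) -> int:
    """Map a real weight to a fixed-point residue modulo MODULUS."""
    return round(x * SCALE) % MODULUS


def decode(v: int, count: int = 1) -> float:
    """Map a residue back to a real number, dividing by `count` to average."""
    if v > MODULUS // 2:          # interpret large residues as negatives
        v -= MODULUS
    return v / (SCALE * count)


def share(value: int, n_servers: int) -> list[int]:
    """Split `value` into n_servers additive shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_servers - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def aggregate(client_updates: list[list[float]], n_servers: int = 3) -> list[float]:
    """Average client updates while each server only ever sees random shares."""
    n_params = len(client_updates[0])
    # server_sums[s][j]: running sum of the shares server s holds for parameter j
    server_sums = [[0] * n_params for _ in range(n_servers)]
    for update in client_updates:
        for j, weight in enumerate(update):
            for s, sh in enumerate(share(encode(weight), n_servers)):
                server_sums[s][j] = (server_sums[s][j] + sh) % MODULUS
    # Only the combined per-server sums reveal the aggregate, never one update.
    totals = [
        sum(server_sums[s][j] for s in range(n_servers)) % MODULUS
        for j in range(n_params)
    ]
    return [decode(t, count=len(client_updates)) for t in totals]


if __name__ == "__main__":
    updates = [[0.1, -0.2], [0.3, 0.4], [0.5, -0.8]]  # three clients, two weights
    print(aggregate(updates))
```

In this sketch each server's view is a sum of uniformly random residues, so a single server learns nothing about an individual client's update; only the combination of all servers' sums yields the average. Real deployments also need to handle colluding servers, dropouts, and overflow of the fixed-point range, which this example leaves out.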
