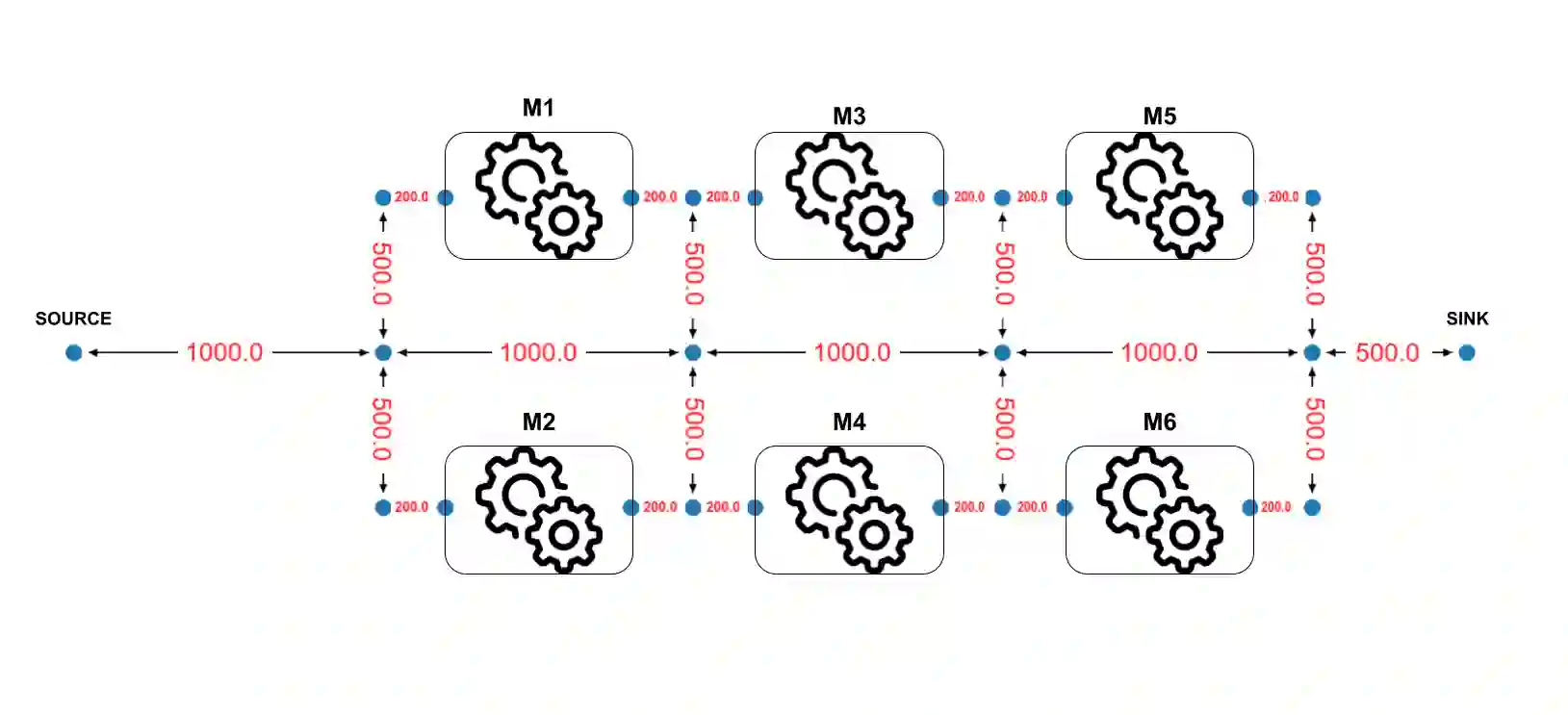

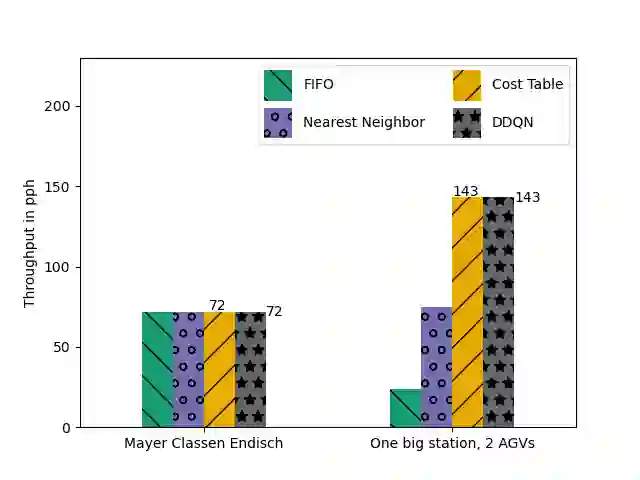

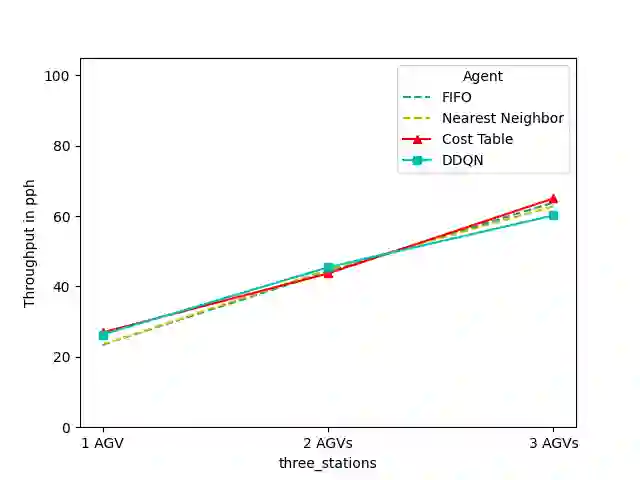

We investigate the feasibility of deploying Deep-Q based deep reinforcement learning (DRL) agents for job-shop scheduling problems in modular production facilities, using discrete-event simulations as environments. These environments consist of a source and a sink for the parts to be processed, as well as one or more workstations. The agents are trained to schedule automated guided vehicles (AGVs) that transport the parts between these stations as efficiently as possible. Starting from a very simple setup, we gradually increase the complexity of the environment and compare the agents' performance with well-established heuristic approaches, such as first-in-first-out (FIFO) agents, cost tables, and a nearest-neighbor approach. We furthermore identify environment configurations in which the heuristic approaches struggle, to investigate to what degree the Deep-Q agents are affected by the same challenges. We find that the Deep-Q based agents perform comparably to the heuristic baselines. Our findings further suggest that the DRL agents are more robust to noise than the conventional approaches. Overall, we find that DRL agents constitute a valuable approach for this type of scheduling problem.
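
To make the comparison more concrete, the following is a minimal sketch of two of the baseline dispatch rules named above (FIFO and nearest-neighbor) alongside the one-step Deep-Q learning target that a DQN agent regresses towards. It is not the authors' implementation: the `TransportOrder` type and the functions `fifo_dispatch`, `nearest_neighbor_dispatch`, and `dqn_target` are hypothetical, Euclidean distance between station coordinates is an assumed stand-in for real travel distances on the shop floor, and the cost-table baseline is omitted.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class TransportOrder:
    # Hypothetical transport request: move one part between two stations.
    order_id: int
    created_at: float  # simulation time at which the order was released
    pickup: Point      # (x, y) of the station currently holding the part
    dropoff: Point     # (x, y) of the destination station


def fifo_dispatch(orders: Sequence[TransportOrder]) -> TransportOrder:
    """FIFO baseline: an idle AGV serves the oldest open order."""
    return min(orders, key=lambda o: o.created_at)


def nearest_neighbor_dispatch(orders: Sequence[TransportOrder],
                              agv_pos: Point) -> TransportOrder:
    """Nearest-neighbor baseline: serve the order whose pickup is closest.

    Assumption: straight-line (Euclidean) distance approximates travel time.
    """
    return min(orders, key=lambda o: math.dist(agv_pos, o.pickup))


def dqn_target(reward: float, next_q_values: Sequence[float],
               gamma: float = 0.99, done: bool = False) -> float:
    """One-step Deep-Q target: r + gamma * max_a' Q(s', a'), or r if terminal."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)
```

With one old but distant order and one newer order next to the AGV, the two heuristics disagree: FIFO picks the older order, while nearest-neighbor picks the closer one. A learned Deep-Q policy instead chooses the action with the highest estimated value, and the target above is what those value estimates are trained to match.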

翻译:我们利用环境的离散事件模拟,调查在模块生产设施中部署基于深Q的深强化学习剂的可行性,以便在模块生产设施中将工场安排为工作单位的可行性,这些环境包括处理部件的源和汇,以及(几个)工作站。这些代理人受过训练,可以以最佳的方式安排自动制导车辆在这些站点之间往返运输部件的最佳方式。从简单化的设置开始,我们增加了环境的复杂性,并将这些代理人的性能与既定的超常方法,例如先入为主的代理人、成本表和近邻方法进行比较。我们还寻求对超常方法所挣扎的环境的具体配置,以调查深Q代理受到这些挑战影响的程度。我们发现,基于深Q的代理人表现出与超常基线相当的性能。此外,我们的研究结果表明,与常规方法相比,DL代理人对噪音表现出更大的活力。总体而言,我们发现DL代理人是这类列表问题的一种宝贵方法。