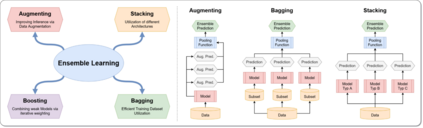

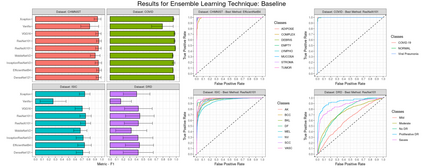

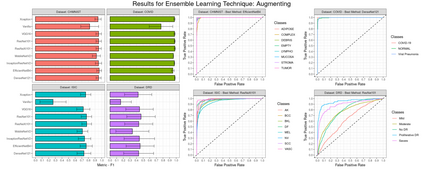

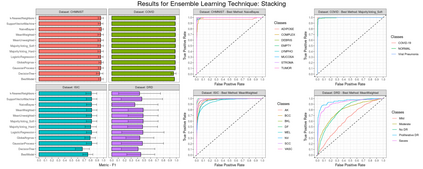

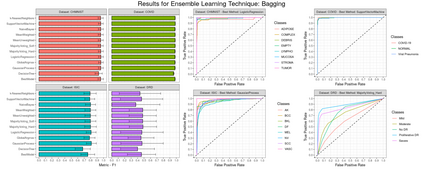

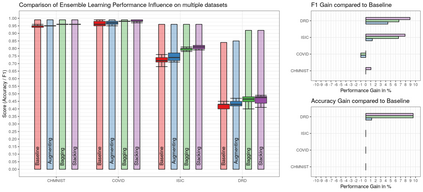

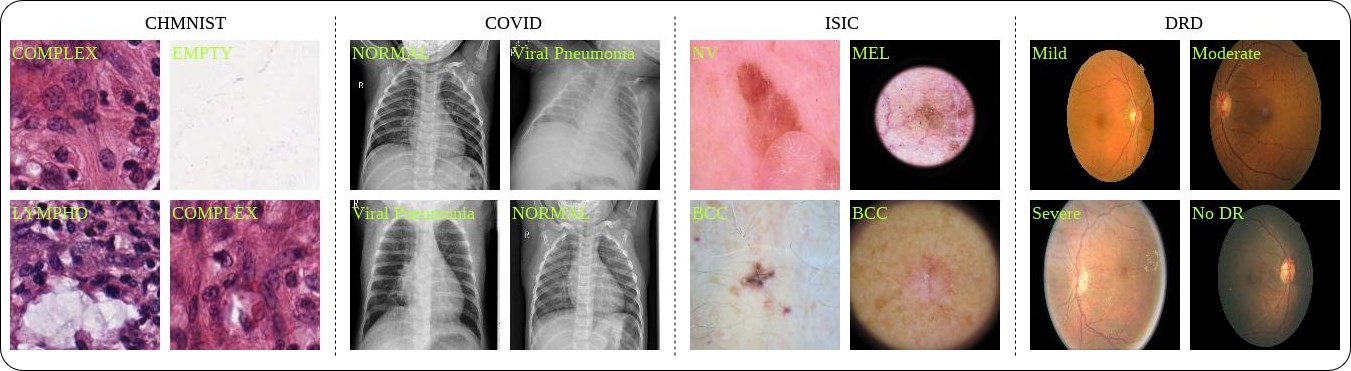

Novel, high-performance medical image classification pipelines rely heavily on ensemble learning strategies. The idea of ensemble learning is to assemble diverse models or multiple predictions and thereby boost prediction performance. However, it remains an open question to what extent, and which, ensemble learning strategies are beneficial in deep learning based medical image classification pipelines. In this work, we proposed a reproducible medical image classification pipeline for analyzing the performance impact of the following ensemble learning techniques: Augmenting, Stacking, and Bagging. The pipeline consists of state-of-the-art preprocessing and image augmentation methods as well as 9 deep convolutional neural network architectures. It was applied to four popular medical imaging datasets of varying complexity. Furthermore, 12 pooling functions for combining multiple predictions were analyzed, ranging from simple statistical functions like unweighted averaging to more complex learning-based functions like support vector machines. Our results revealed that Stacking achieved the largest performance gain, with an F1-score increase of up to 13%. Augmenting showed consistent improvements of up to 4% and is also applicable to pipelines based on a single model. Cross-validation based Bagging demonstrated a significant performance gain close to that of Stacking, with an F1-score increase of up to 11%. Furthermore, we demonstrated that simple statistical pooling functions perform as well as, and often even better than, more complex pooling functions. We concluded that the integration of ensemble learning techniques is a powerful method for any medical image classification pipeline to improve robustness and boost performance.
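To make the notion of a pooling function concrete, the following is a minimal sketch of two simple statistical pooling functions that combine the class-probability outputs of several ensemble members for one image. Unweighted averaging is named in the abstract; hard majority voting is included here only as an illustrative assumption of another simple function. The function names and the example probabilities are hypothetical and are not taken from the paper's pipeline.

```python
from collections import Counter
from typing import List, Sequence


def argmax(probs: Sequence[float]) -> int:
    """Return the index of the largest probability."""
    return max(range(len(probs)), key=probs.__getitem__)


def mean_pool(predictions: Sequence[Sequence[float]]) -> List[float]:
    """Unweighted averaging: average each class probability over all members."""
    n_members = len(predictions)
    n_classes = len(predictions[0])
    return [sum(p[c] for p in predictions) / n_members for c in range(n_classes)]


def majority_vote(predictions: Sequence[Sequence[float]]) -> int:
    """Hard majority voting (assumed for illustration): each member votes for its argmax class."""
    votes = [argmax(p) for p in predictions]
    return Counter(votes).most_common(1)[0][0]


# Hypothetical softmax outputs of three ensemble members for one image (3 classes).
member_predictions = [
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.5, 0.4, 0.1],
]

pooled = mean_pool(member_predictions)
mean_label = argmax(pooled)                        # label chosen by unweighted averaging
vote_label = majority_vote(member_predictions)     # label chosen by majority voting
```

In this made-up example the two functions disagree: averaging favors the class that one member predicts with high confidence, while voting follows the two members that agree. The same interface could be used for predictions from Augmenting (several augmented views of one image), Bagging (models trained on different cross-validation folds), or Stacking (different architectures), since each yields a list of per-member probability vectors.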
