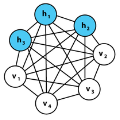

The idea of representing symbolic knowledge in connectionist systems is a long-standing endeavour that has recently attracted much attention, with the objective of combining machine learning and scalable sound reasoning. Early work showed a correspondence between propositional logic and symmetrical neural networks; however, these networks did not scale well with the number of variables, and their training regime was inefficient. In this paper, we introduce Logical Boltzmann Machines (LBM), a neurosymbolic system that can represent any propositional logic formula in strict disjunctive normal form. We prove the equivalence between energy minimization in LBM and logical satisfiability, thus showing that LBM is capable of sound reasoning. We evaluate reasoning empirically to show that LBM can find all satisfying assignments of a class of logical formulae by searching fewer than 0.75% of the possible (approximately 1 billion) assignments. We compare learning in LBM with a symbolic inductive logic programming system, a state-of-the-art neurosymbolic system and a purely neural network-based system, achieving better learning performance in five out of seven data sets.
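The abstract does not spell out how a formula becomes an energy function, so the following is only a minimal sketch under an assumed RBM-style construction, not necessarily the paper's exact one. Each conjunctive clause j, with positive literals P_j and negated literals N_j, gets one hidden unit h_j, and the energy is E(x, h) = -Σ_j h_j (Σ_{i∈P_j} x_i - Σ_{i∈N_j} x_i - |P_j| + ε) for an assumed margin 0 < ε < 1. The bracketed clause score is 0 when the clause holds and at most -1 otherwise, so minimizing over h gives an energy of -ε times the number of satisfied clauses. In a strict DNF at most one clause is true at a time, so the minimum energy -ε is reached exactly on the satisfying assignments. All names (`clause_score`, `min_energy`, `EPS`, and so on) are hypothetical, and the brute-force enumeration is just for checking the equivalence on toy formulas.

```python
from itertools import product

# Hypothetical encoding: a DNF formula is a list of conjunctive clauses, each a
# pair (positive_vars, negated_vars) of variable indices.
EPS = 0.5  # assumed margin; any 0 < EPS < 1 gives the same minimisers


def clause_score(x, clause):
    """Sum of positive literals minus negated variables minus |positives|.

    Equals 0 when x satisfies the clause and is <= -1 otherwise."""
    pos, neg = clause
    return sum(x[i] for i in pos) - sum(x[i] for i in neg) - len(pos)


def energy(x, h, clauses, eps=EPS):
    """RBM-style energy with one hidden unit h[j] per conjunctive clause."""
    return -sum(hj * (clause_score(x, c) + eps) for hj, c in zip(h, clauses))


def min_energy(x, clauses, eps=EPS):
    """Minimise over hidden units: switch h[j] on only if it lowers the energy."""
    h = [1 if clause_score(x, c) + eps > 0 else 0 for c in clauses]
    return energy(x, h, clauses, eps)


def satisfies(x, clauses):
    """A DNF formula is true iff at least one conjunctive clause is true."""
    return any(clause_score(x, c) == 0 for c in clauses)


def minimum_energy_states(n_vars, clauses, eps=EPS):
    """Enumerate all 2^n assignments and return those with the lowest energy."""
    scored = [(min_energy(x, clauses, eps), x)
              for x in product((0, 1), repeat=n_vars)]
    best = min(e for e, _ in scored)
    return [x for e, x in scored if e == best]


# (x0 AND NOT x1) OR (NOT x0 AND x1 AND x2): the clauses are mutually exclusive.
formula = [(frozenset({0}), frozenset({1})), (frozenset({1, 2}), frozenset({0}))]
print(minimum_energy_states(3, formula))  # [(0, 1, 1), (1, 0, 0), (1, 0, 1)]
```

In this sketch, strictness matters: encoding x0 OR x1 as two overlapping clauses gives the assignment (1, 1) an energy of -2ε, so it becomes the only minimum, whereas the equivalent strict form (x0) OR (NOT x0 AND x1) makes all three satisfying assignments equally low. Exhaustive enumeration is exponential; the abstract reports that LBM's search visits under 0.75% of roughly a billion assignments, which this sketch does not attempt to reproduce.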
