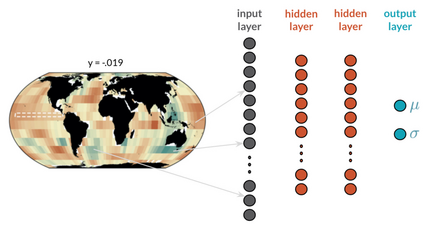

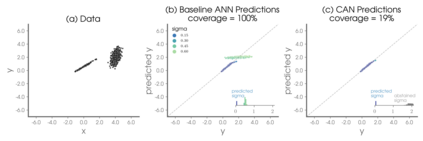

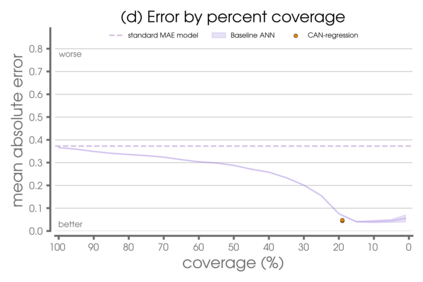

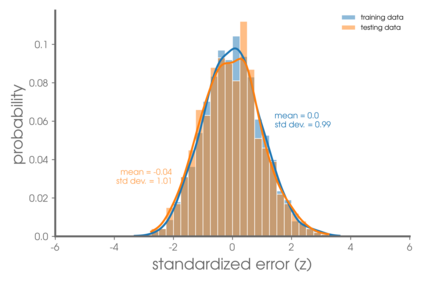

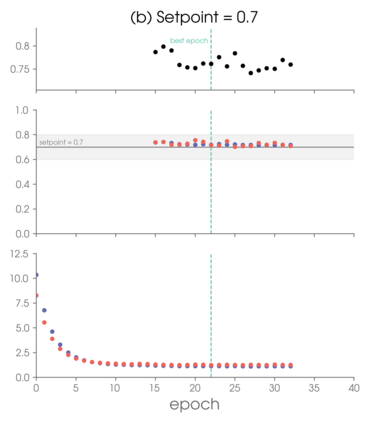

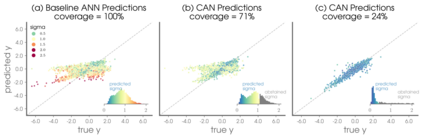

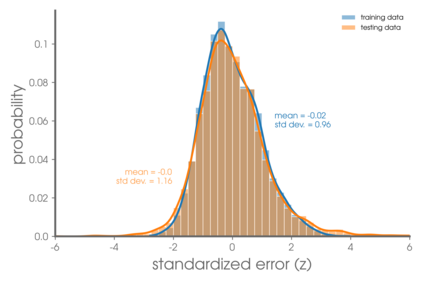

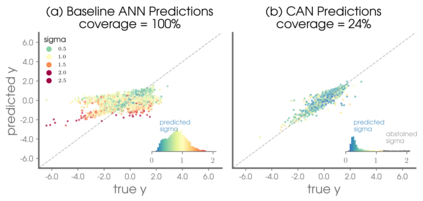

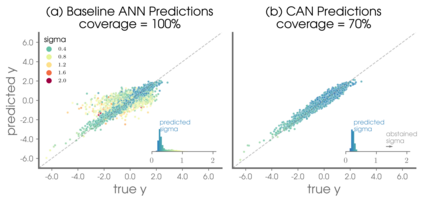

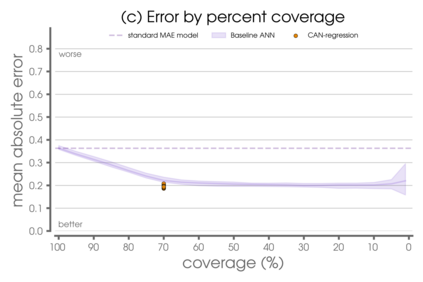

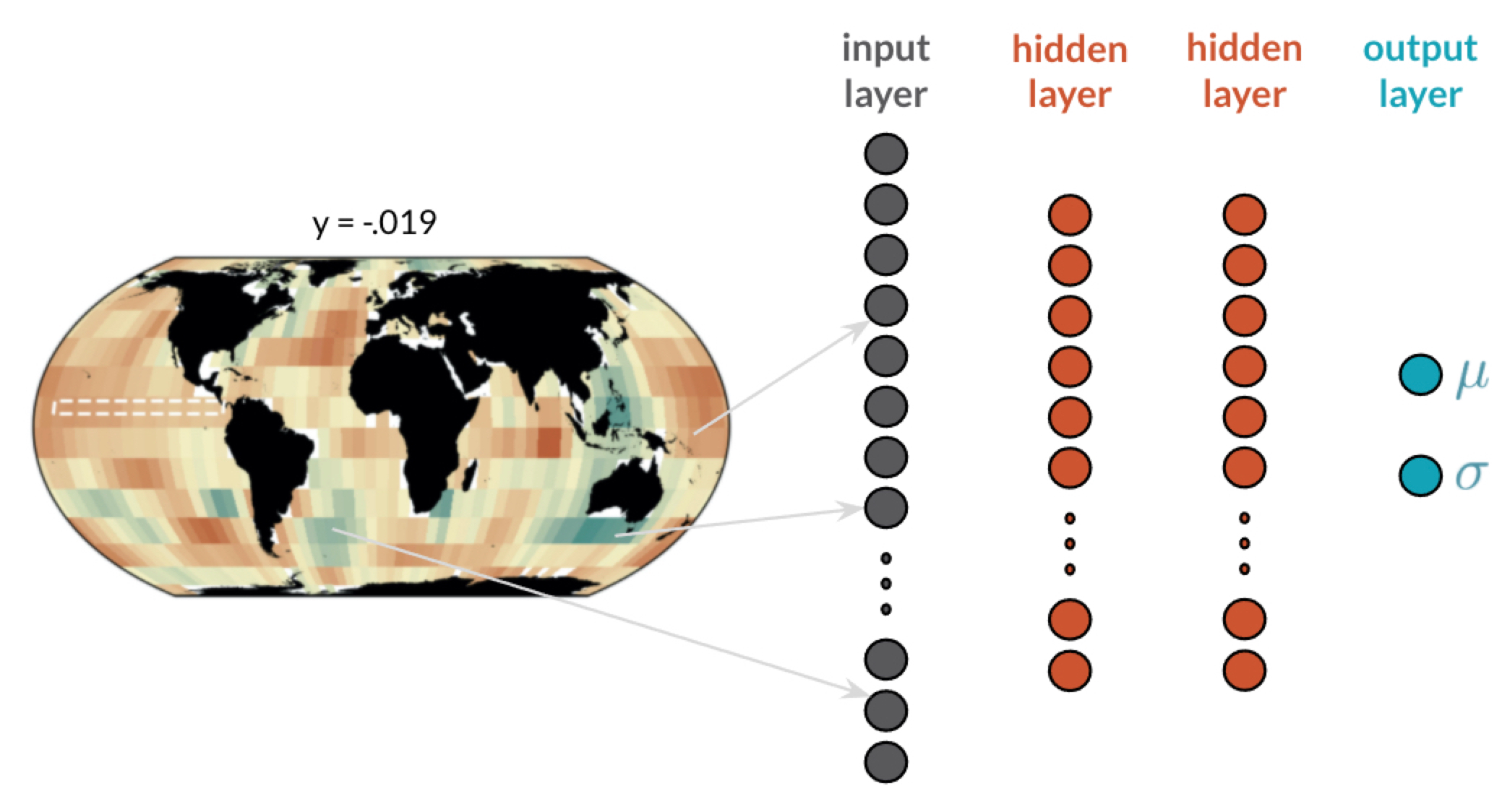

The Earth system is exceedingly complex and often chaotic in nature, making prediction incredibly challenging: we cannot expect to make perfect predictions all of the time. Instead, we look for specific states of the system that lead to more predictable behavior than others, often termed "forecasts of opportunity". When these opportunities are not present, scientists need prediction systems that are capable of saying "I don't know." We introduce a novel loss function, termed "abstention loss", that allows neural networks to identify forecasts of opportunity for regression problems. The abstention loss works by incorporating uncertainty in the network's prediction to identify the more confident samples and abstain (say "I don't know") on the less confident samples. The abstention loss is designed either to determine the optimal abstention fraction or to abstain on a user-defined fraction via a PID controller. Unlike many methods that attach uncertainty to neural network predictions after training, the abstention loss is applied during training so that the network preferentially learns from the more confident samples. The abstention loss is built upon a standard computer science method. While the standard approach is itself a simple yet powerful tool for incorporating uncertainty in regression problems, we demonstrate that the abstention loss outperforms this more standard method for the synthetic climate use cases explored here. The implementation of the proposed loss function is straightforward in most network architectures designed for regression, as it only requires modification of the output layer and loss function.
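The abstract does not give the formula for the abstention loss, so the following is only a minimal sketch of the general idea it builds on, not the proposed method. It assumes a regression network whose output layer returns a predicted mean and a predicted spread per sample, scored with a Gaussian negative log-likelihood, and it abstains after the fact on the least confident fraction. The function names `gaussian_nll` and `abstain_mask`, the Gaussian form, and the sample values are all illustrative assumptions.

```python
import math

def gaussian_nll(y_true, mu, sigma):
    """Per-sample negative log-likelihood of y_true under N(mu, sigma^2).

    Assumption: the network outputs (mu, sigma) instead of a single value.
    """
    return 0.5 * math.log(2.0 * math.pi * sigma ** 2) + 0.5 * ((y_true - mu) / sigma) ** 2

def abstain_mask(sigmas, abstain_fraction):
    """Mark the `abstain_fraction` of samples with the largest predicted sigma.

    Returns a list of booleans; True means "I don't know" for that sample.
    """
    n_abstain = int(round(abstain_fraction * len(sigmas)))
    # Indices ordered from most uncertain to least uncertain.
    order = sorted(range(len(sigmas)), key=lambda i: sigmas[i], reverse=True)
    abstain = set(order[:n_abstain])
    return [i in abstain for i in range(len(sigmas))]

# Hypothetical network outputs for five samples (made-up numbers).
y_true = [1.0, 2.0, 3.0, 4.0, 5.0]
mu = [1.1, 2.5, 2.9, 6.0, 5.2]
sigma = [0.2, 1.0, 0.1, 2.5, 0.3]

losses = [gaussian_nll(y, m, s) for y, m, s in zip(y_true, mu, sigma)]
mask = abstain_mask(sigma, abstain_fraction=0.4)

# Absolute errors on the samples the model did not abstain on.
kept_errors = [abs(y - m) for y, m, skip in zip(y_true, mu, mask) if not skip]
print(mask)
print(kept_errors)
```

In this sketch abstention is a post-training threshold on the predicted spread. The abstention loss described above differs in that abstention is part of the training objective, and a PID controller can steer the abstained fraction toward a user-defined target; neither of those pieces is reproduced here.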
