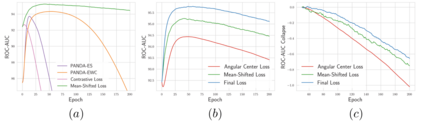

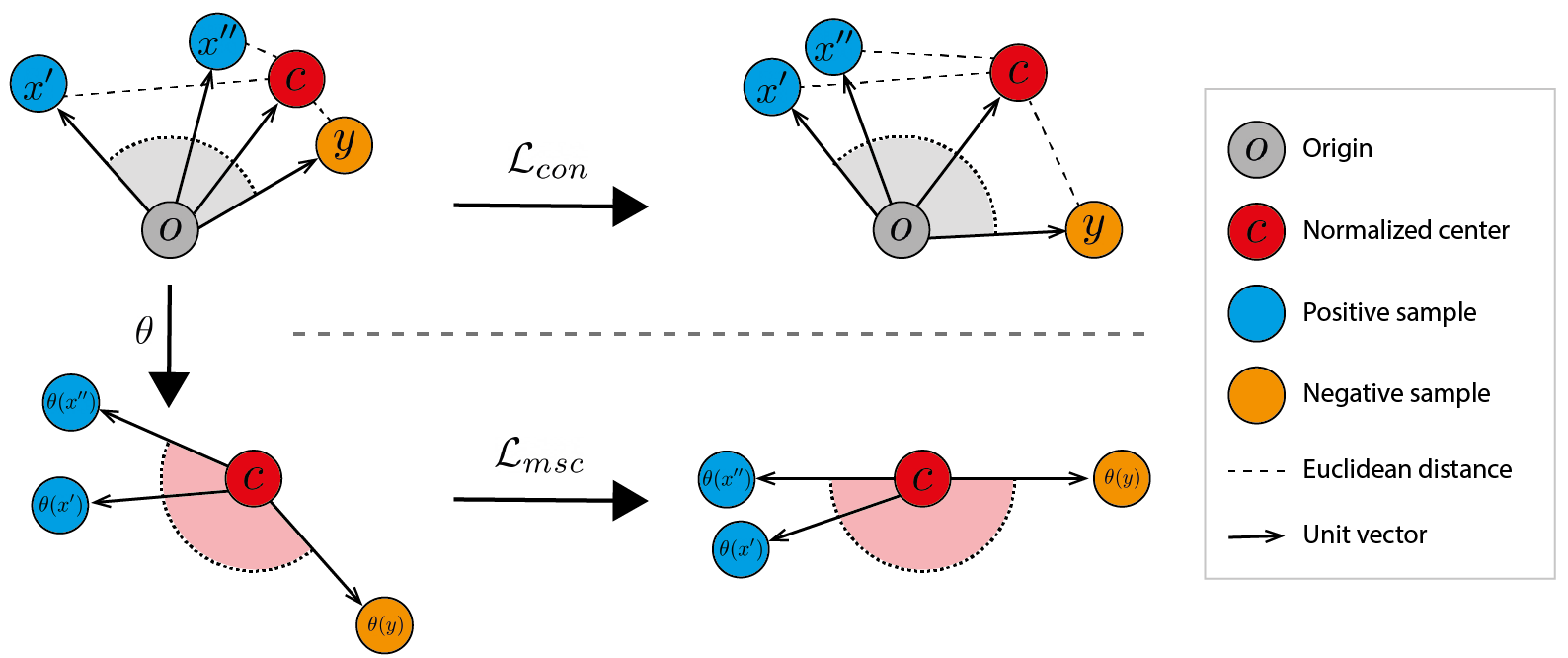

Deep anomaly detection methods learn representations that separate normal from anomalous samples. Very effective representations are obtained when powerful externally trained feature extractors (e.g. ResNets pre-trained on ImageNet) are fine-tuned on the training data, which consists only of normal samples and no anomalies. However, this fine-tuning is difficult and can suffer from catastrophic collapse, i.e. the model is prone to learning trivial and non-specific features. In this paper, we propose a new loss function that can overcome the failure modes of both center-loss and contrastive-loss methods. Furthermore, we combine it with a confidence-invariant angular center loss, which replaces the Euclidean distance used in previous work; that distance is sensitive to prediction confidence. Our improvements yield a new anomaly detection approach, based on a *Mean-Shifted Contrastive Loss*, which is both more accurate and less sensitive to catastrophic collapse than previous methods. Our method achieves state-of-the-art anomaly detection performance on multiple benchmarks, including $97.5\%$ ROC-AUC on the CIFAR-10 dataset.
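The two ideas named above can be illustrated with a minimal pure-Python sketch. It is written under assumptions this abstract does not state: features are L2-normalized; the center `c` is the mean of the normalized training features; "mean-shifting" means subtracting `c` from a normalized feature and renormalizing; and the contrastive term is an NT-Xent-style loss with a hypothetical temperature `tau=0.25`. All function names are hypothetical, and rescaling a feature vector is used only as a stand-in for higher prediction confidence. This is an illustration, not the authors' implementation.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale v to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def feature_center(train_feats: List[Vector]) -> Vector:
    """Assumed center: mean of the L2-normalized training features."""
    normed = [l2_normalize(z) for z in train_feats]
    dim = len(normed[0])
    return [sum(z[k] for z in normed) / len(normed) for k in range(dim)]


def euclidean_center_loss(z: Vector, center: Vector) -> float:
    """Squared Euclidean distance to the center; changes when ||z|| changes."""
    return sum((x - c) ** 2 for x, c in zip(z, center))


def angular_center_loss(z: Vector, center: Vector) -> float:
    """Negative cosine similarity to the center; unchanged if z is rescaled."""
    return -dot(l2_normalize(z), l2_normalize(center))


def mean_shift(z: Vector, center: Vector) -> Vector:
    """Hypothetical helper: normalize z, subtract the center, renormalize."""
    shifted = [x - c for x, c in zip(l2_normalize(z), center)]
    return l2_normalize(shifted)


def mean_shifted_contrastive_loss(views_a: List[Vector], views_b: List[Vector],
                                  center: Vector, tau: float = 0.25) -> float:
    """NT-Xent-style loss on mean-shifted features of two augmented views.

    views_a[i] and views_b[i] are assumed to be two augmentations of sample i
    (the positive pair); every other view in the batch acts as a negative.
    """
    feats = [mean_shift(z, center) for z in views_a + views_b]
    n = len(views_a)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of the same sample
        sims = {j: dot(feats[i], feats[j]) / tau for j in range(2 * n) if j != i}
        log_denom = math.log(sum(math.exp(s) for s in sims.values()))
        total += log_denom - sims[pos]  # -log softmax of the positive pair
    return total / (2 * n)


if __name__ == "__main__":
    c = feature_center([[1.0, 0.2, 0.1], [0.9, 0.3, 0.0], [1.1, 0.1, 0.2]])
    z = [0.8, 0.4, 0.1]
    z_conf = [3 * x for x in z]  # same direction, larger norm
    print(euclidean_center_loss(z, c), euclidean_center_loss(z_conf, c))  # differ
    print(angular_center_loss(z, c), angular_center_loss(z_conf, c))  # equal up to rounding
    u, v = [1.0, 0.5, 0.0], [0.2, 1.0, 0.3]
    print(mean_shifted_contrastive_loss([u, v], [u, v], c))  # matched pairs
    print(mean_shifted_contrastive_loss([u, v], [v, u], c))  # mismatched pairs, larger
```

The usage lines show the property the abstract calls confidence invariance: scaling a feature changes the Euclidean center loss but leaves the angular loss unchanged. Measuring similarities after mean-shifting, rather than around the origin, is the design choice the sketch assumes for the contrastive term; correctly matched view pairs yield a lower loss than mismatched ones.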
