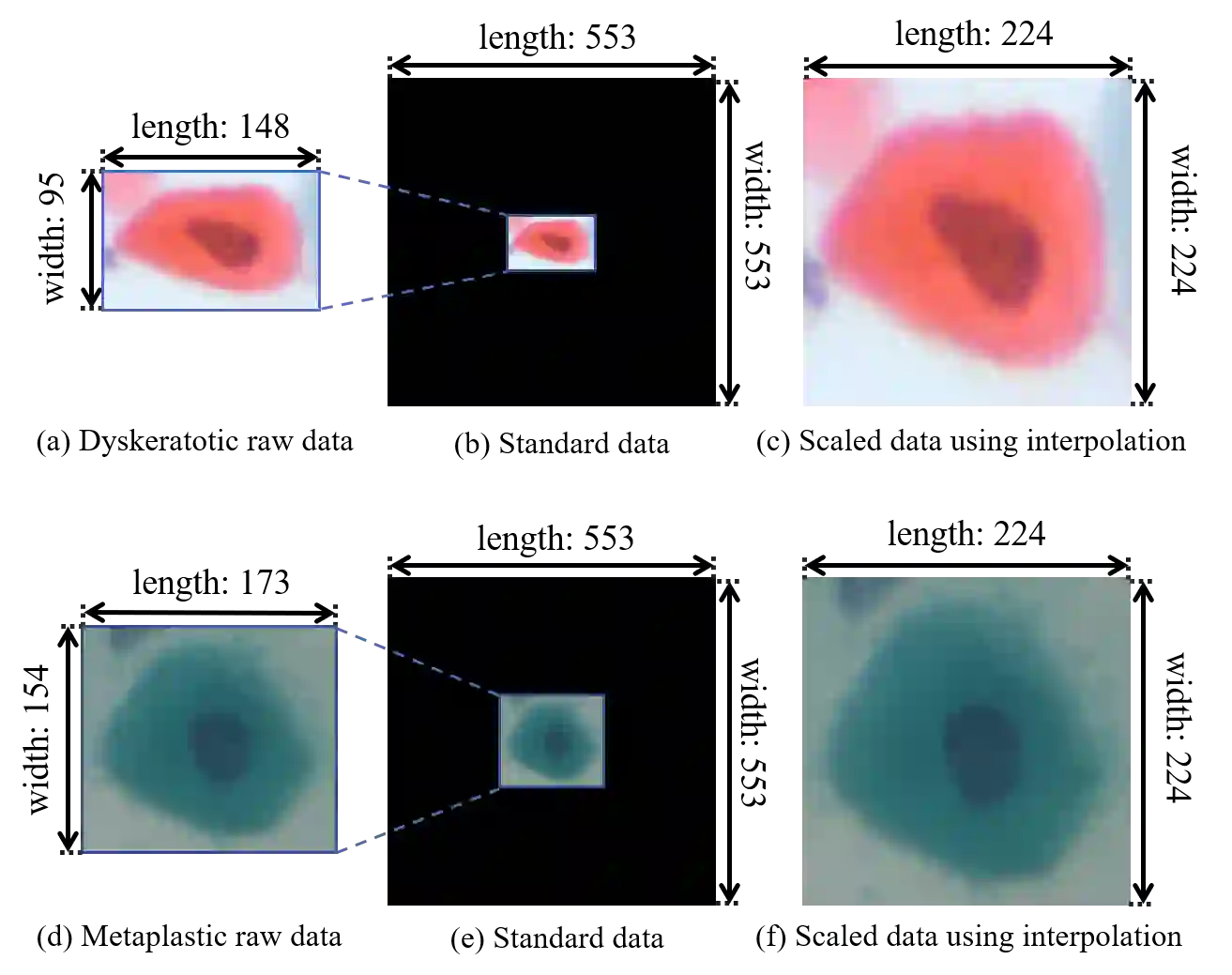

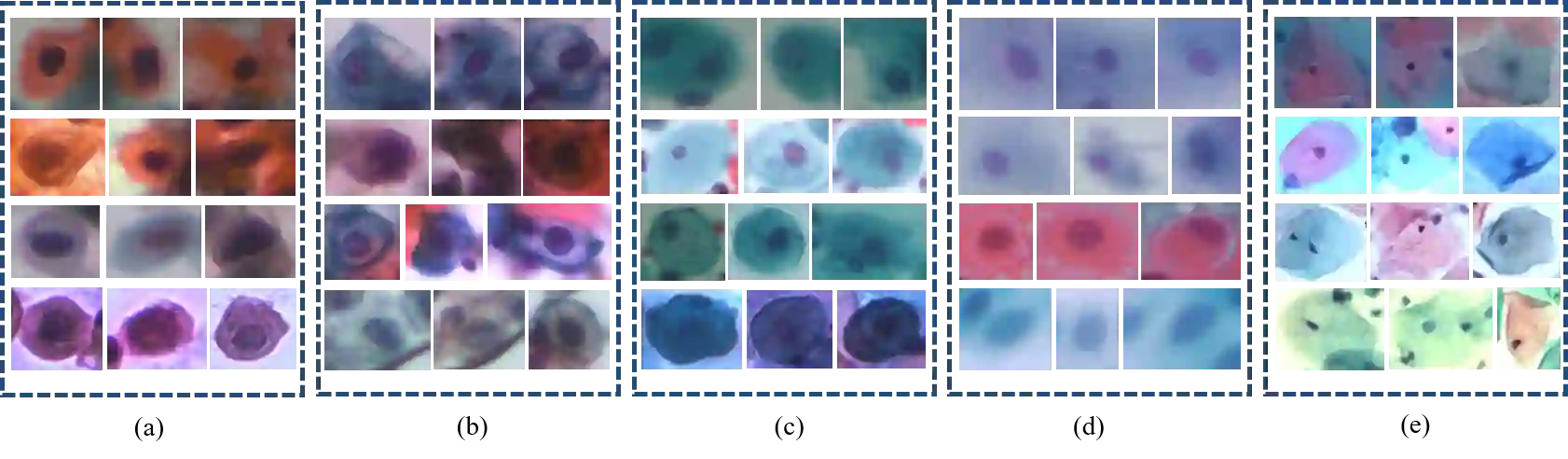

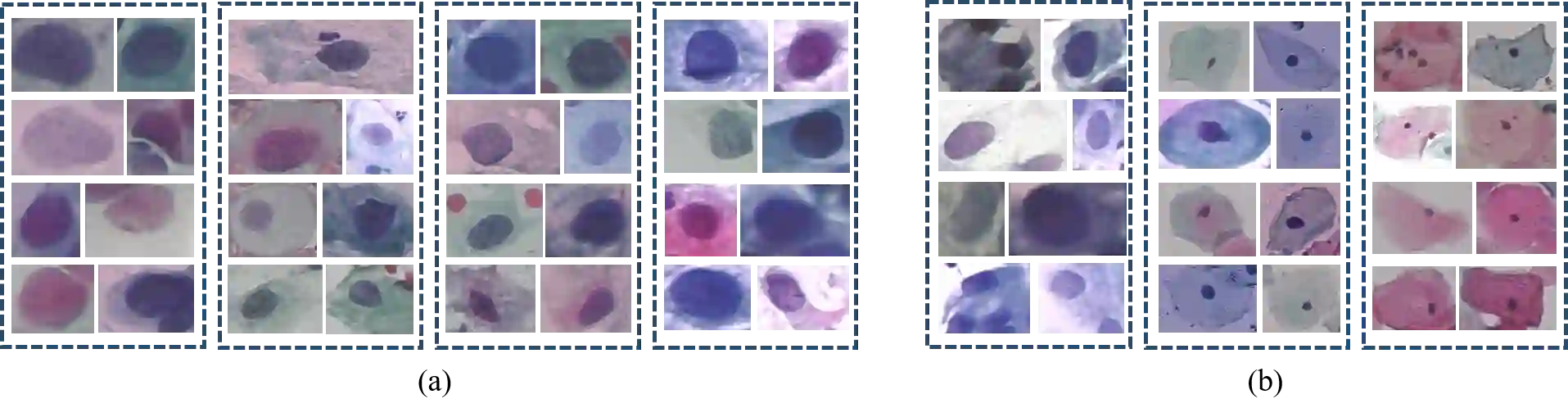

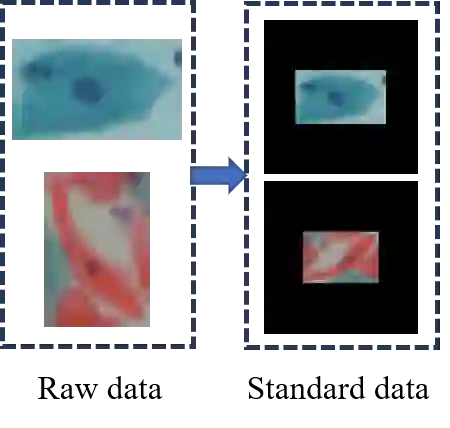

Cervical cancer is a common and often fatal cancer in women, and cytopathological images are widely used to screen for it. Because manual screening is prone to errors, computer-aided diagnosis (CAD) systems based on deep learning have been developed. Deep learning models require input images of a fixed size, but clinical medical images vary in size, and resizing them directly distorts their aspect ratios. Clinically, the aspect ratios of the cells in cytopathological images give doctors important information for diagnosing cancer, so resizing images directly seems unjustified. Nevertheless, many existing studies resized images directly and still obtained highly robust classification results. To explain this apparent contradiction, we conducted a series of comparative experiments. First, the raw data of the SIPaKMeD dataset are preprocessed to obtain a standard dataset and a scaled dataset. Then, both datasets are resized to 224 × 224 pixels. Finally, twenty-two deep learning models are used to classify the standard and scaled datasets. We conclude that deep learning models are robust to changes in the aspect ratio of cells in cervical cytopathological images. This conclusion is also validated on the Herlev dataset.
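
The distortion caused by direct resizing can be made concrete: a cell with aspect ratio $w/h$ inside a $W \times H$ image ends up with aspect ratio $(w/h)\cdot(H/W)$ after being stretched to a square, whereas padding the image to a square first scales both axes by the same factor. The sketch below illustrates both options on a toy grayscale image stored as a list of rows. The abstract does not say exactly how the standard and scaled datasets are built, so the mapping used here ("scaled" = stretch directly to 224 × 224, "standard" = zero-pad to a square, then resize) is an assumption. All function names are hypothetical, and nearest-neighbour interpolation stands in for whatever interpolation the original pipeline used.

```python
from typing import List

Image = List[List[int]]  # toy grayscale image: a list of rows of pixel values


def resize_nearest(img: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize to out_h x out_w (ignores the input aspect ratio)."""
    in_h, in_w = len(img), len(img[0])
    return [
        [img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def pad_to_square(img: Image, fill: int = 0) -> Image:
    """Pad the shorter side symmetrically with `fill` so that height == width."""
    h, w = len(img), len(img[0])
    side = max(h, w)
    top, left = (side - h) // 2, (side - w) // 2
    out = [[fill] * side for _ in range(side)]
    for r in range(h):
        for c in range(w):
            out[top + r][left + c] = img[r][c]
    return out


def scaled_version(img: Image, size: int = 224) -> Image:
    # Assumed "scaled" preprocessing: stretch straight to size x size,
    # which changes cell aspect ratios whenever the image is not square.
    return resize_nearest(img, size, size)


def standard_version(img: Image, size: int = 224) -> Image:
    # Assumed "standard" preprocessing: pad to a square first, so the
    # subsequent resize scales both axes by the same factor.
    return resize_nearest(pad_to_square(img), size, size)


def bbox_aspect(img: Image, threshold: int = 1) -> float:
    """Width / height of the bounding box of all pixels >= threshold."""
    rows = [r for r, row in enumerate(img) if any(p >= threshold for p in row)]
    cols = [c for c in range(len(img[0])) if any(row[c] >= threshold for row in img)]
    return (max(cols) - min(cols) + 1) / (max(rows) - min(rows) + 1)


# Usage: a 100 x 200 (height x width) image containing a square 20 x 20 "cell".
demo = [[0] * 200 for _ in range(100)]
for r in range(40, 60):
    for c in range(90, 110):
        demo[r][c] = 255

print(bbox_aspect(demo))                    # 1.0
print(bbox_aspect(scaled_version(demo)))    # about 0.51: the cell is stretched vertically
print(bbox_aspect(standard_version(demo)))  # 1.0: padding preserves the ratio
```

Here $H/W = 100/200 = 0.5$, so direct stretching roughly halves the cell's aspect ratio, while the padded version keeps it at 1. Padding with 0 suits this toy example; real cytology slides usually have a light background, so a different fill value would likely be more appropriate.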

翻译:宫颈癌是妇女非常常见和致命的癌症。 细胞病理学图像经常被用来筛查癌症。 由于人工筛查中可能出现大量错误, 开发了基于深层学习的计算机辅助诊断系统。 深层次的学习方法需要固定的输入图像大小, 但临床医学图像的大小不一致。 图像的侧面比例在直接调整其大小时会受到影响。 临床中, 细胞病理学图像内的细胞的侧面比例为医生诊断癌症提供了重要信息。 因此, 直接调整比例是不合逻辑的。 但是, 许多现有研究直接调整图像大小并获得非常可靠的分类结果。 为了找到合理的解释, 我们进行了一系列比较实验。 首先, SIPAKMEMD数据集的原始数据是预先处理的, 以获得标准和缩放数据集。 然后, 数据集被重新缩放到 224 x 224 像素。 最后, 使用22 深学习模型来分类标准和缩放数据集。 结论是, 深层次的学习模型对于她宫颈细胞图象的方面比例的变化也很可靠。