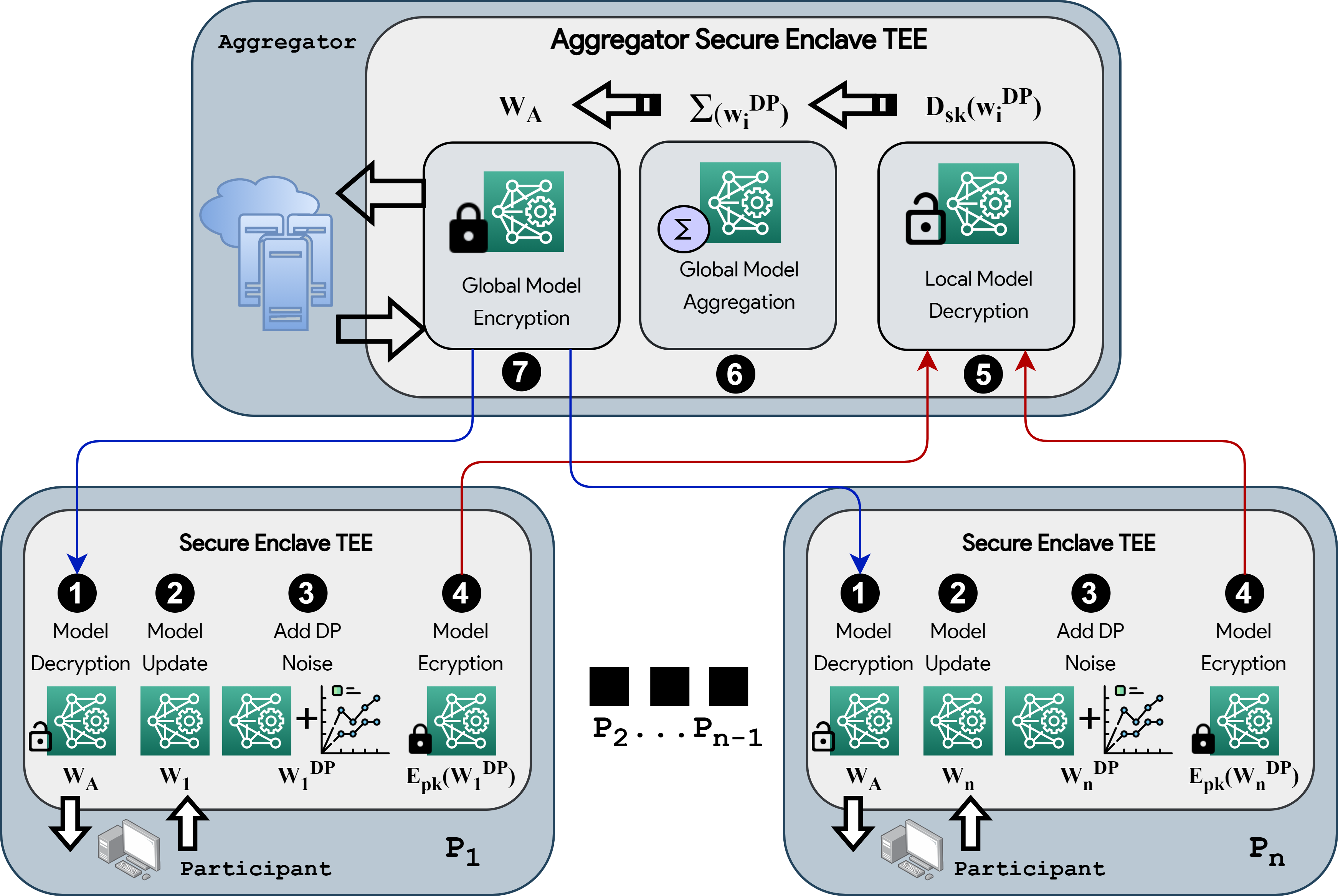

Federated learning allows multiple parties to train a machine learning model in a distributed fashion by sharing local model parameters instead of their private data. However, the exchanged parameters may still leak information about that data. Several approaches based on multi-party computation, fully homomorphic encryption, and related techniques have been proposed to address this. Many of these protocols, however, are slow and impractical for real-world use because they involve a large number of cryptographic operations. In this paper, we propose using Trusted Execution Environments (TEEs), which provide a platform for the isolated execution of code and handling of data, for this purpose. We describe Flatee, an efficient privacy-preserving federated learning framework across TEEs that considerably reduces training and communication time. Our framework can handle malicious parties. It does not natively address adversarial data poisoning, although we describe a preliminary approach to handle it.
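As an illustration of the parameter-sharing step described above, the sketch below applies the standard federated averaging (FedAvg) rule to a toy one-parameter linear model. This is an assumption made for illustration, not Flatee's protocol. The functions `local_train`, `federated_average`, and `run_rounds` are hypothetical names, and the TEE, remote attestation, and encryption layers are not modeled at all. The point is only that each party trains on its own data and sends out nothing but a parameter and a sample count. In a TEE-based design, the aggregation step is the natural candidate for isolated execution.

```python
from typing import List, Tuple

# Private (x, y) pairs held by a single party; these never leave the party.
Dataset = List[Tuple[float, float]]


def local_train(w: float, data: Dataset, lr: float = 0.05, epochs: int = 20) -> float:
    """Full-batch gradient descent on mean squared error for y ~ w * x.

    Only the returned parameter is shared with the aggregator, not the data.
    """
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(updates: List[Tuple[float, int]]) -> float:
    """Combine (parameter, sample_count) pairs, weighting each by its sample count."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


def run_rounds(parties: List[Dataset], rounds: int = 10) -> float:
    """Alternate local training and aggregation for a fixed number of rounds."""
    w_global = 0.0
    for _ in range(rounds):
        # Each party starts from the current global model and trains locally.
        updates = [(local_train(w_global, d), len(d)) for d in parties]
        # Hypothetically, this is the step a TEE-hosted aggregator would run.
        w_global = federated_average(updates)
    return w_global


if __name__ == "__main__":
    # Three hypothetical parties whose private data all follow y = 3x.
    parties = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
        [(2.5, 7.5)],
    ]
    print(round(run_rounds(parties), 3))
```

Weighting by sample count keeps a party with a small dataset from pulling the global model as strongly as a party with a large one. Because all three toy datasets share the same underlying relation, the global parameter converges to 3.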