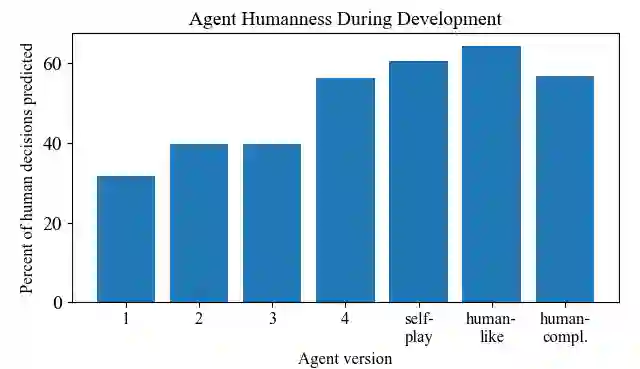

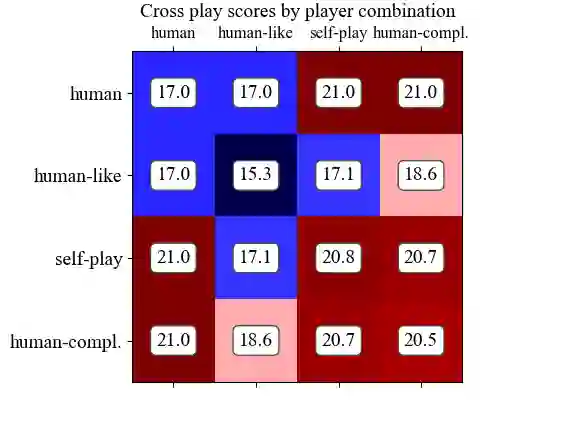

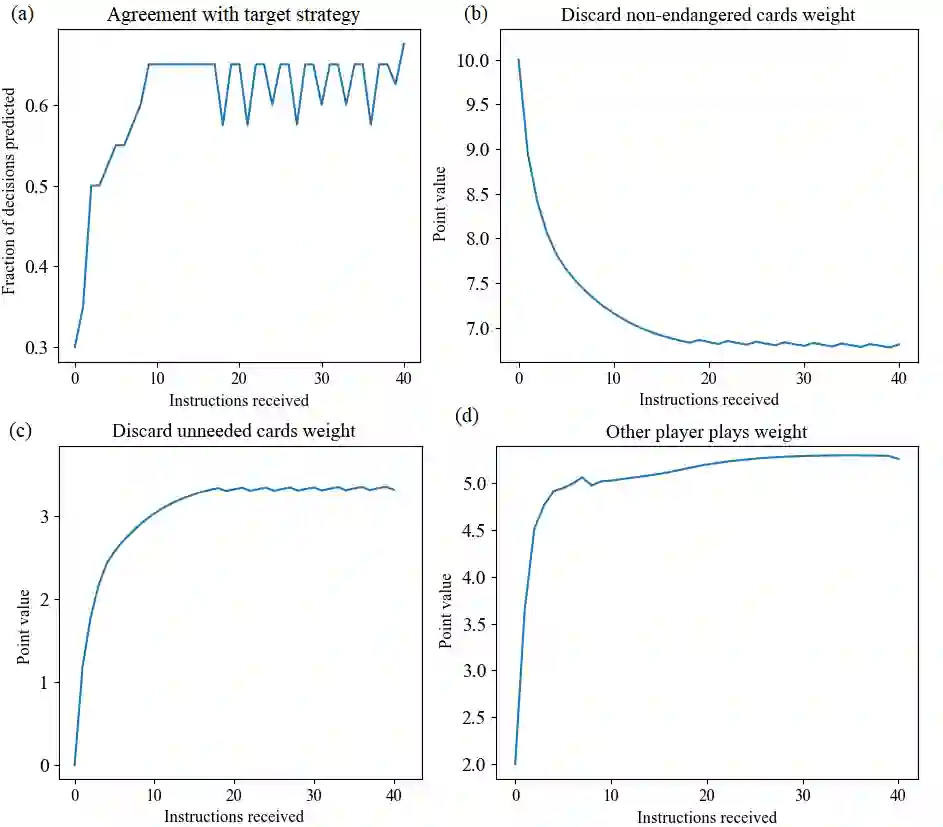

We propose a novel approach to explainable AI (XAI) based on the concept of "instruction" from neural networks. In this case study, we demonstrate how a superhuman neural network might instruct human trainees, as an alternative to traditional approaches to XAI. Specifically, an AI examines human actions and calculates variations on the human strategy that lead to better performance. Experiments with a JHU/APL-developed AI player for the cooperative card game Hanabi suggest that this technique makes unique contributions to explainability while improving human performance. One focus of Instructive AI is the significant discrepancy that can arise between the strategy a human actually uses and the strategy they profess to use. This inaccurate self-assessment is a barrier for XAI, because human recipients may not properly understand or implement explanations of an AI's strategy. We have developed, and are testing, a novel Instructive AI approach that estimates human strategy by observing human actions. With neural networks, this allows a direct calculation of the weight changes needed for the estimated human strategy to better emulate a more successful AI. Subject to constraints (e.g., sparsity), these weight changes can be interpreted as recommended changes to human strategy (e.g., "value A more, and value B less"). Such instruction from an AI serves both to help humans perform tasks better and to help them better understand, anticipate, and correct the AI's actions. We will present results on the ability of AI instruction to improve human decision-making and human-AI teaming in Hanabi.
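
The abstract does not specify the model, features, or optimizer, so the following is only a minimal sketch of the general idea under stated assumptions. A linear softmax policy over hand-crafted action features (effectively a one-layer network) stands in for the neural network, and an L1 penalty solved by proximal gradient descent (ISTA) stands in for the sparsity constraint. Step 1 estimates human weights from observed human actions; step 2 computes a sparse weight change that makes the estimated human policy better match the AI's choices on the same states; step 3 reads nonzero changes as "value X more/less". The feature names (`clue_value`, `play_safety`, `discard_risk`), the toy decisions, and all hyperparameters are hypothetical.

```python
import math

# Hypothetical action features; the real system's inputs are not described.
FEATURES = ["clue_value", "play_safety", "discard_risk"]

# Hypothetical toy decisions: each state lists candidate actions as feature vectors.
STATES = [
    [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0]],  # candidates differ only in play_safety
    [[0.0, 0.0, 1.0], [0.0, 1.0, 1.0]],  # candidates differ only in play_safety
    [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]],  # candidates differ only in clue_value
    [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]],  # clue_value vs. discard_risk
]
HUMAN_CHOICES = [0, 0, 0, 0]  # hypothetical human: passes up the safe play
AI_CHOICES = [1, 1, 0, 0]     # hypothetical stronger AI: plays safe, otherwise agrees


def action_probs(w, candidates):
    """Softmax policy: P(a) is proportional to exp(w . phi(a))."""
    scores = [sum(wi * xi for wi, xi in zip(w, phi)) for phi in candidates]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def nll_grad(w, data):
    """Gradient of the mean negative log-likelihood of the chosen actions."""
    grad = [0.0] * len(w)
    for candidates, chosen in data:
        probs = action_probs(w, candidates)
        for j in range(len(w)):
            expected = sum(p * phi[j] for p, phi in zip(probs, candidates))
            grad[j] += expected - candidates[chosen][j]
    return [g / len(data) for g in grad]


def fit_human_weights(data, steps=2000, lr=0.5, l2=0.01):
    """Step 1: estimate the human strategy from observed actions (L2-regularized MLE)."""
    w = [0.0] * len(data[0][0][0])
    for _ in range(steps):
        g = nll_grad(w, data)
        w = [wi - lr * (gi + l2 * wi) for wi, gi in zip(w, g)]
    return w


def soft_threshold(x, t):
    """Proximal operator of t*|x|: shrinks toward zero, exactly zero when |x| <= t."""
    mag = abs(x) - t
    return math.copysign(mag, x) if mag > 0 else 0.0


def sparse_weight_change(w_human, ai_data, lam=0.05, steps=2000, lr=0.5):
    """Step 2: ISTA on NLL_AI(w_human + delta) + lam * ||delta||_1."""
    delta = [0.0] * len(w_human)
    for _ in range(steps):
        w = [a + b for a, b in zip(w_human, delta)]
        g = nll_grad(w, ai_data)
        delta = [soft_threshold(d - lr * gi, lr * lam) for d, gi in zip(delta, g)]
    return delta


def describe(delta, names, tol=1e-6):
    """Step 3: read the nonzero weight changes as advice."""
    advice = []
    for name, d in zip(names, delta):
        if d > tol:
            advice.append(f"value {name} more")
        elif d < -tol:
            advice.append(f"value {name} less")
    return advice


def demo():
    w_human = fit_human_weights(list(zip(STATES, HUMAN_CHOICES)))
    delta = sparse_weight_change(w_human, list(zip(STATES, AI_CHOICES)))
    return w_human, delta, describe(delta, FEATURES)


if __name__ == "__main__":
    w_human, delta, advice = demo()
    print("estimated human weights:", [round(x, 2) for x in w_human])
    print("weight change:", [round(x, 2) for x in delta])
    print("advice:", advice)
```

On this toy data the estimated human weight on `play_safety` comes out negative (roughly -2.8), and the only recommendation is "value play_safety more": the small adjustments that the AI data would suggest for `clue_value` and `discard_risk` fall under the L1 threshold and are set exactly to zero, which is what keeps the advice down to a few terms. With an actual neural network, the same gradient would come from automatic differentiation rather than the hand-derived softmax gradient used here.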

翻译:我们根据神经网络的“指令”概念,提出了一个解释性AI(XAI)的新办法。在这个案例研究中,我们展示了超人类神经网络如何可以指导人类受训者,作为XAI传统方法的替代。具体地说,AI研究人类行动,计算人类战略的变异,从而提高绩效。JHU/APL开发的Hanabi合作卡游戏的AI玩家的实验表明,这种技术在解释性方面作出了独特的贡献,同时改善了人类的绩效。指示性AI的一个重点领域是人的实际战略和他们声称使用的战略之间可能出现的重大差异。这种不准确的自我评估为XAI带来了障碍,因为对AI战略的解释可能不是由人类接受者正确理解或执行的。我们已经制定并测试了通过观察人类行动来评估人类战略的新颖的、具有教益的AI方法。通过神经网络,可以直接计算改进人类战略所需重量的变化,以便更成功地模仿AI。根据限制(例如,弹性)和他们宣称使用的战略,这些重量的调整能力变化可以更精确地解释为“B”的数值,可以更精确地解释,从人类的A值到AI。