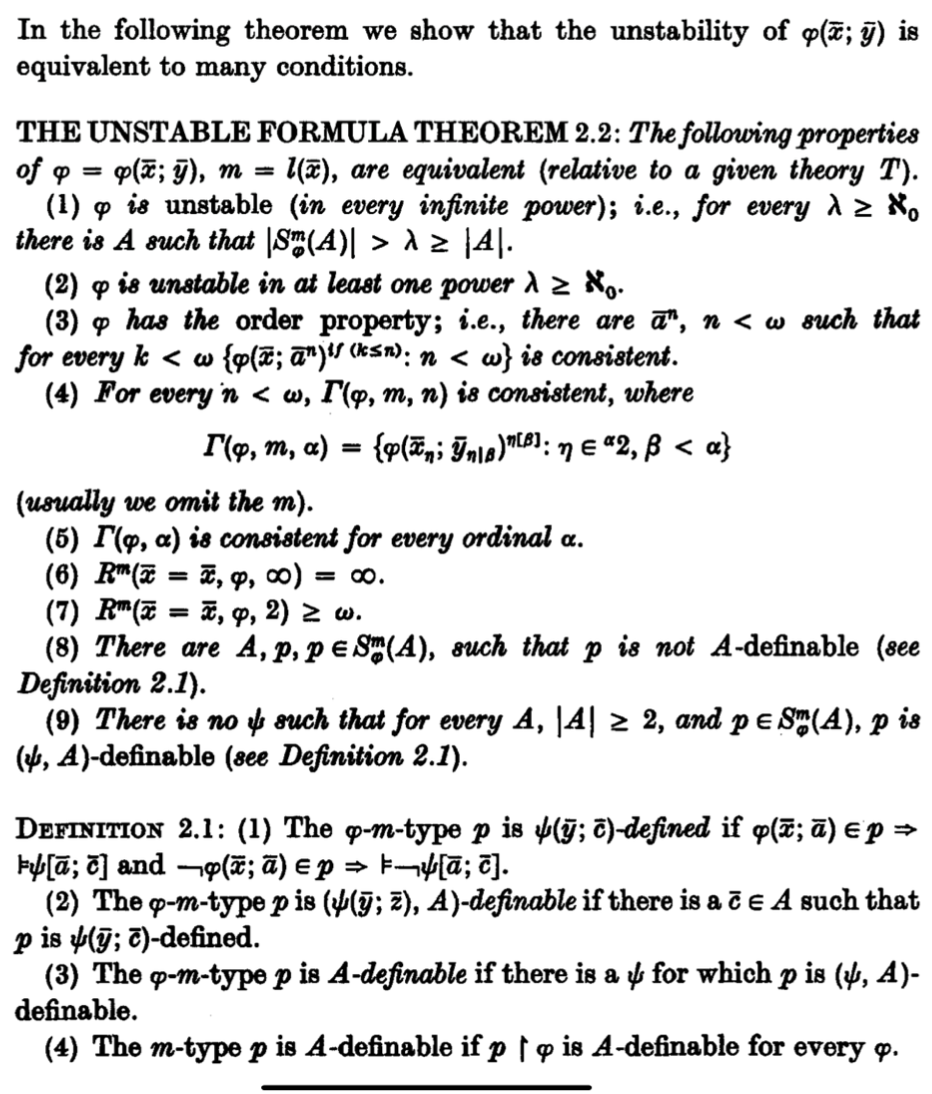

We first prove that Littlestone classes, those which model theorists call stable, characterize learnability in a new statistical model: a learner in this new setting outputs the same hypothesis, up to measure zero, with probability one, after a uniformly bounded number of revisions. This fills a certain gap in the literature, and sets the stage for an approximation theorem characterizing Littlestone classes in terms of a range of learning models, by analogy to definability of types in model theory. We then give a complete analogue of Shelah's celebrated (and perhaps a priori untranslatable) Unstable Formula Theorem in the learning setting, with algorithmic arguments taking the place of the infinite.

翻译:我们首先证明Littlestone 类,即那些模拟理论家称之为稳定的类,在新的统计模型中具有可学习性的特点:在这个新设置的产出中,一个学习者在同一个假设中,在统一界限的修改数量之后,用概率1来测量零,然后是统一界限的修改数量。这填补了文献中的某些空白,并且为以一系列学习模式来描述Littlestone类的近似理论创造了舞台,比喻模型理论中的可定义性。然后我们给Shelah所庆祝的(或许是一个先验的不可翻译的)不易变公式理论提供了一个完整的类比,在学习的设置中,以算法论为无限的替代。