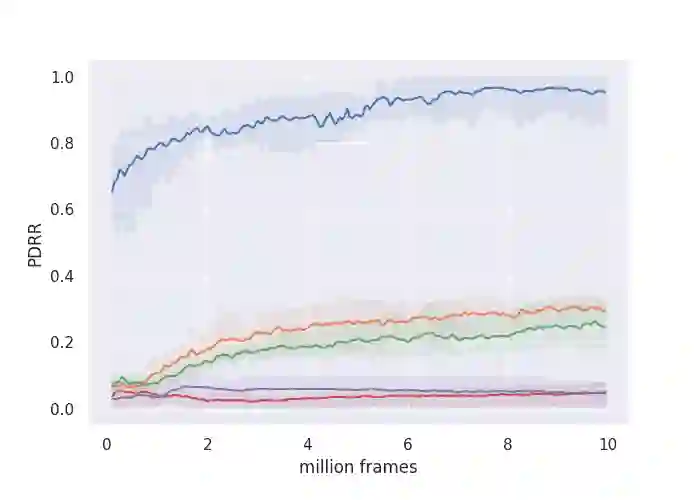

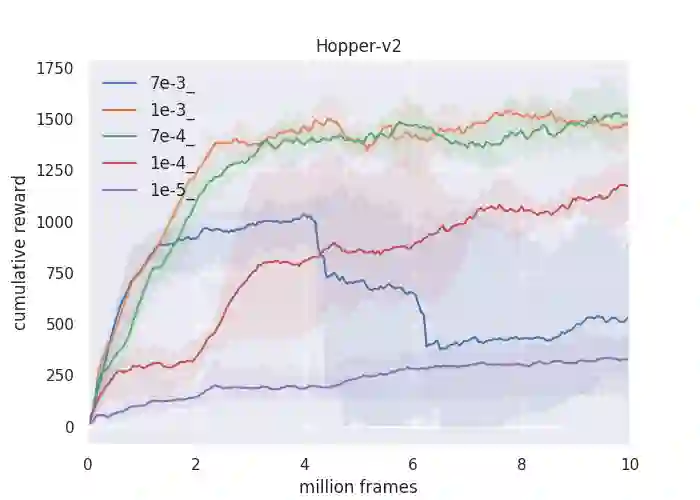

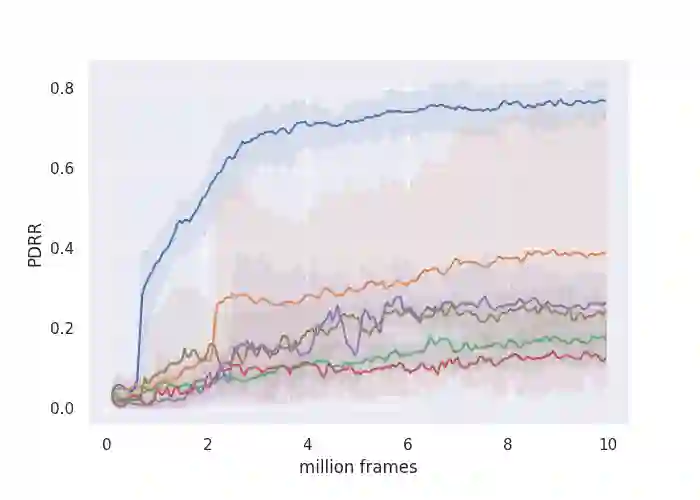

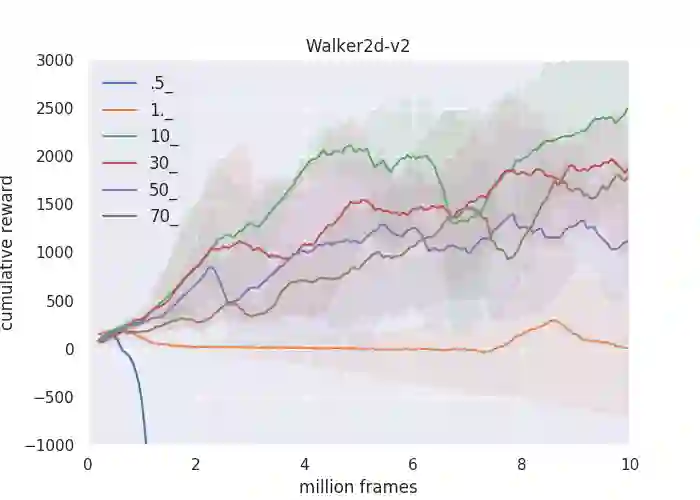

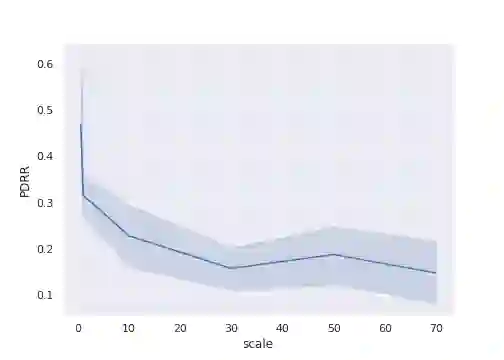

This work presents a thorough study of how reward scaling affects the performance of deep reinforcement learning agents. In particular, we aim to answer the question: how does reward scaling affect non-saturating ReLU networks in RL? This question matters because ReLU is one of the most effective activation functions for deep learning models. We also propose an Adaptive Network Scaling framework that finds a suitable reward scale during learning to improve performance. We conduct empirical studies to validate the proposed framework.
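
The snippet below is a minimal sketch of two standard facts behind the question. It is not the Adaptive Network Scaling framework, whose details are not given here. First, multiplying every reward by a constant c > 0 multiplies the discounted return by c, so it also multiplies the value targets a network must fit. Second, ReLU is non-saturating and positively homogeneous, meaning relu(c·x) = c·relu(x), whereas a saturating unit such as tanh is bounded by 1. The helper names (`discounted_return`, `scale_rewards`) and the example rewards, discount, and scale are hypothetical choices made for illustration.

```python
# Illustration only (not the paper's method): how a constant reward scale c
# propagates through discounted returns, and how ReLU vs. tanh respond to it.
import math
from typing import List


def discounted_return(rewards: List[float], gamma: float) -> float:
    """Return G = sum_t gamma**t * r_t for a finite reward sequence."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def scale_rewards(rewards: List[float], c: float) -> List[float]:
    """Hypothetical helper: multiply every reward by a constant c > 0."""
    return [c * r for r in rewards]


def relu(x: float) -> float:
    """Rectified linear unit: unbounded above, so it does not saturate."""
    return max(0.0, x)


if __name__ == "__main__":
    rewards = [1.0, 0.0, 2.0, -1.0]  # hypothetical episode
    gamma = 0.9                      # hypothetical discount factor
    c = 10.0                         # hypothetical reward scale

    g = discounted_return(rewards, gamma)
    g_scaled = discounted_return(scale_rewards(rewards, c), gamma)
    # Scaling every reward by c scales the return (and the value target) by c.
    print(g, g_scaled, math.isclose(g_scaled, c * g))

    # ReLU is positively homogeneous: relu(c * x) == c * relu(x) for c > 0.
    x = 0.7
    print(math.isclose(relu(c * x), c * relu(x)))

    # tanh saturates: tanh(c * x) stays below 1, far from c * tanh(x).
    print(math.tanh(c * x), c * math.tanh(x))
```

Because ReLU's output grows linearly with its input, the magnitude of the targets (set by the reward scale) directly shapes the weights and gradients a ReLU network needs, which is one reason the choice of reward scale can matter for such networks.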
