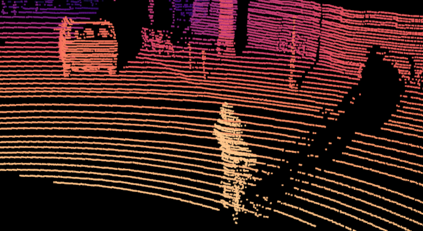

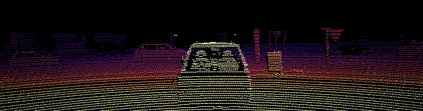

Depth perception is pivotal in many fields, such as robotics and autonomous driving. Consequently, depth sensors such as LiDARs have rapidly spread across many applications. The 3D point clouds generated by these sensors must often be coupled with an RGB camera to understand the framed scene semantically. Usually, the point cloud is projected onto the camera image plane, yielding a sparse depth map. Unfortunately, this process, coupled with the intrinsic issues affecting all depth sensors, introduces noise and gross outliers into the final output. To address this issue explicitly, in this paper we propose an effective unsupervised framework that learns to estimate the confidence of the LiDAR sparse depth map, thus allowing the outliers to be filtered out. Experimental results on the KITTI dataset highlight that our framework excels at this task. Moreover, we demonstrate how this achievement can improve a wide range of tasks.
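To make the projection and filtering steps concrete, the following is a minimal sketch and not the framework proposed in the paper. It assumes an ideal pinhole camera, points already expressed in the camera frame, and a per-pixel confidence that is simply supplied by hand; the function names, the intrinsics `fx`, `fy`, `cx`, `cy`, the nearest-point rule for pixel collisions, and the threshold are all illustrative assumptions.

```python
def project_to_sparse_depth(points, fx, fy, cx, cy, width, height):
    """Project 3D points (camera frame) onto the image plane.

    Returns a dict mapping (row, col) -> depth. Pixels that no point
    falls on are simply absent, which is what makes the map sparse.
    """
    depth = {}
    for x, y, z in points:
        if z <= 0:
            # Points behind the camera cannot be projected.
            continue
        # Pinhole projection (assumed model, no lens distortion).
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            # Assumption: when several points hit one pixel, keep the nearest.
            if (v, u) not in depth or z < depth[(v, u)]:
                depth[(v, u)] = z
    return depth


def filter_by_confidence(depth, confidence, threshold=0.5):
    """Keep only depth values whose confidence reaches the threshold.

    `confidence` is a hypothetical dict (row, col) -> value in [0, 1];
    in the paper it would come from the learned model, here it is a stand-in.
    """
    return {px: z for px, z in depth.items()
            if confidence.get(px, 0.0) >= threshold}


points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.0, 5.0),
          (0.0, 0.0, -1.0), (100.0, 0.0, 1.0)]
sparse = project_to_sparse_depth(points, fx=100.0, fy=100.0,
                                 cx=50.0, cy=40.0, width=100, height=80)
kept = filter_by_confidence(sparse, {(40, 50): 0.9, (40, 60): 0.2})
```

In this toy input, two points land on the same pixel and one falls outside the image, so only two pixels of the 100x80 map carry a depth value; the confidence threshold then discards the one marked as unreliable.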
