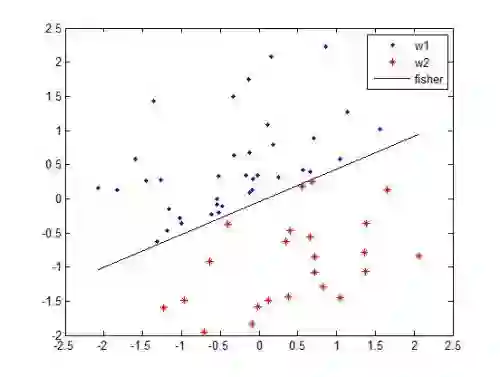

More data is expected to help us generalize on a task. However, real datasets can contain out-of-distribution (OOD) data, which may take the form of heterogeneity, such as intra-class variability, or of temporal shifts and concept drift. We demonstrate a counter-intuitive phenomenon in such problems: the generalization error on the task can be a non-monotonic function of the number of OOD samples. A small number of OOD samples can improve generalization, but once the number of OOD samples exceeds a threshold, the generalization error can deteriorate. We also show that if we know which samples are OOD, then using a weighted objective between the target and OOD samples ensures that the generalization error decreases monotonically. We demonstrate and analyze this phenomenon using linear classifiers on synthetic datasets and medium-sized neural networks on vision benchmarks such as MNIST, CIFAR-10, CINIC-10, PACS, and DomainNet, and we observe the effect that data augmentation, hyperparameter optimization, and pre-training have on this behavior.
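To make the "weighted objective between the target and OOD samples" concrete, here is a minimal sketch. It assumes a binary logistic-regression model and a single mixing weight `alpha` applied as a convex combination of the average target loss and the average OOD loss; the function names (`logistic_loss`, `weighted_objective`, `pooled_alpha`) and this exact form are illustrative assumptions, not necessarily the formulation used in the paper.

```python
import math
from typing import List, Sequence, Tuple

# A sample is (feature vector, binary label in {0, 1}).
Sample = Tuple[Sequence[float], int]


def logistic_loss(w: Sequence[float], b: float, x: Sequence[float], y: int) -> float:
    """Numerically stable binary cross-entropy for a linear classifier."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    # The loss equals log(1 + exp(-s)) with s = z for y == 1 and s = -z for y == 0.
    s = z if y == 1 else -z
    if s >= 0:
        return math.log1p(math.exp(-s))
    # Rewrite to avoid overflow when s is very negative.
    return -s + math.log1p(math.exp(s))


def average_loss(w: Sequence[float], b: float, data: List[Sample]) -> float:
    return sum(logistic_loss(w, b, x, y) for x, y in data) / len(data)


def weighted_objective(w: Sequence[float], b: float, target: List[Sample],
                       ood: List[Sample], alpha: float) -> float:
    """Assumed form: alpha * (mean target loss) + (1 - alpha) * (mean OOD loss).

    alpha is a hypothetical hyperparameter in [0, 1]; alpha = 1 ignores OOD data.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    target_risk = average_loss(w, b, target)
    if not ood:
        return target_risk
    return alpha * target_risk + (1.0 - alpha) * average_loss(w, b, ood)


def pooled_alpha(n_target: int, n_ood: int) -> float:
    """The alpha at which the weighted objective equals plain pooled averaging."""
    return n_target / (n_target + n_ood)
```

The `pooled_alpha` helper illustrates why the weighting matters: averaging the loss uniformly over all n target and m OOD samples is the same as using alpha = n / (n + m), so as m grows the OOD samples dominate the objective regardless of how helpful they are. Treating alpha as a separate hyperparameter (for example, tuned on held-out target data) decouples the influence of the OOD data from its sample count.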
