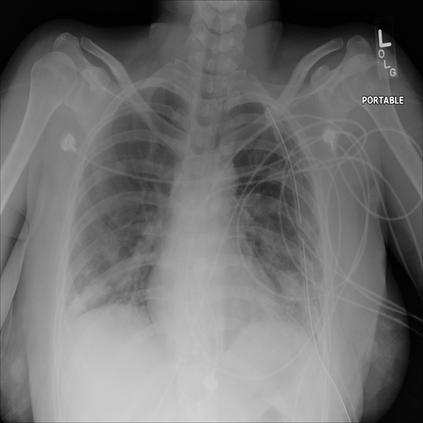

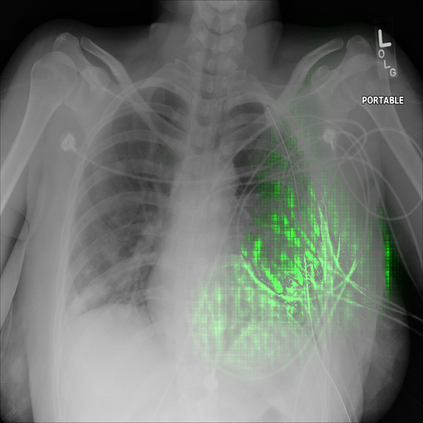

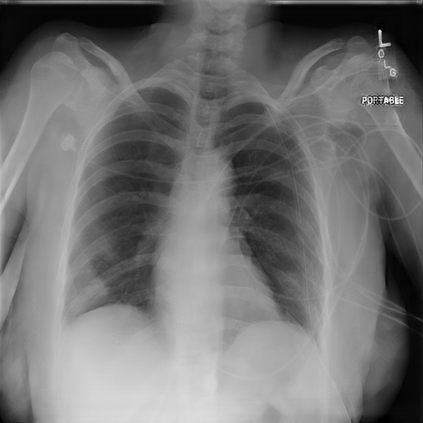

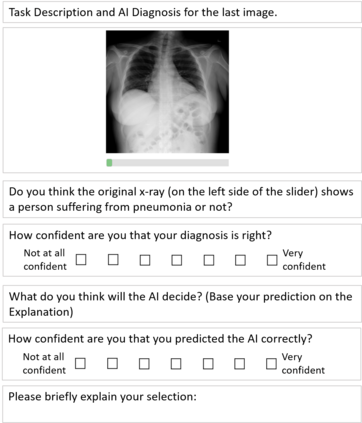

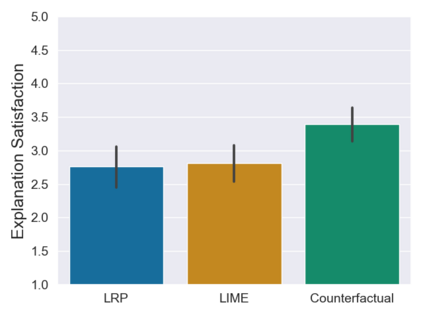

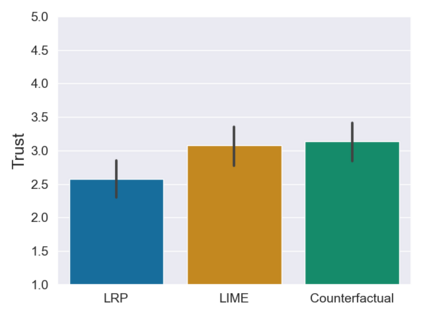

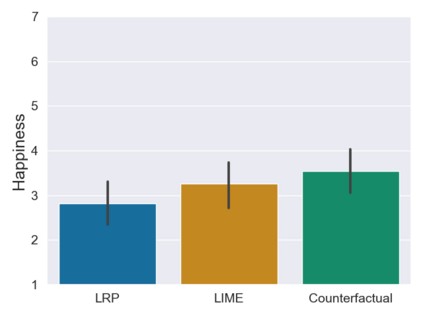

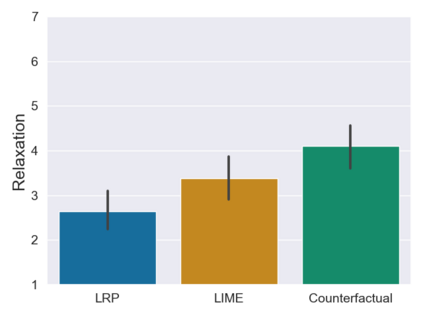

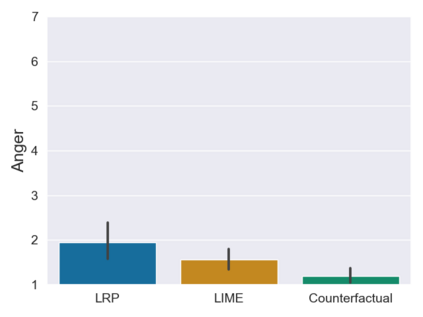

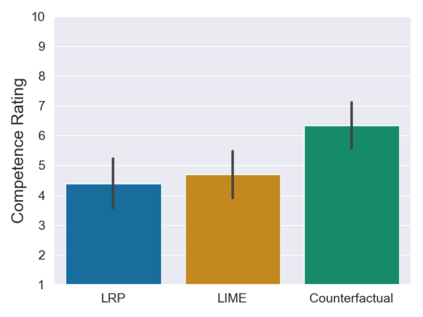

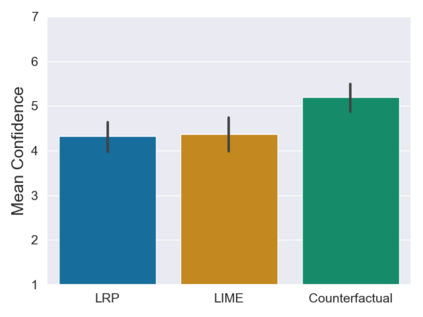

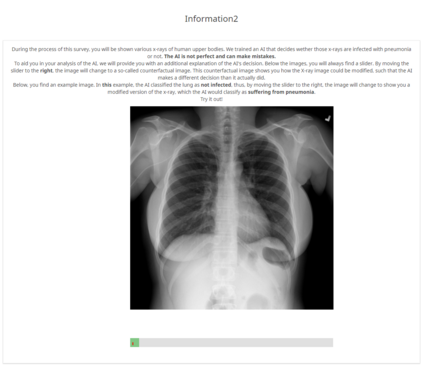

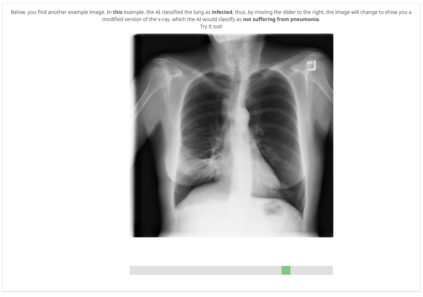

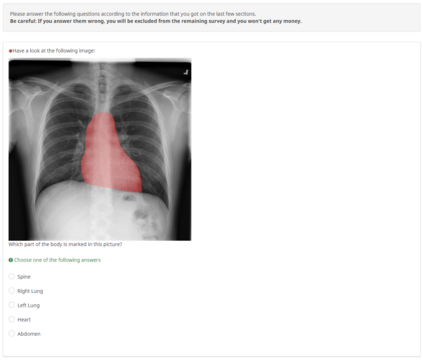

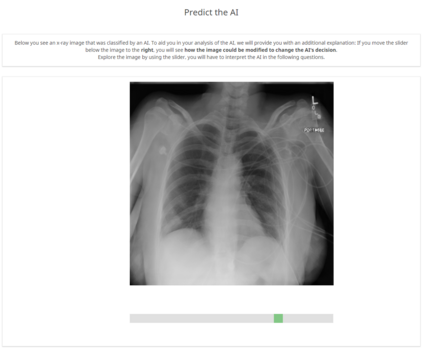

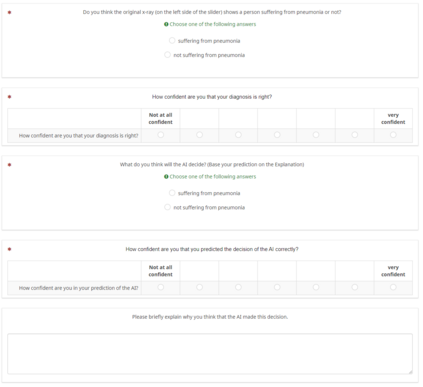

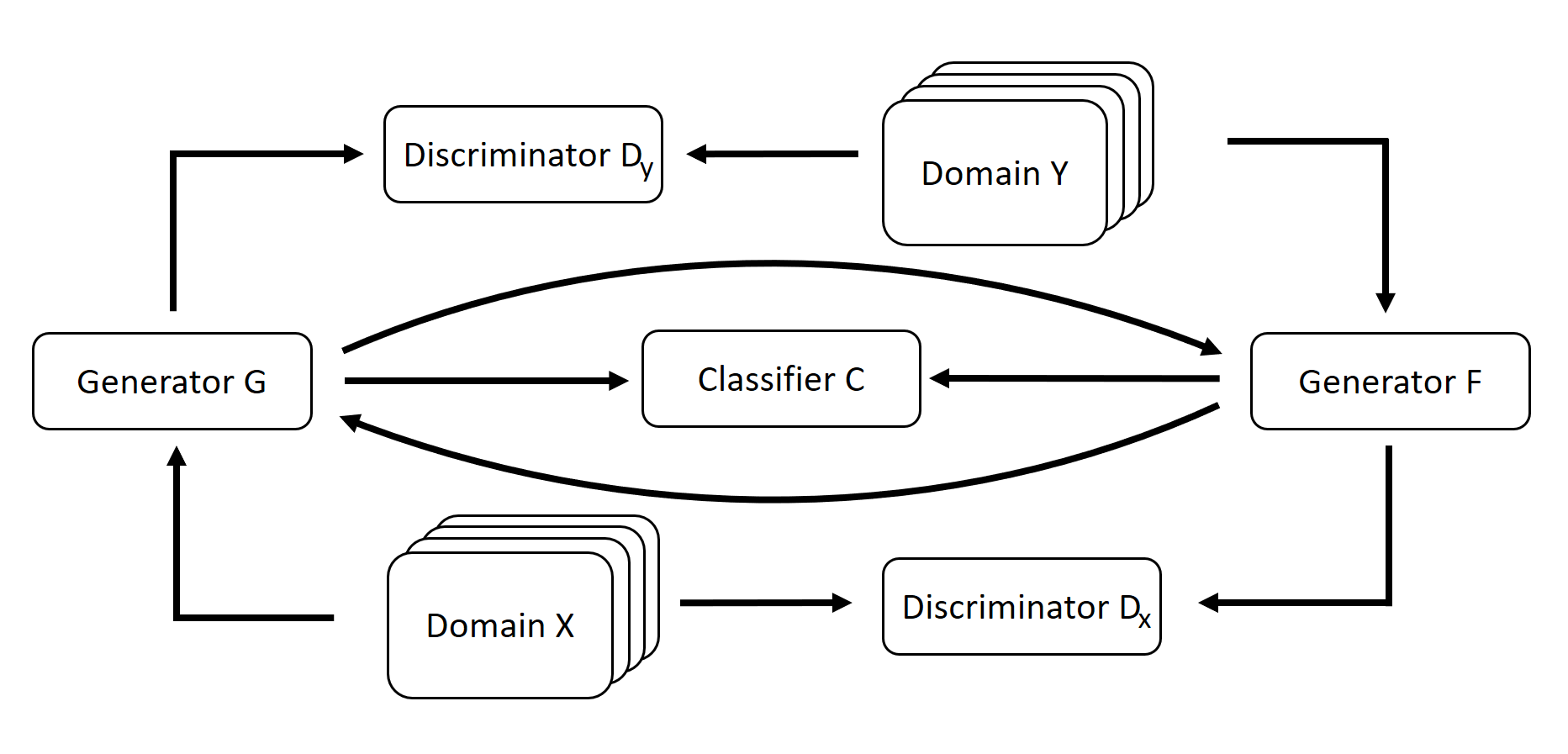

With the ongoing rise of machine learning, methods for explaining the decisions of artificial intelligence systems are becoming increasingly important. For image classification tasks in particular, many state-of-the-art explanation tools rely on visually highlighting important areas of the input data. In contrast, counterfactual explanation systems try to enable counterfactual reasoning by modifying the input image such that the classifier would have made a different prediction. In doing so, they equip their users with a completely different kind of explanatory information. However, methods for generating realistic counterfactual explanations for image classifiers are still rare. Especially in medical contexts, where relevant information often consists of textural and structural information, high-quality counterfactual images have the potential to give meaningful insights into decision processes. In this work, we present GANterfactual, an approach for generating such counterfactual image explanations based on adversarial image-to-image translation techniques. Additionally, we conduct a user study to evaluate our approach in an exemplary medical use case. Our results show that, in the chosen medical use case, counterfactual explanations lead to significantly better results regarding mental models, explanation satisfaction, trust, emotions, and self-efficacy than two state-of-the-art systems that work with saliency maps, namely LIME and LRP.
