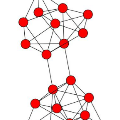

Network embedding (NE) can generate succinct node representations for massive-scale networks and enable the direct application of common machine learning methods to the network structure. Various NE algorithms have been proposed and used in a number of applications, such as node classification and link prediction. NE algorithms typically contain hyperparameters that are key to their performance, but tuning these hyperparameters can be time-consuming, so it is desirable to complete the tuning within a specified length of time. Although AutoML methods have been applied to tuning the hyperparameters of NE algorithms, the problem of how to tune hyperparameters within a given period of time has not been studied for NE algorithms before. In this paper, we propose JITuNE, a just-in-time hyperparameter tuning framework for NE algorithms. JITuNE enables time-constrained hyperparameter tuning for NE algorithms by tuning over hierarchical network synopses and transferring the knowledge obtained on the synopses to the whole network. The hierarchical generation of synopses, together with a time-constrained tuning method, makes it possible to bound the overall tuning time. Extensive experiments demonstrate that JITuNE can significantly improve the performance of NE algorithms, outperforming state-of-the-art methods within the same number of algorithm runs.
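The abstract does not spell out JITuNE's synopsis construction or its tuning algorithm, so the following is only a minimal Python sketch of the general idea of time-budgeted tuning over a hierarchy of network synopses, under explicit assumptions. Nested random node samples (`build_synopses`) stand in for the paper's hierarchical synopses, random search with successive pruning stands in for its time-constrained tuning method, and "knowledge transfer" is simplified to carrying the best-scoring configurations from a smaller synopsis up to the next larger one. `evaluate` is a hypothetical user-supplied callback that would train an NE model on a graph with the given hyperparameters and return a downstream score such as node-classification accuracy. All function and parameter names here are illustrative, not taken from the paper.

```python
import random
import time
from typing import Callable, Dict, List, Optional, Sequence, Set, Tuple

Graph = Dict[int, Set[int]]                  # adjacency sets: node -> neighbours
Params = Dict[str, int]                      # one hyperparameter configuration
Evaluate = Callable[[Graph, Params], float]  # hypothetical: train NE on graph, return a score


def induced_subgraph(graph: Graph, nodes: Set[int]) -> Graph:
    """Keep only the given nodes and the edges among them."""
    return {u: graph[u] & nodes for u in nodes}


def build_synopses(graph: Graph, fractions: Sequence[float],
                   rng: random.Random) -> List[Graph]:
    """Stand-in for synopsis generation (assumption, not JITuNE's method):
    nested random node samples, smallest first, each contained in the next."""
    order = list(graph)
    rng.shuffle(order)
    return [induced_subgraph(graph, set(order[:max(1, int(f * len(order)))]))
            for f in sorted(fractions)]


def sample_candidates(space: Dict[str, List[int]], n: int,
                      rng: random.Random) -> List[Params]:
    """Draw n random configurations from a discrete search space."""
    return [{k: rng.choice(v) for k, v in space.items()} for _ in range(n)]


def tune(graph: Graph, space: Dict[str, List[int]], evaluate: Evaluate,
         budget_s: float, fractions: Sequence[float] = (0.05, 0.2),
         n_candidates: int = 16, keep: float = 0.5,
         seed: int = 0) -> Tuple[Optional[Params], float]:
    """Time-budgeted search: score many candidates on small synopses, carry the
    best fraction `keep` up to the next larger level, finish on the whole graph."""
    rng = random.Random(seed)
    deadline = time.monotonic() + budget_s
    candidates = sample_candidates(space, n_candidates, rng)
    levels = build_synopses(graph, fractions, rng) + [graph]  # whole network last
    best, best_score = None, float("-inf")
    for level in levels:
        scored = []
        for params in candidates:
            if time.monotonic() >= deadline:
                break  # checked between runs, so one long run can still overshoot
            scored.append((evaluate(level, params), params))
        finished = len(scored) == len(candidates)
        # Commit a level only if it finished, unless nothing has been committed yet.
        if scored and (finished or best is None):
            scored.sort(key=lambda sp: sp[0], reverse=True)
            best_score, best = scored[0]
        if not finished:
            break  # out of time: return the best result committed so far
        candidates = [p for _, p in scored[:max(1, int(len(scored) * keep))]]
    # best_score is measured on the largest level that was committed.
    return best, best_score


if __name__ == "__main__":
    ring = {i: {(i - 1) % 200, (i + 1) % 200} for i in range(200)}
    space = {"dim": [16, 32, 64, 128], "walk_length": [10, 20, 40, 80]}
    # Toy objective that ignores the graph; a real one would train and score an embedding.
    toy = lambda g, p: -abs(p["dim"] - 64) - abs(p["walk_length"] - 40)
    print(tune(ring, space, toy, budget_s=1.0))
```

Halving the candidate pool at each level means most runs happen on the cheap synopses and only a few configurations are ever evaluated on the full network, which is what lets the total cost stay within the budget. A real implementation would also need synopses that preserve the structural properties the NE algorithm depends on, which random node sampling does not guarantee.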
