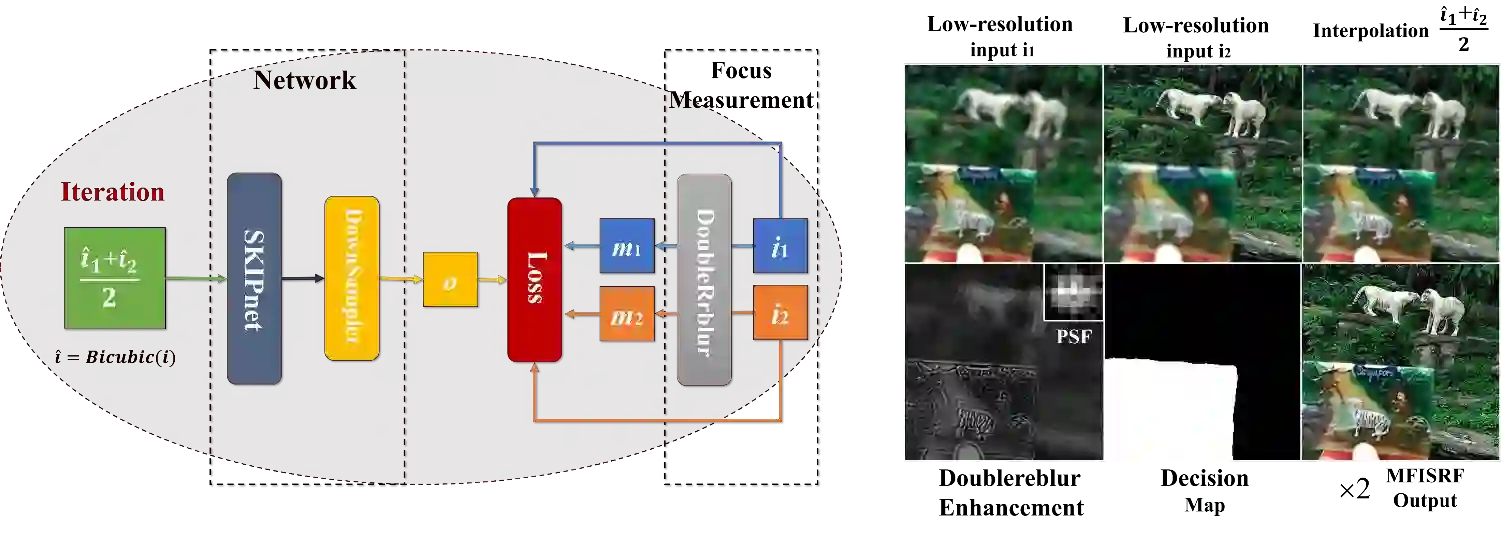

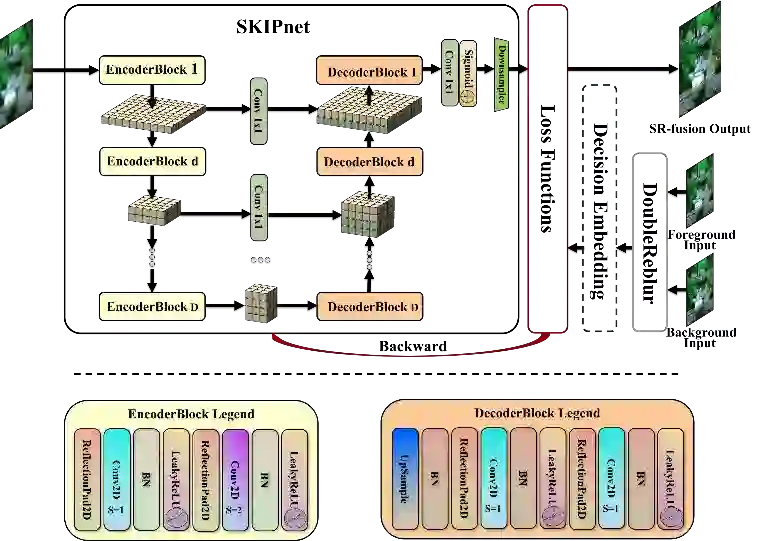

Multi-focus image fusion (MFIF) and super-resolution (SR) are both inverse problems of the imaging model: MFIF aims to recover an all-in-focus 2D mapping of the target, and SR a high-resolution one. Although various MFIF and SR methods have been designed, almost all of them treat MFIF and SR separately. This paper unifies the MFIF and SR problems from a physical perspective as multi-focus image super-resolution fusion (MFISRF), and we propose a novel, unified, dataset-free unsupervised framework named deep fusion prior (DFP), based on deep image prior (DIP), to address MFISRF with a single model. Experiments show that the proposed DFP approaches or even outperforms combinations of state-of-the-art MFIF and SR methods. To the best of our knowledge, this is the first dataset-free unsupervised method to perform multi-focus fusion and super-resolution simultaneously. Additionally, DFP is a general framework, so its networks and focus measurement tactics can be continuously updated to further improve MFISRF performance. The DFP code is open source and available at http://github.com/GuYuanjie/DeepFusionPrior.
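For readers unfamiliar with the terms, the following is a minimal sketch of the kind of two-stage pipeline that MFISRF is meant to replace: a per-pixel focus measure picks the sharper of two source images, and the fused result is then upsampled in a separate step. It is not the DFP method. The Laplacian-magnitude focus measure, the nearest-neighbour upsampling, and all function names (`laplacian_energy`, `fuse`, `upsample_nearest`) are assumptions chosen for illustration only.

```python
# Illustrative two-stage baseline (NOT DFP): focus-measure fusion, then upsampling.
# Images are plain lists of rows of floats; all names here are hypothetical.

def laplacian_energy(img):
    """Absolute 4-neighbour Laplacian at each pixel, replicating edge pixels."""
    h, w = len(img), len(img[0])

    def px(r, c):
        # Clamp coordinates so that out-of-range neighbours repeat the border.
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    return [
        [abs(px(r - 1, c) + px(r + 1, c) + px(r, c - 1) + px(r, c + 1) - 4 * px(r, c))
         for c in range(w)]
        for r in range(h)
    ]


def fuse(img_a, img_b):
    """Per pixel, keep the value from the source with the larger focus measure."""
    fa, fb = laplacian_energy(img_a), laplacian_energy(img_b)
    return [
        [a if ea >= eb else b for a, b, ea, eb in zip(ra, rb, rea, reb)]
        for ra, rb, rea, reb in zip(img_a, img_b, fa, fb)
    ]


def upsample_nearest(img, factor):
    """Nearest-neighbour upsampling by an integer factor."""
    return [[v for v in row for _ in range(factor)]
            for row in img for _ in range(factor)]


# Toy scene: a 4x4 checkerboard. Source A is sharp on the left half and flat
# (fully defocused, value 0.5) on the right; source B is the opposite.
sharp = [[float((r + c) % 2) for c in range(4)] for r in range(4)]
img_a = [[sharp[r][c] if c < 2 else 0.5 for c in range(4)] for r in range(4)]
img_b = [[0.5 if c < 2 else sharp[r][c] for c in range(4)] for r in range(4)]

fused = fuse(img_a, img_b)              # stage 1: multi-focus fusion
upscaled = upsample_nearest(fused, 2)   # stage 2: separate super-resolution step
```

In this toy case the fused image recovers the checkerboard exactly, but the two stages are independent, so any error in the fusion step is carried into the upsampling step. The abstract's argument for a unified model addresses that separation.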
