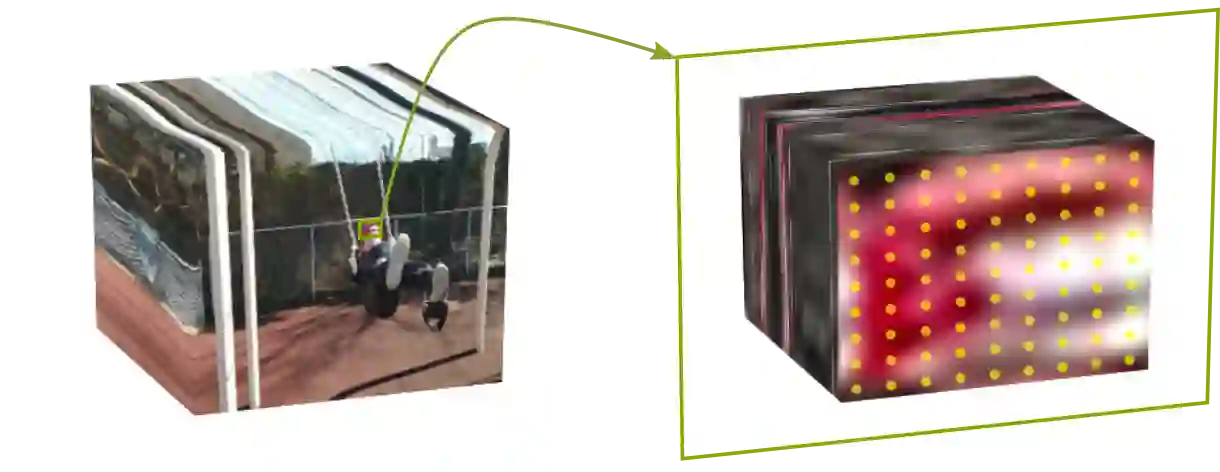

As event-based sensing gains popularity, theoretical understanding is needed to harness this technology's potential. Instead of recording video by capturing frames, event-based cameras have sensors that emit events when their inputs change, thus encoding information in the timing of events. This creates new challenges in establishing reconstruction guarantees and algorithms, but also provides advantages over frame-based video. We use time encoding machines (TEMs) to model event-based sensors: TEMs also encode their inputs by emitting events characterized by their timing, and reconstruction from time encodings is well understood. We consider the case of time encoding bandlimited video and demonstrate a dependence between spatial sensor density and overall spatial and temporal resolution. Such a dependence does not occur in frame-based video, where temporal resolution depends solely on the frame rate and spatial resolution depends solely on the pixel grid. In event-based video, however, this dependence arises naturally and allows oversampling in space to provide better temporal resolution. As such, event-based vision encourages using more sensors that each emit fewer events over time.
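
To make the idea of encoding a signal in event timing concrete, here is a minimal Python sketch of a single sensor. The abstract does not say which TEM model is used, so this assumes an integrate-and-fire TEM: the sensor integrates its input plus a constant bias and emits an event each time the integral reaches a threshold. The function name, the parameters `bias`, `threshold`, and `dt`, and the test signal are all illustrative assumptions, not taken from the paper.

```python
import math


def integrate_and_fire_tem(signal, duration, bias=1.5, threshold=0.05, dt=1e-4):
    """Return event times of an assumed integrate-and-fire time encoder.

    signal    -- callable mapping time (seconds) to input amplitude
    duration  -- length of the encoding window in seconds
    bias      -- constant added to the input; must exceed max |signal|
                 so that the integrand stays positive
    threshold -- integral level at which an event is emitted
    dt        -- step of the simple Riemann-sum integration
    """
    event_times = []
    integral = 0.0
    steps = int(round(duration / dt))
    for k in range(steps):
        t = k * dt
        # Accumulate the biased input over one time step.
        integral += (signal(t) + bias) * dt
        if integral >= threshold:
            # Emit an event and subtract the threshold (reset).
            event_times.append(t + dt)
            integral -= threshold
    return event_times


def example_signal(t):
    # Hypothetical input: a 2 Hz sinusoid with amplitude 1.
    return math.sin(2.0 * math.pi * 2.0 * t)


events = integrate_and_fire_tem(example_signal, duration=1.0)
intervals = [b - a for a, b in zip(events, events[1:])]
print(len(events), min(intervals), max(intervals))
```

Under these assumptions the input amplitude is carried only by the spacing of the events: for an input bounded by `c < bias`, consecutive events are separated by roughly between `threshold / (bias + c)` and `threshold / (bias - c)` seconds, with events arriving faster where the input is larger.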
