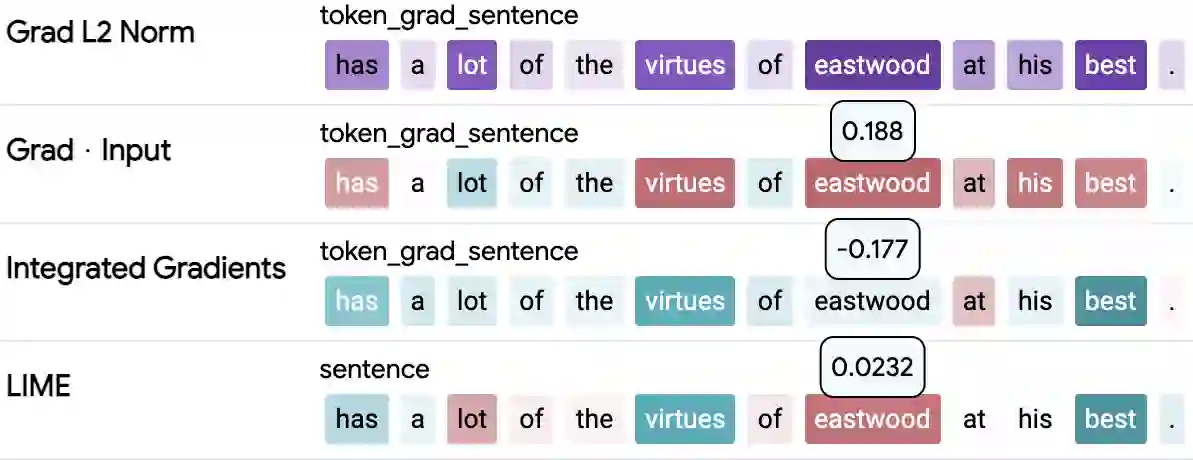

Feature attribution methods, also known as input salience methods, assign an importance score to each input feature. They are abundant, but they may produce surprisingly different results for the same model on the same input. While differences are expected if disparate definitions of importance are assumed, most methods claim to provide faithful attributions and to point at the features most relevant to a model's prediction. Existing work on faithfulness evaluation is not conclusive and does not provide a clear answer as to how different methods should be compared. Focusing on text classification and the model debugging scenario, our main contribution is a protocol for faithfulness evaluation that uses partially synthetic data to obtain ground truth for feature importance ranking. Following the protocol, we conduct an in-depth analysis of four standard salience method classes on a range of datasets and shortcuts for BERT and LSTM models, and demonstrate that some of the most popular method configurations provide poor results even for the simplest shortcuts. We recommend following the protocol for each new task and model combination to find the best method for identifying shortcuts.
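
To make the idea of ground truth from partially synthetic data concrete, the following is a minimal sketch, not the authors' implementation. It assumes a shortcut is a single synthetic token inserted at a known position, and it scores a salience method by precision at k, which is one plausible ranking metric that the abstract does not itself specify. The names `inject_shortcut`, `precision_at_k`, and the token `zeroa` are hypothetical, and the salience scores are placeholders rather than outputs of a real attribution method.

```python
import random


def inject_shortcut(tokens, shortcut_token, rng):
    """Insert a synthetic shortcut token at a random position.

    Returns the new token list and the index of the inserted token,
    which serves as ground truth for the most important feature.
    """
    pos = rng.randrange(len(tokens) + 1)
    return tokens[:pos] + [shortcut_token] + tokens[pos:], pos


def precision_at_k(salience, ground_truth_positions, k=None):
    """Fraction of the k highest-scored positions that are shortcut positions.

    By default k equals the number of ground-truth positions (an assumption).
    """
    truth = set(ground_truth_positions)
    if k is None:
        k = len(truth)
    ranked = sorted(range(len(salience)), key=lambda i: salience[i], reverse=True)
    return sum(1 for i in ranked[:k] if i in truth) / k


if __name__ == "__main__":
    rng = random.Random(0)
    tokens, pos = inject_shortcut("the movie was fine".split(), "zeroa", rng)

    # Placeholder scores standing in for a real salience method's output.
    scores = [0.1] * len(tokens)
    scores[pos] = 0.9

    print(tokens, pos, precision_at_k(scores, [pos]))
```

In an actual evaluation, the model would first be trained on data in which the injected token determines the label, so that the token's position is a justified ground truth for what the model relies on. The scores would then come from the salience method under test.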
