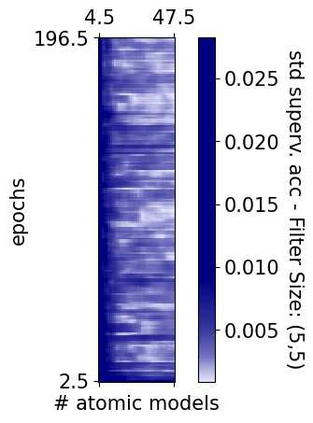

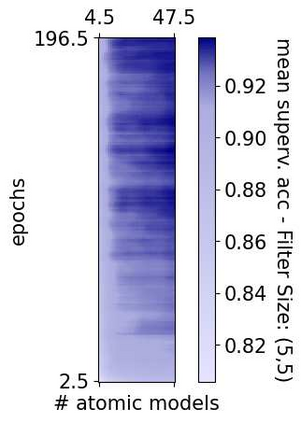

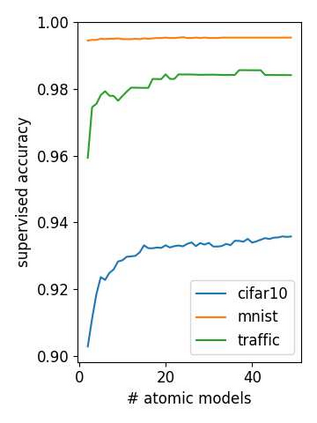

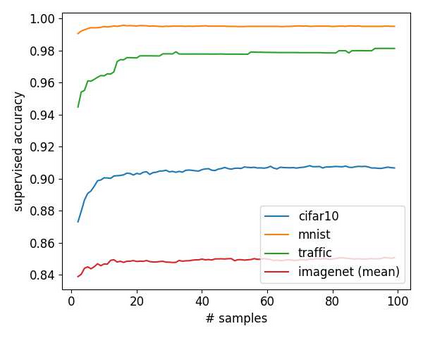

Modern software systems rely on Deep Neural Networks (DNNs) when processing complex, unstructured inputs, such as images, videos, natural language texts, or audio signals. Given the intractably large size of such input spaces, the intrinsic limitations of learning algorithms, and the ambiguity about the expected predictions for some of the inputs, not only is there no guarantee that a DNN's predictions are always correct, but developers must also safely assume a low, though not negligible, error probability. A fail-safe Deep Learning based System (DLS) is one equipped to handle DNN faults by means of a supervisor, capable of recognizing predictions that should not be trusted and that should activate a healing procedure bringing the DLS to a safe state. In this paper, we propose an approach to use DNN uncertainty estimators to implement such a supervisor. We first discuss the advantages and disadvantages of existing approaches to measure uncertainty for DNNs and propose novel metrics for the empirical assessment of supervisors that rely on such approaches. We then describe our publicly available tool UNCERTAINTY-WIZARD, which allows transparent estimation of uncertainty for regular tf.keras DNNs. Lastly, we discuss a large-scale study conducted on four different subjects to empirically validate the approach, reporting the lessons learned as guidance for software engineers who intend to monitor uncertainty for fail-safe execution of DLSs.
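The idea of an uncertainty-based supervisor can be illustrated with a small, framework-free sketch. This is not the UNCERTAINTY-WIZARD API and not necessarily the paper's exact method; it is an illustration under stated assumptions. It assumes a stochastic model that returns a class-probability vector on each call (for instance, a DNN with dropout kept active at prediction time, as in Monte Carlo dropout). It uses the predictive entropy of the averaged prediction as the uncertainty score, and it calibrates the rejection threshold as a quantile of uncertainties observed on nominal data. The names `supervise`, `calibrate_threshold`, the toy models, the nominal uncertainty values, and the 95% acceptance rate are all hypothetical choices.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

# Illustrative type: maps an input to a class-probability vector. Repeated calls
# may differ, e.g. a DNN with dropout left active at inference (MC dropout).
StochasticModel = Callable[[Sequence[float]], List[float]]


def mean_probabilities(samples: List[List[float]]) -> List[float]:
    """Average the class-probability vectors of several forward passes."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def predictive_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a probability vector; higher means more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def calibrate_threshold(nominal_uncertainties: Sequence[float],
                        accepted_fraction: float = 0.95) -> float:
    """Hypothetical calibration: choose the threshold so that roughly
    `accepted_fraction` of nominal (in-distribution) inputs would be trusted."""
    ordered = sorted(nominal_uncertainties)
    index = math.ceil(accepted_fraction * len(ordered)) - 1
    return ordered[max(0, min(len(ordered) - 1, index))]


def supervise(model: StochasticModel, x: Sequence[float], threshold: float,
              num_samples: int = 20) -> Tuple[int, float, bool]:
    """Return (predicted class, uncertainty, trusted?) for a single input."""
    samples = [model(x) for _ in range(num_samples)]
    probs = mean_probabilities(samples)
    uncertainty = predictive_entropy(probs)
    predicted = max(range(len(probs)), key=lambda c: probs[c])
    return predicted, uncertainty, uncertainty <= threshold


if __name__ == "__main__":
    rng = random.Random(0)

    def confident_model(x):
        # Every pass agrees on class 0.
        return [0.9, 0.05, 0.05]

    def erratic_model(x):
        # Each pass favours a random class, so the averaged prediction is flatter.
        probs = [0.1, 0.1, 0.1]
        probs[rng.randrange(3)] = 0.8
        return probs

    # Hypothetical uncertainties measured on nominal validation inputs.
    threshold = calibrate_threshold([0.2, 0.3, 0.35, 0.4, 0.45, 0.5])
    for name, model in [("confident", confident_model), ("erratic", erratic_model)]:
        label, unc, trusted = supervise(model, [0.0], threshold)
        print(name, label, round(unc, 3), "trusted" if trusted else "rejected -> healing")
```

An input whose uncertainty exceeds the threshold is not trusted. In a fail-safe DLS, that is the point at which the healing procedure would take over. Other quantifiers can replace predictive entropy without changing the supervisor's structure. Examples include variation ratio, mutual information, or a deep ensemble instead of dropout sampling.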

翻译:现代软件系统在处理复杂、无结构化的投入(如图像、视频、自然语言文本或音频信号)时依赖深神经网络(DNN)处理复杂、无结构的投入(DNN)。如果这种输入空间的规模非常庞大,学习算法的内在局限性以及某些投入的预期预测模糊不清,不仅不能保证DNN的预测总是正确,而且开发商必须安全地承担低误差概率,尽管不能忽略错误概率。一个基于故障安全的深知识系统(DLS)能够通过一个监督员来处理DNN的错误,能够识别不应信任的预测,并能够启动一个将DLS带到安全状态的愈合程序。在这份文件中,我们提出一种办法,利用DNNN的不确定性估计员来实施这样的监督员。我们首先讨论现有办法的利弊之处和缺点,为依赖这种方法的主管进行经验评估提出新的指标。我们然后描述我们公开可用的工具UNCERTY-WZARD,它能够透明地估计DLS的不确定性,从而将DLS变为常规的DNIS标准。最后,我们讨论对DNS的四级的不确定性进行大规模研究,谁为DNNS级的核查。