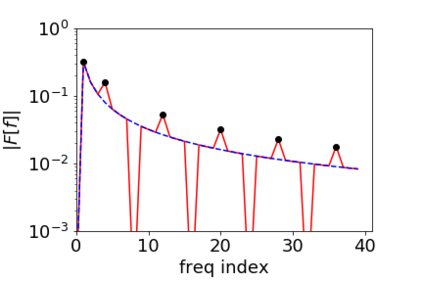

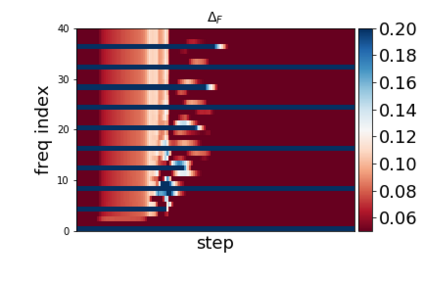

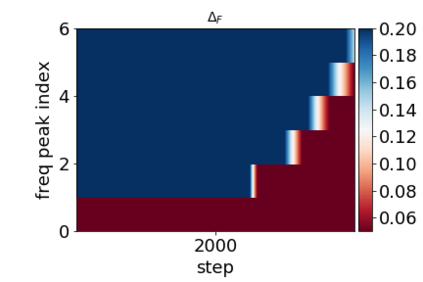

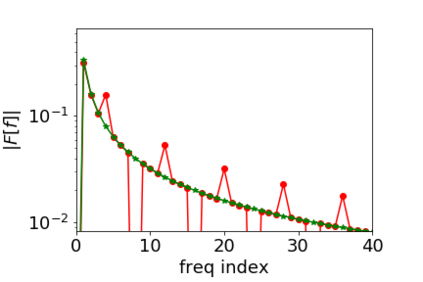

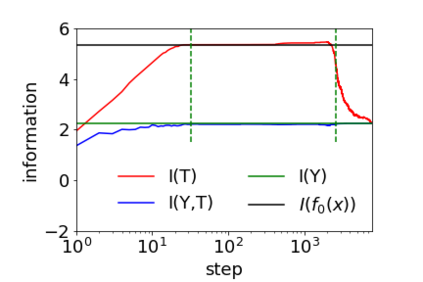

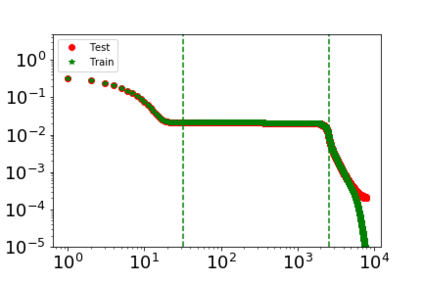

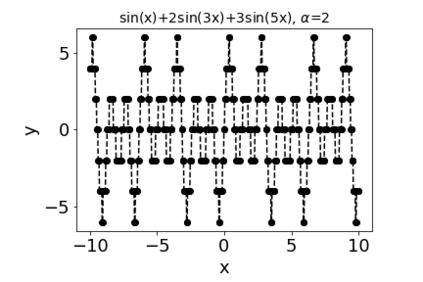

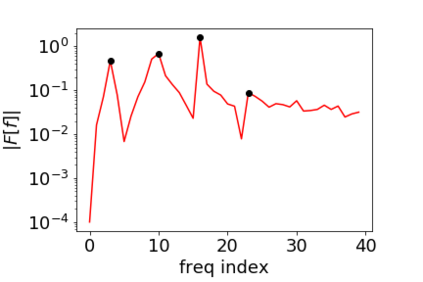

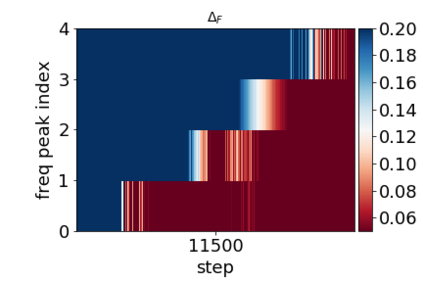

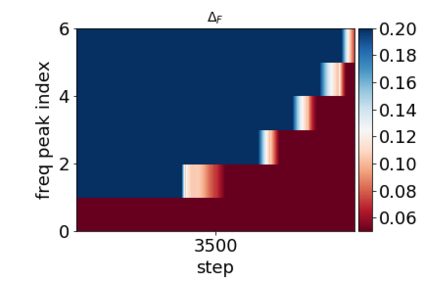

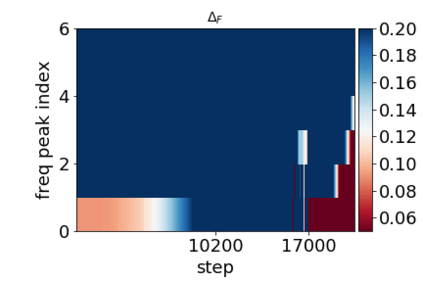

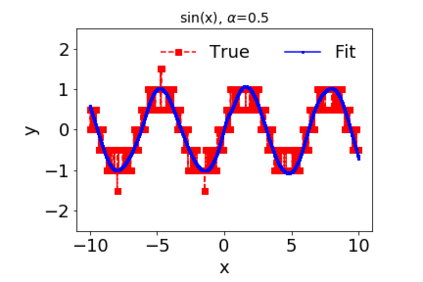

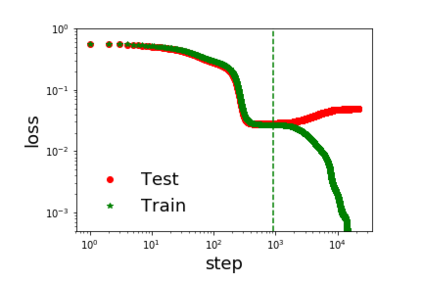

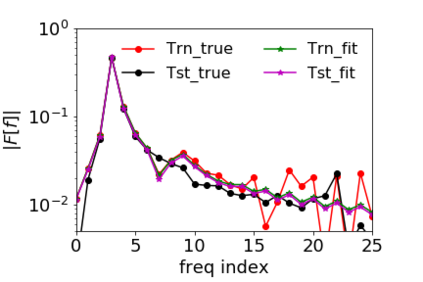

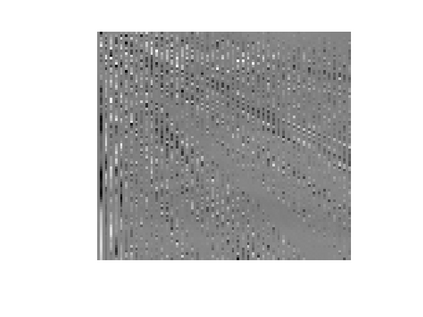

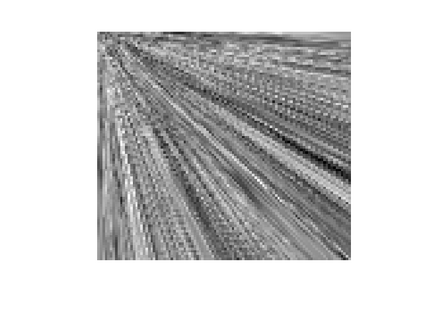

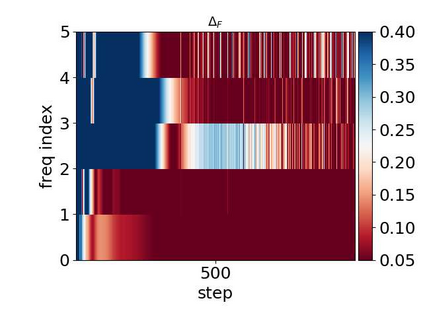

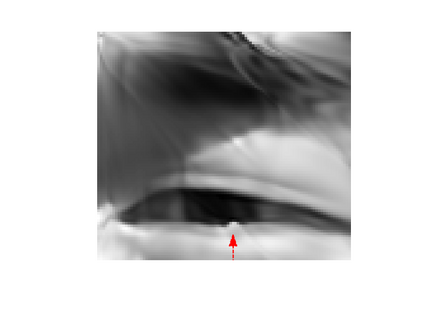

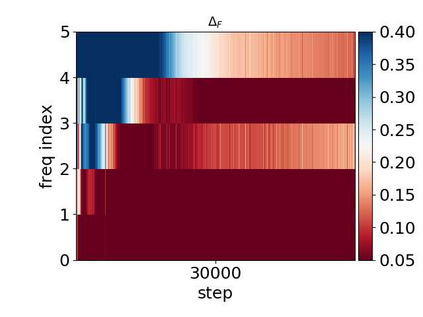

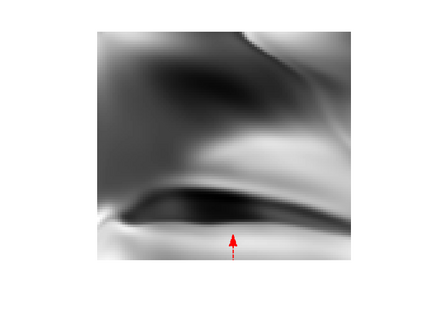

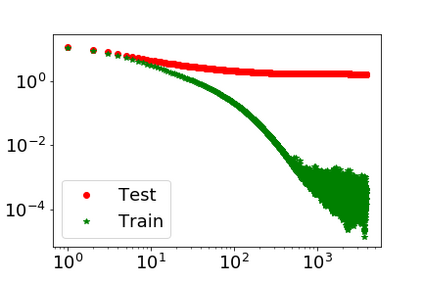

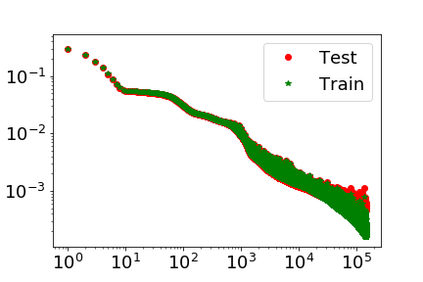

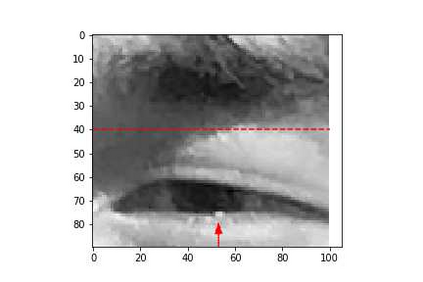

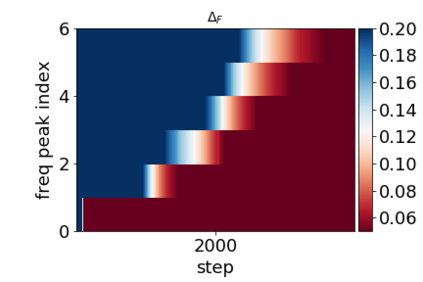

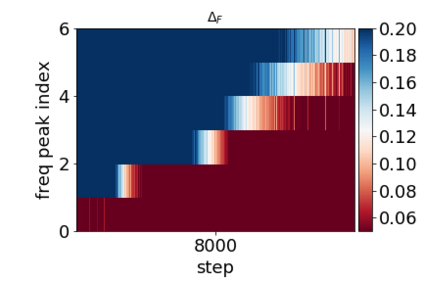

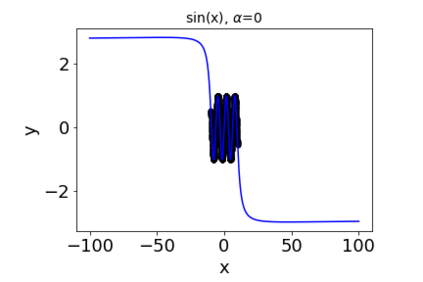

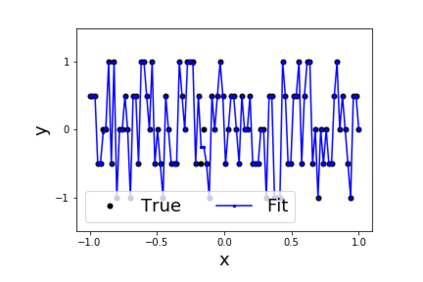

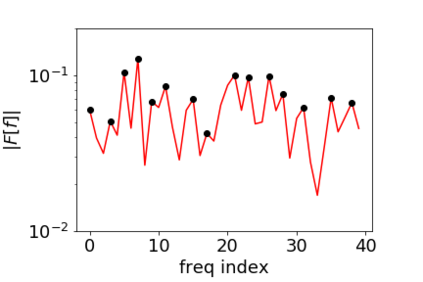

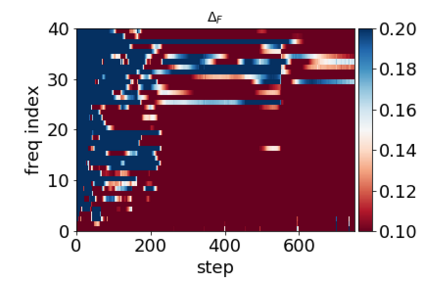

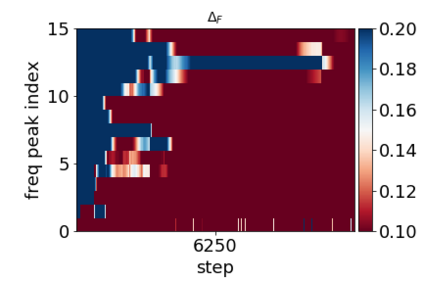

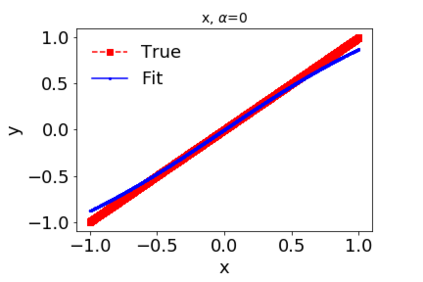

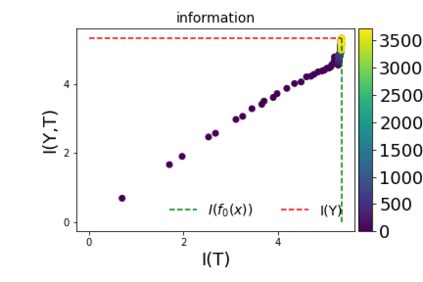

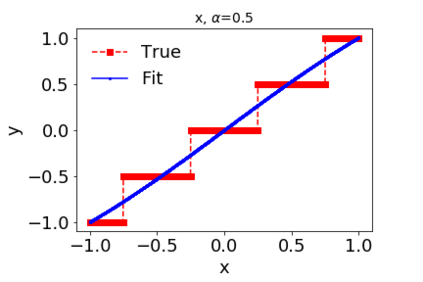

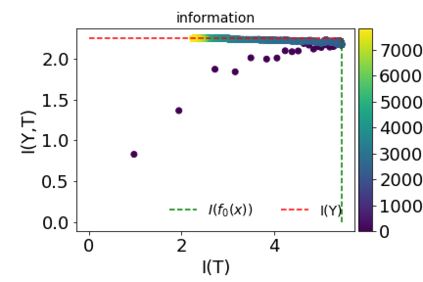

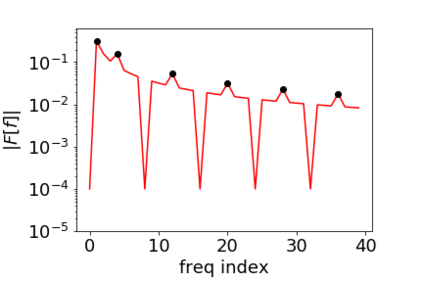

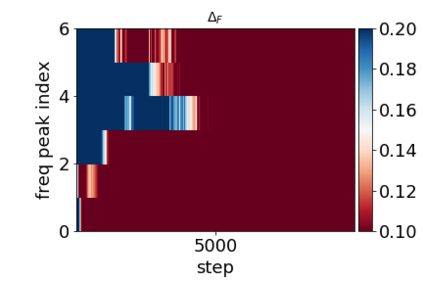

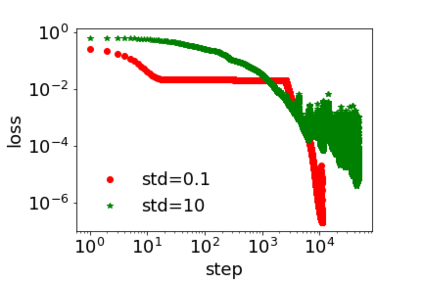

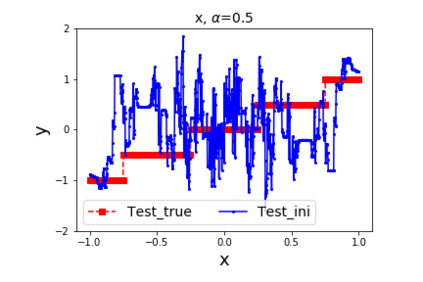

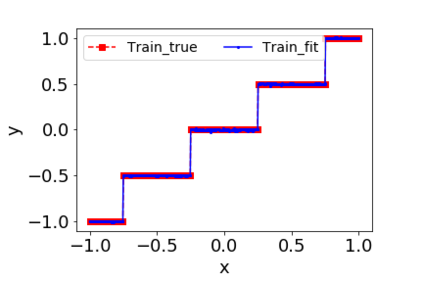

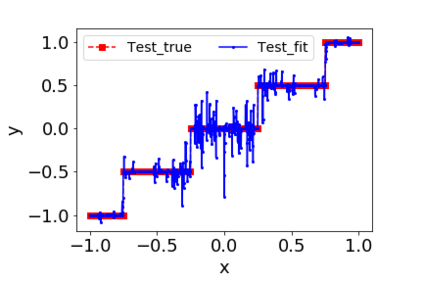

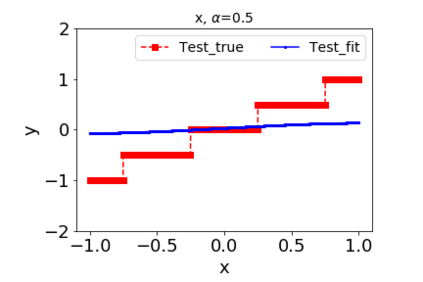

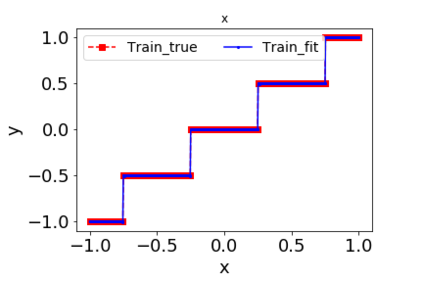

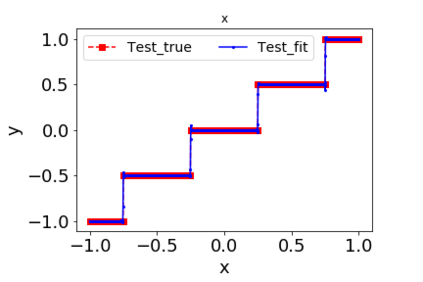

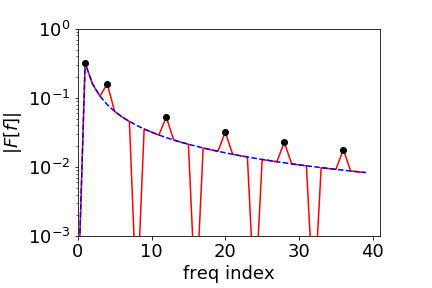

Why deep neural networks (DNNs) that are capable of overfitting often generalize well in practice is a mystery in deep learning. Existing works indicate that this observation holds both for complicated real datasets and for simple datasets of one-dimensional (1-d) functions. In this work, for natural images and low-frequency-dominant 1-d functions, we empirically find that a DNN with common settings first quickly captures the dominant low-frequency components and then relatively slowly captures the high-frequency ones. We call this phenomenon the Frequency Principle (F-Principle). In our experiments, the F-Principle can be observed across various DNN setups with different activation functions, layer structures, and training algorithms. The F-Principle can be used to understand (i) the behavior of DNN training in the information plane and (ii) why DNNs often generalize well despite their ability to overfit. The F-Principle may therefore provide insight into the general principles underlying DNN optimization and generalization on real datasets.
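The F-Principle can be probed with a small 1-d experiment. The sketch below is an illustration under stated assumptions, not the authors' code. The target is sin(πx) + 0.5 sin(5πx), sampled at 64 points on [-1, 1). The model is a one-hidden-layer tanh network trained by plain full-batch gradient descent in NumPy. Training is tracked through the relative error |Y[k] - H[k]| / |Y[k]| of the discrete Fourier transforms of the target (Y) and the network output (H) at the low index k = 1 and the high index k = 5. The function names `target`, `relative_spectral_error` and `train`, and all hyperparameters, are hypothetical.

```python
import numpy as np


def target(x):
    # Assumed test function: a dominant low-frequency term (1 period on [-1, 1))
    # plus a weaker high-frequency term (5 periods on [-1, 1)).
    return np.sin(np.pi * x) + 0.5 * np.sin(5 * np.pi * x)


def relative_spectral_error(y_true, y_pred, freqs):
    """Relative error |Y[k] - H[k]| / |Y[k]| of the DFTs at the given indices k."""
    Y = np.fft.rfft(y_true)
    H = np.fft.rfft(y_pred)
    return {k: float(np.abs(Y[k] - H[k]) / np.abs(Y[k])) for k in freqs}


def train(steps=2000, hidden=200, lr=0.005, seed=0, record_every=100):
    """Full-batch gradient descent on a 1-hidden-layer tanh network (hypothetical setup)."""
    rng = np.random.default_rng(seed)
    n = 64
    x = np.linspace(-1.0, 1.0, n, endpoint=False)[:, None]  # inputs, shape (n, 1)
    y = target(x[:, 0])                                      # targets, shape (n,)

    # Parameters of out(x) = tanh(x W1 + b1) W2 + b2; small output weights
    # keep the initial network output close to zero.
    W1 = rng.normal(0.0, 1.0, (1, hidden))
    b1 = rng.normal(0.0, 1.0, hidden)
    W2 = rng.normal(0.0, 0.1 / np.sqrt(hidden), (hidden, 1))
    b2 = np.zeros(1)
    params = [W1, b1, W2, b2]

    history = []
    for t in range(1, steps + 1):
        # Forward pass.
        a = np.tanh(x @ W1 + b1)        # hidden activations, shape (n, hidden)
        out = (a @ W2 + b2)[:, 0]       # network output, shape (n,)
        err = out - y

        # Backward pass for the loss 0.5 * mean(err ** 2).
        g_out = err[:, None] / n                # dL/d(out), shape (n, 1)
        g_z = (g_out @ W2.T) * (1.0 - a ** 2)   # dL/d(pre-activation), shape (n, hidden)
        grads = [x.T @ g_z, g_z.sum(axis=0), a.T @ g_out, g_out.sum(axis=0)]

        # In-place gradient-descent update of every parameter array.
        for p, g in zip(params, grads):
            p -= lr * g

        if t % record_every == 0:
            # Spectral error of the output computed in this step's forward pass.
            history.append((t, relative_spectral_error(y, out, freqs=(1, 5))))
    return history


if __name__ == "__main__":
    for step, errs in train():
        print(f"step {step:5d}  low (k=1): {errs[1]:.3f}  high (k=5): {errs[5]:.3f}")
```

Every 100 steps the script prints the relative error at k = 1 and k = 5. If the F-Principle holds in this setting, the k = 1 error should drop well before the k = 5 error does. Because the sampled interval is not periodic for a generic network output, some spectral leakage is expected. The exact numbers also depend on the assumed seed, width and learning rate.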

翻译:在深层神经网络(DNN)中,能够超常推广实践的深层神经网络(DNN)为何是一个深层学习的谜。现有工作表明,这一观察既包含复杂的真实数据集,也包含一维功能(1-d)的简单数据集。在这项工作中,对于自然图像和低频主要1d函数,我们从经验上发现,具有共同环境的DNN首先迅速捕捉了主要的低频组件,然后相对缓慢地捕捉了高频组件。我们称之为现象频率原则(F-原则)。在实验中,对不同激活功能、层结构和培训算法的各种DNNN设置,可以观察到F原则。F-原则可以用来理解(一) DNN在信息平面上的培训行为,以及(二)为什么DNNN通常广泛,尽管其能力过大。这一F原则有可能为理解DNN优化和对真实数据集概括的一般原则提供深入的了解。