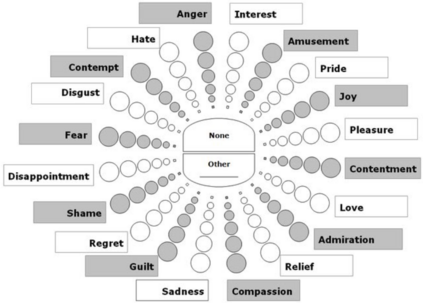

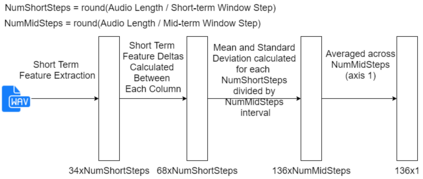

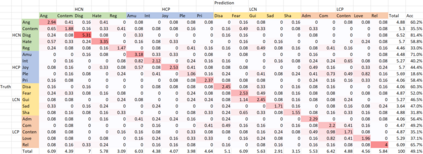

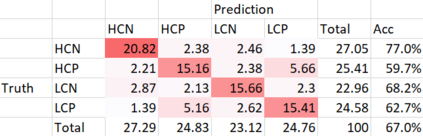

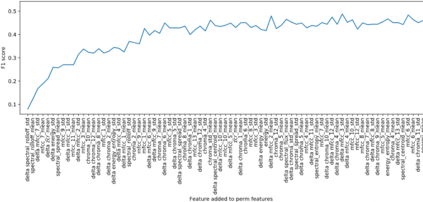

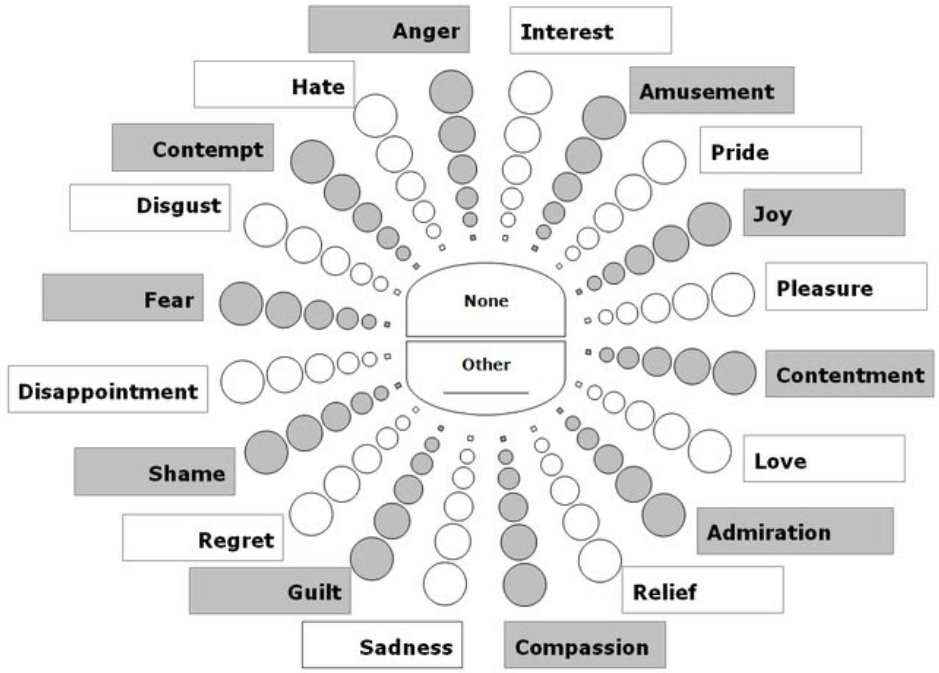

The task of classifying emotions within a musical track has received widespread attention within the Music Information Retrieval (MIR) community. Music emotion recognition has traditionally relied on acoustic features, verbal features, and metadata-based filtering. The role of musical prosody remains under-explored, despite several studies demonstrating a strong connection between prosody and emotion. In this study, we restrict the input of traditional machine learning algorithms to the features of musical prosody. Our approach also builds upon prior work by classifying emotions under an expanded emotional taxonomy, the Geneva Wheel of Emotion. We collect data individually from vocalists, with ground-truth labels assigned by the artists themselves. We found that traditional machine learning algorithms, when limited to the features of musical prosody, (1) achieve high accuracy for a single singer, (2) maintain high accuracy when the dataset is expanded to multiple singers, and (3) achieve high accuracy when trained on a reduced subset of the total features.
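To make the setup concrete, the following is a minimal sketch of the kind of experiment described above: a classifier that sees only prosodic features, evaluated once on all features and once on a reduced subset. Everything specific here is an assumption for illustration. The feature names, the three placeholder emotion labels, and the synthetic data are hypothetical, and a simple nearest-centroid classifier stands in for the unspecified "traditional machine learning algorithms".

```python
import random
from statistics import mean

# Hypothetical prosodic feature names; the study's actual feature set is not given here.
FEATURES = ["pitch_mean", "pitch_range", "intensity_mean", "phrase_duration"]

# Made-up class centers used only to generate toy data (not real measurements).
CENTERS = {
    "joy":     [0.8, 0.7, 0.6, 0.3],
    "sadness": [0.2, 0.2, 0.3, 0.8],
    "anger":   [0.6, 0.9, 0.9, 0.4],
}

def make_toy_data(n_per_class, seed):
    """Generate (feature_vector, label) pairs as Gaussian noise around each center."""
    rng = random.Random(seed)
    data = []
    for label, center in CENTERS.items():
        for _ in range(n_per_class):
            data.append(([c + rng.gauss(0, 0.05) for c in center], label))
    return data

def fit_centroids(samples, cols):
    """Nearest-centroid 'training': average each selected feature per label."""
    by_label = {}
    for x, y in samples:
        by_label.setdefault(y, []).append([x[i] for i in cols])
    return {y: [mean(col) for col in zip(*rows)] for y, rows in by_label.items()}

def predict(centroids, x, cols):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    v = [x[i] for i in cols]
    return min(centroids, key=lambda y: sum((a - b) ** 2 for a, b in zip(v, centroids[y])))

def accuracy(train, test, cols):
    """Fit on train and report the fraction of test samples labeled correctly."""
    centroids = fit_centroids(train, cols)
    hits = sum(predict(centroids, x, cols) == y for x, y in test)
    return hits / len(test)

train = make_toy_data(30, seed=0)
test = make_toy_data(10, seed=1)

all_cols = list(range(len(FEATURES)))
subset_cols = [0, 1]  # reduced subset: pitch_mean and pitch_range only

acc_full = accuracy(train, test, all_cols)
acc_subset = accuracy(train, test, subset_cols)
print(f"all features: {acc_full:.2f}, reduced subset: {acc_subset:.2f}")
```

Because the toy classes are well separated by construction, the accuracies printed here say nothing about real singing data; the sketch only shows how restricting the input columns corresponds to training on a reduced feature subset.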
