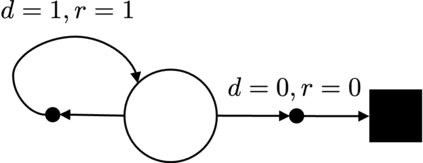

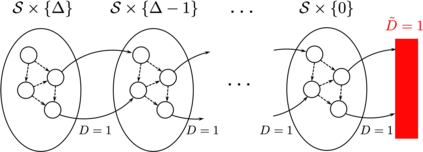

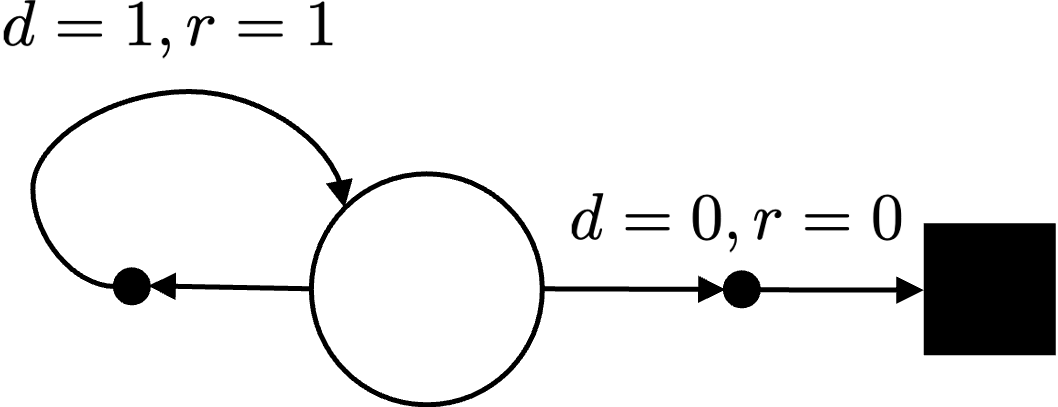

In this work we address the problem of finding feasible policies for Constrained Markov Decision Processes under probability one constraints. We argue that stationary policies are not sufficient for solving this problem, and that a rich class of policies can be found by endowing the controller with a scalar quantity, so called budget, that tracks how close the agent is to violating the constraint. We show that the minimal budget required to act safely can be obtained as the smallest fixed point of a Bellman-like operator, for which we analyze its convergence properties. We also show how to learn this quantity when the true kernel of the Markov decision process is not known, while providing sample-complexity bounds. The utility of knowing this minimal budget relies in that it can aid in the search of optimal or near-optimal policies by shrinking down the region of the state space the agent must navigate. Simulations illustrate the different nature of probability one constraints against the typically used constraints in expectation.

翻译:在这项工作中,我们在一种可能性的限制下,解决了为控制Markov决定程序寻找可行政策的问题。我们争辩说,固定政策不足以解决问题,而通过向控制者提供数量惊人的、所谓的预算,可以找到大量的政策,从而追踪控制者是如何接近违反限制的。我们表明,安全行动所需的最低预算可以作为像Bellman一样的操作者最小的固定点获得,对此我们分析其趋同特性。我们还表明,当不知道Markov决定程序的真正内核时,如何了解这一数量,同时提供样本的复杂性约束。了解这一最低限度预算的效用在于它能够帮助寻找最佳或接近最佳的政策,缩小该操作者必须航行的州空间面积。模拟说明了一种限制对于通常使用的预期限制的概率的不同性质。