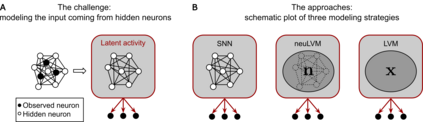

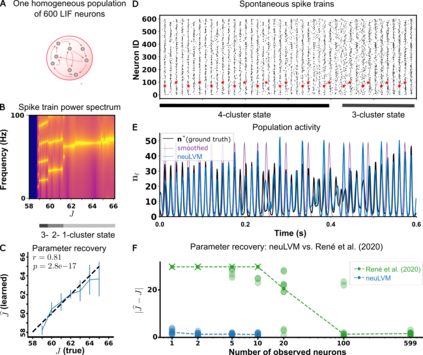

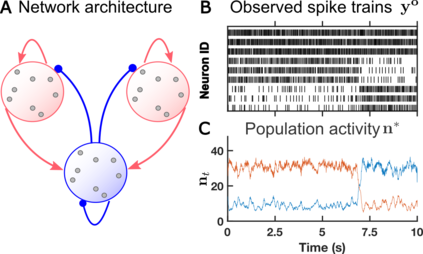

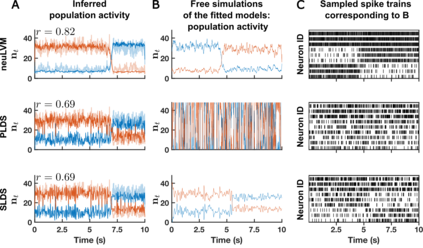

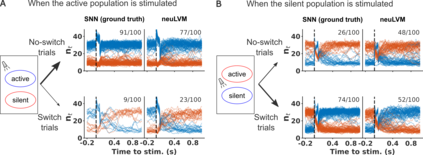

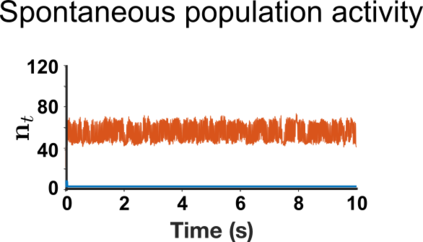

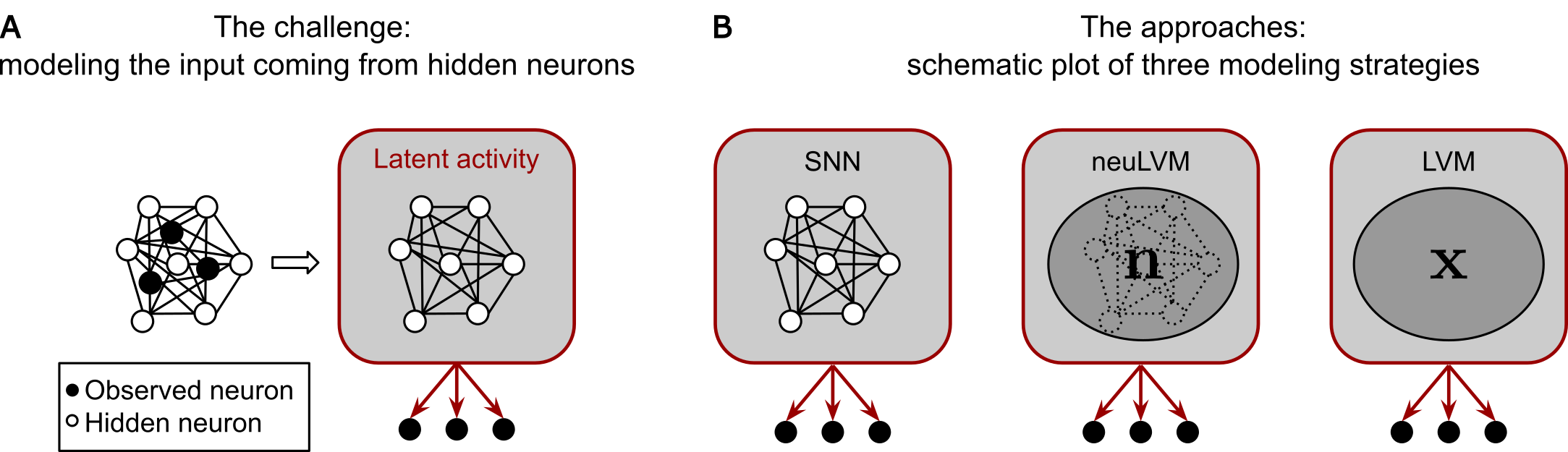

Can we use spiking neural networks (SNNs) as generative models of multi-neuronal recordings while taking into account that most neurons are unobserved? Modeling the unobserved neurons with large pools of hidden spiking neurons leads to severely underconstrained problems that are hard to tackle with maximum likelihood estimation. In this work, we use coarse-graining and mean-field approximations to derive a bottom-up, neuronally grounded latent variable model (neuLVM), in which the activity of the unobserved neurons is reduced to a low-dimensional mesoscopic description. In contrast to previous latent variable models, neuLVM can be explicitly mapped to a recurrent, multi-population SNN, which gives it a transparent biological interpretation. We show on synthetic spike trains that a few observed neurons are sufficient for neuLVM to perform efficient model inversion of large SNNs, in the sense that it can recover connectivity parameters, infer single-trial latent population activity, reproduce ongoing metastable dynamics, and generalize when subjected to perturbations mimicking photo-stimulation.
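The core idea, replacing many unobserved neurons by a few population-level activity variables and scoring the observed spike trains against them, can be illustrated with a toy sketch. This is not neuLVM's actual mesoscopic model. It assumes a hypothetical deterministic, discrete-time mean-field rate model with a sigmoidal transfer function (`transfer`, `R_MAX`), and assumes that each observed neuron fires as a Bernoulli process at its population's rate. Unlike neuLVM, which infers single-trial latent population activity, the latent trajectory here is fully determined by the parameters. All function names and parameter values are illustrative.

```python
import math
import random

R_MAX = 50.0  # hypothetical maximal firing rate (Hz) of the sigmoidal transfer function


def transfer(h, r_max=R_MAX, beta=1.0, theta=0.0):
    """Sigmoidal rate function (assumed): mean firing rate in Hz for input potential h."""
    return r_max / (1.0 + math.exp(-beta * (h - theta)))


def mean_field_activity(J, I_ext, T):
    """Deterministic mean-field trajectory A[t][k] (Hz) of K coupled populations.

    Toy update: h_k(t) = I_ext[k] + sum_l J[k][l] * A_l(t-1), then A_k(t) = transfer(h_k(t)).
    The K numbers A[t] stand in for the activity of all (unobserved) neurons.
    """
    K = len(I_ext)
    prev = [0.0] * K  # populations start silent
    A = []
    for _ in range(T):
        h = [I_ext[k] + sum(J[k][l] * prev[l] for l in range(K)) for k in range(K)]
        prev = [transfer(hk) for hk in h]
        A.append(prev)
    return A


def sample_observed_spikes(A, pop, dt, rng):
    """Bernoulli spikes for observed neurons; neuron i fires at the rate of population pop[i]."""
    return [[1 if rng.random() < -math.expm1(-A[t][pop[i]] * dt) else 0
             for t in range(len(A))]
            for i in range(len(pop))]


def observed_log_likelihood(spikes, pop, A, dt):
    """log p(observed spikes | population activity A) under the Bernoulli model above."""
    ll = 0.0
    for i, train in enumerate(spikes):
        for t, y in enumerate(train):
            lam = A[t][pop[i]] * dt  # expected spike count in this time bin
            # P(spike) = 1 - exp(-lam), P(no spike) = exp(-lam)
            ll += math.log(-math.expm1(-lam)) if y else -lam
    return ll


if __name__ == "__main__":
    rng = random.Random(0)
    J_true = [[0.04, -0.05], [0.05, -0.02]]  # hypothetical E/I-like coupling (pop 0: E, pop 1: I)
    I_ext = [-1.0, -1.5]
    dt, T = 0.001, 5000  # 1 ms bins, 5 s of simulated time
    pop = [0] * 5 + [1] * 5  # 10 observed neurons, population membership assumed known
    A_true = mean_field_activity(J_true, I_ext, T)
    spikes = sample_observed_spikes(A_true, pop, dt, rng)
    # Crude "model inversion": scan a global coupling scale and score each candidate.
    for scale in (0.0, 0.5, 1.0, 1.5, 2.0):
        J = [[scale * w for w in row] for row in J_true]
        ll = observed_log_likelihood(spikes, pop, mean_field_activity(J, I_ext, T), dt)
        print(f"scale={scale:.1f}  log-likelihood={ll:.1f}")
```

The grid scan only hints at how connectivity can be scored from a handful of observed neurons; a realistic treatment would optimize all parameters jointly and, as in neuLVM, also infer the latent population activity of each trial.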
