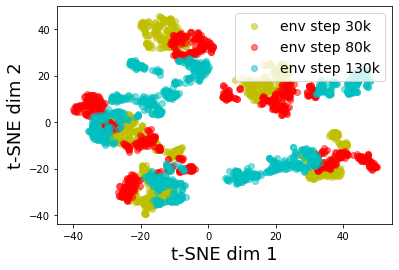

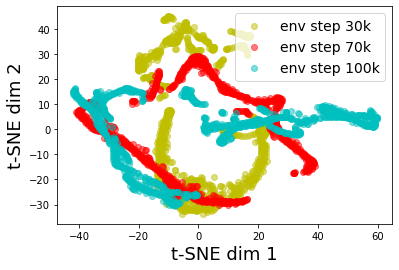

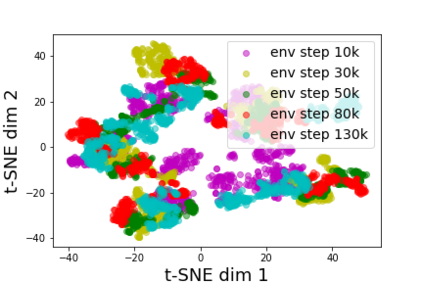

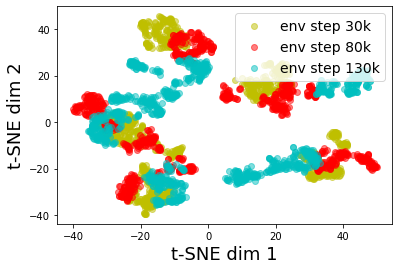

Model-based reinforcement learning (RL) often achieves higher sample efficiency in practice than model-free RL by learning a dynamics model to generate samples for policy learning. Previous works fit the dynamics model to the empirical state-action visitation distribution of all historical policies, i.e., the sample replay buffer. However, in this paper, we observe that fitting the dynamics model under the distribution of *all historical policies* does not necessarily benefit model prediction for the *current policy*, since the policy in use is constantly evolving over time. The evolving policy causes the state-action visitation distribution to shift during training. We theoretically analyze how this distribution shift over historical policies affects model learning and model rollouts. We then propose a novel dynamics model learning method, named *Policy-adapted Dynamics Model Learning (PDML)*. PDML dynamically adjusts the historical policy mixture distribution to ensure the learned model can continually adapt to the state-action visitation distribution of the evolving policy. Experiments on a range of continuous control environments in MuJoCo show that PDML achieves significant improvement in sample efficiency and higher asymptotic performance when combined with state-of-the-art model-based RL methods.
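To make the idea of adjusting the historical policy mixture more concrete, the following is a minimal sketch of non-uniform sampling from a replay buffer when building a model-training batch. It is not the weighting rule used by PDML, which the abstract does not specify. The geometric `decay` schedule, the function names `policy_mixture_weights` and `sample_model_batch`, and the `(policy_index, transition)` buffer layout are all assumptions made for illustration.

```python
import random
from collections import Counter


def policy_mixture_weights(num_policies, decay=0.5):
    """Return normalized mixture weights over policies 0..num_policies-1.

    Assumption (not from the paper): the weight decays geometrically with
    the age of the policy, so the most recent policy gets the largest
    weight. decay=1.0 gives every policy the same weight.
    """
    if num_policies <= 0:
        raise ValueError("num_policies must be positive")
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    raw = [decay ** (num_policies - 1 - i) for i in range(num_policies)]
    total = sum(raw)
    return [w / total for w in raw]


def sample_model_batch(buffer, batch_size, decay=0.5, rng=None):
    """Sample transitions for model training under a reweighted mixture.

    buffer: non-empty list of (policy_index, transition) pairs, where
    policy_index records which historical policy collected the transition
    (a hypothetical layout chosen for this sketch).
    """
    rng = rng or random.Random()
    num_policies = max(p for p, _ in buffer) + 1
    mixture = policy_mixture_weights(num_policies, decay)
    counts = Counter(p for p, _ in buffer)
    # Spread each policy's mixture weight evenly over its own transitions.
    per_item = [mixture[p] / counts[p] for p, _ in buffer]
    return rng.choices(buffer, weights=per_item, k=batch_size)
```

In this sketch, `decay=1.0` weights every historical policy equally, while smaller values concentrate model training on data from recent policies, which is the direction the abstract argues for.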
