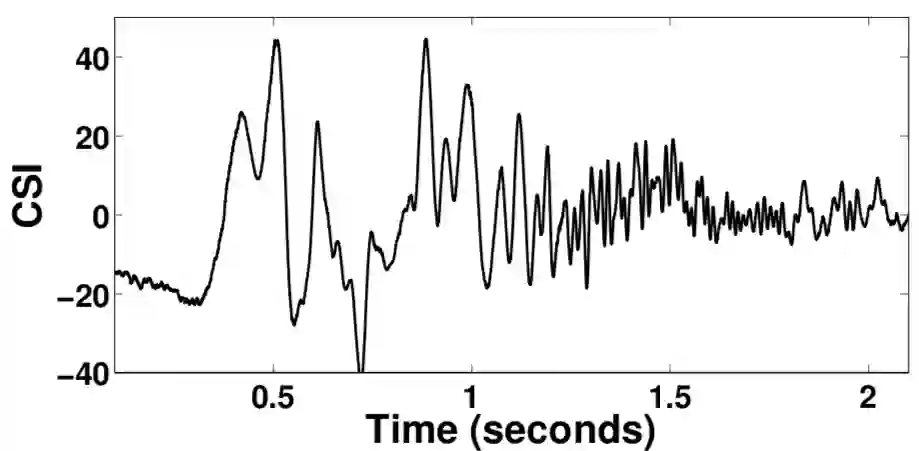

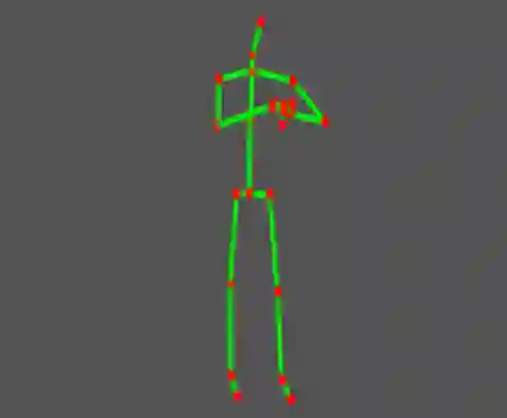

Human Action Recognition (HAR) aims to understand human behavior and assign a label to each action. It has a wide range of applications and has therefore attracted increasing attention in the field of computer vision. Human actions can be represented using various data modalities, such as RGB, skeleton, depth, infrared, point cloud, event stream, audio, acceleration, radar, and WiFi signals. These modalities encode different sources of useful yet distinct information, and each has its own advantages depending on the application scenario. Consequently, many existing works have investigated different types of approaches for HAR using various modalities. In this paper, we present a comprehensive survey of recent progress in deep learning methods for HAR, organized by the type of input data modality. Specifically, we review the current mainstream deep learning methods for single data modalities and for multiple data modalities, the latter including both fusion-based and co-learning-based frameworks. We also present comparative results on several benchmark datasets for HAR, together with insightful observations and inspiring future research directions.
