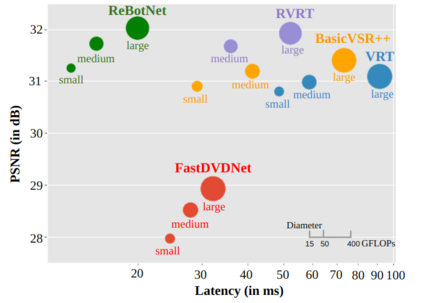

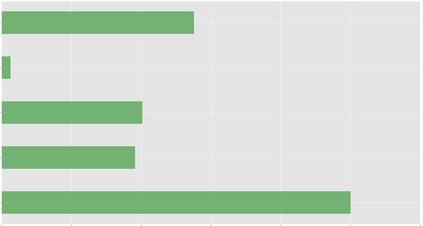

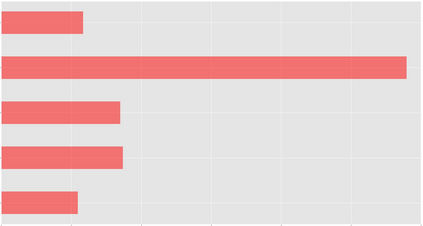

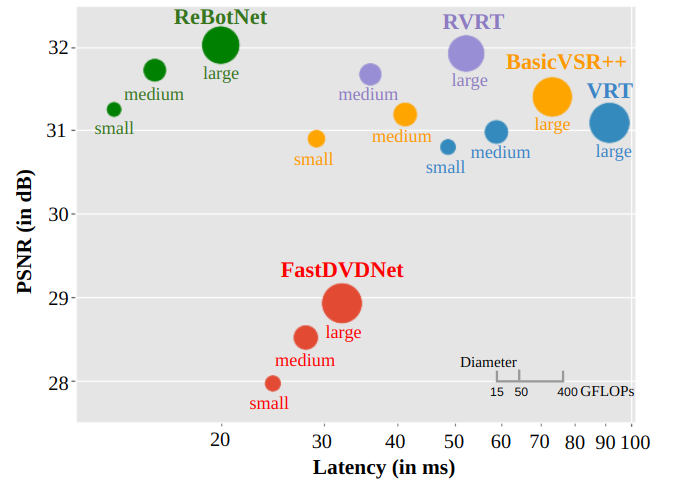

Most video restoration networks are slow, have high computational load, and can't be used for real-time video enhancement. In this work, we design an efficient and fast framework to perform real-time video enhancement for practical use-cases like live video calls and video streams. Our proposed method, called Recurrent Bottleneck Mixer Network (ReBotNet), employs a dual-branch framework. The first branch learns spatio-temporal features by tokenizing the input frames along the spatial and temporal dimensions using a ConvNext-based encoder and processing these abstract tokens using a bottleneck mixer. To further improve temporal consistency, the second branch employs a mixer directly on tokens extracted from individual frames. A common decoder then merges the features form the two branches to predict the enhanced frame. In addition, we propose a recurrent training approach where the last frame's prediction is leveraged to efficiently enhance the current frame while improving temporal consistency. To evaluate our method, we curate two new datasets that emulate real-world video call and streaming scenarios, and show extensive results on multiple datasets where ReBotNet outperforms existing approaches with lower computations, reduced memory requirements, and faster inference time.

翻译:大多数视频修复网络速度较慢,计算负荷高,无法用于实时视频增强。在本文中,我们设计了一种高效且快速的框架,用于执行实时视频增强,适用于实际应用场景,如实时视频通话和视频流。我们提出的方法称为循环瓶颈混合器网络(ReBotNet),采用双分支框架。第一个分支通过使用基于ConvNext的编码器将输入帧在时空维度上进行标记,并使用瓶颈混合器处理这些抽象标记,从而学习时空特征。为了进一步提高时间一致性,第二个分支对从单个帧提取的标记直接使用混合器。共同的解码器然后合并两个分支的特征以预测增强的帧。此外,我们提出了一种循环训练方法,利用最后一帧的预测结果有效地增强当前帧,同时提高时间一致性。为了评估我们的方法,我们制作了两个新数据集,模拟了实际的视频通话和流媒体场景,并在多个数据集上展示广泛的结果,其中ReBotNet以更低的计算、更少的内存要求和更快的推理时间优于现有方法。