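[Paper Recommendations] Six Recent Object Tracking Papers: Twofold Siamese Network, Discriminative Correlation Filter, Multi-Object Tracking, Deep Multi-scale Spatial-Temporal Discriminative Saliency, Survey, Saliency Enhancement

[Overview] The Zhuanzhi content team has compiled six recent papers on object tracking and introduces them below.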

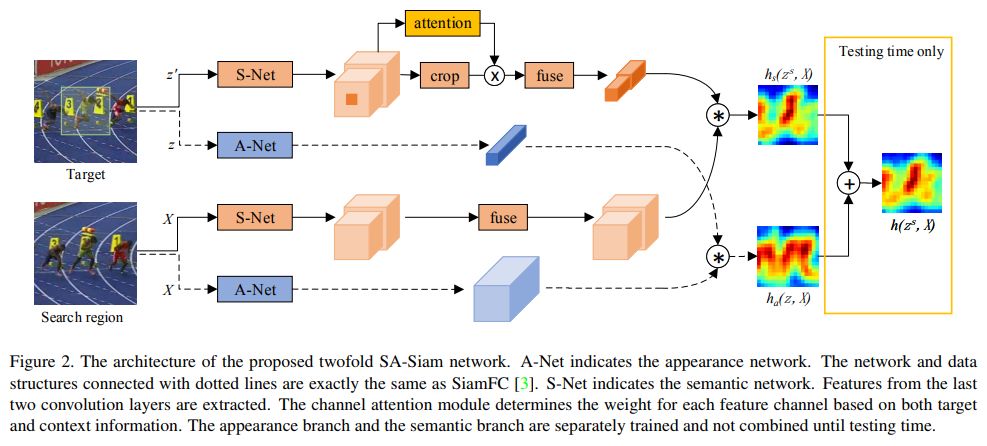

1. A Twofold Siamese Network for Real-Time Object Tracking

Authors: Anfeng He, Chong Luo, Xinmei Tian, Wenjun Zeng

Abstract: Observing that Semantic features learned in an image classification task and Appearance features learned in a similarity matching task complement each other, we build a twofold Siamese network, named SA-Siam, for real-time object tracking. SA-Siam is composed of a semantic branch and an appearance branch. Each branch is a similarity-learning Siamese network. An important design choice in SA-Siam is to separately train the two branches to keep the heterogeneity of the two types of features. In addition, we propose a channel attention mechanism for the semantic branch. Channel-wise weights are computed according to the channel activations around the target position. While the inherited architecture from SiamFC allows our tracker to operate beyond real-time, the twofold design and the attention mechanism significantly improve the tracking performance. The proposed SA-Siam outperforms all other real-time trackers by a large margin on OTB-2013/50/100 benchmarks.

Source: arXiv, February 24, 2018; accepted by CVPR'18

Link:

http://www.zhuanzhi.ai/document/d6bddb43beb558ca61e5a8c4fad507de
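The similarity matching that the SA-Siam abstract refers to can be illustrated with a toy example. The sketch below is not the authors' code: it assumes single-channel "features" stored as plain 2-D lists and shows only the cross-correlation step used by SiamFC-style trackers, where a template is slid over a search region and the peak of the response map is taken as the target position. The function names `cross_correlate` and `peak` are hypothetical, and the two branches and the channel attention described in the abstract are not modeled.

```python
def cross_correlate(search, template):
    """Slide `template` over `search` (2-D lists) and return the response map."""
    sh, sw = len(search), len(search[0])
    th, tw = len(template), len(template[0])
    response = []
    for y in range(sh - th + 1):
        row = []
        for x in range(sw - tw + 1):
            # Inner product between the template and the current window.
            score = sum(
                search[y + dy][x + dx] * template[dy][dx]
                for dy in range(th)
                for dx in range(tw)
            )
            row.append(score)
        response.append(row)
    return response


def peak(response):
    """Return (row, col) of the highest score in the response map."""
    best = max(
        (value, y, x)
        for y, row in enumerate(response)
        for x, value in enumerate(row)
    )
    return best[1], best[2]


# Toy data: the template appears in the search region at offset (1, 1).
template = [[1, 2], [3, 4]]
search = [
    [0, 0, 0, 0],
    [0, 1, 2, 0],
    [0, 3, 4, 0],
    [0, 0, 0, 0],
]
response = cross_correlate(search, template)
location = peak(response)
```

In a real tracker the inputs would be multi-channel feature maps produced by a learned network rather than raw pixel-like values.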

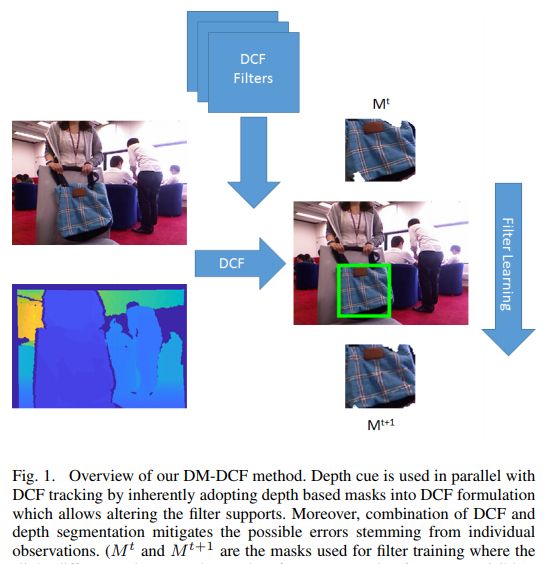

2. Depth Masked Discriminative Correlation Filter

Authors: Uğur Kart, Joni-Kristian Kämäräinen, Jiří Matas, Lixin Fan, Francesco Cricri

Abstract: Depth information provides a strong cue for occlusion detection and handling, but has been largely omitted in generic object tracking until recently due to lack of suitable benchmark datasets and applications. In this work, we propose a Depth Masked Discriminative Correlation Filter (DM-DCF) which adopts novel depth segmentation based occlusion detection that stops correlation filter updating and depth masking which adaptively adjusts the spatial support for correlation filter. In Princeton RGBD Tracking Benchmark, our DM-DCF is among the state-of-the-art in overall ranking and the winner on multiple categories. Moreover, since it is based on DCF, DM-DCF runs an order of magnitude faster than its competitors making it suitable for time constrained applications.

Source: arXiv, February 26, 2018

Link:

http://www.zhuanzhi.ai/document/77e120e0e7eda489c8056fe355dc7882
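The idea of stopping the filter update during occlusion, mentioned in the DM-DCF abstract, can be sketched roughly as follows. This is an illustration under loud assumptions, not the paper's method: the occlusion test here is a simple depth-threshold heuristic standing in for the depth segmentation the authors describe, the filter is reduced to a flat list of coefficients, and every name and parameter (`is_occluded`, `update_filter`, `tolerance`, `min_visible_ratio`, `learning_rate`) is hypothetical.

```python
def is_occluded(target_depths, expected_depth, tolerance, min_visible_ratio):
    """Flag occlusion when too few pixels lie near the expected target depth.

    Assumption: a stand-in heuristic, not the depth segmentation of DM-DCF.
    """
    visible = sum(1 for d in target_depths if abs(d - expected_depth) <= tolerance)
    return visible / len(target_depths) < min_visible_ratio


def update_filter(model, new_estimate, learning_rate, occluded):
    """Blend the new estimate into the model unless the target is occluded."""
    if occluded:
        return list(model)  # freeze the model while the target is hidden
    return [
        (1.0 - learning_rate) * m + learning_rate * n
        for m, n in zip(model, new_estimate)
    ]


model = [1.0, 1.0]
new_estimate = [3.0, 5.0]

# Most pixels sit near the expected depth of 2.0 m, so the filter is updated.
clear = is_occluded([2.0, 2.1, 1.9, 2.0], 2.0, 0.3, 0.5)
updated = update_filter(model, new_estimate, 0.5, clear)

# Something closer to the camera covers the target, so the model is kept.
blocked = is_occluded([0.8, 0.9, 2.0, 0.7], 2.0, 0.3, 0.5)
frozen = update_filter(model, new_estimate, 0.5, blocked)
```

Freezing the model in this way keeps the occluder's appearance from being learned into the filter.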

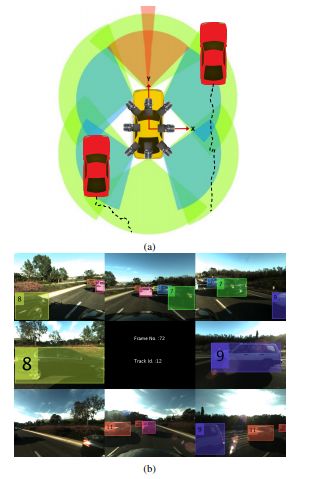

3. No Blind Spots: Full-Surround Multi-Object Tracking for Autonomous Vehicles using Cameras & LiDARs

Authors: Akshay Rangesh, Mohan M. Trivedi

Abstract: Online multi-object tracking (MOT) is extremely important for high-level spatial reasoning and path planning for autonomous and highly-automated vehicles. In this paper, we present a modular framework for tracking multiple objects (vehicles), capable of accepting object proposals from different sensor modalities (vision and range) and a variable number of sensors, to produce continuous object tracks. This work is inspired by traditional tracking-by-detection approaches in computer vision, with some key differences - First, we track objects across multiple cameras and across different sensor modalities. This is done by fusing object proposals across sensors accurately and efficiently. Second, the objects of interest (targets) are tracked directly in the real world. This is a departure from traditional techniques where objects are simply tracked in the image plane. Doing so allows the tracks to be readily used by an autonomous agent for navigation and related tasks. To verify the effectiveness of our approach, we test it on real world highway data collected from a heavily sensorized testbed capable of capturing full-surround information. We demonstrate that our framework is well-suited to track objects through entire maneuvers around the ego-vehicle, some of which take more than a few minutes to complete. We also leverage the modularity of our approach by comparing the effects of including/excluding different sensors, changing the total number of sensors, and the quality of object proposals on the final tracking result.

Source: arXiv, February 24, 2018

Link:

http://www.zhuanzhi.ai/document/cd91388d123c0e249c1ec3ba3686c9e3

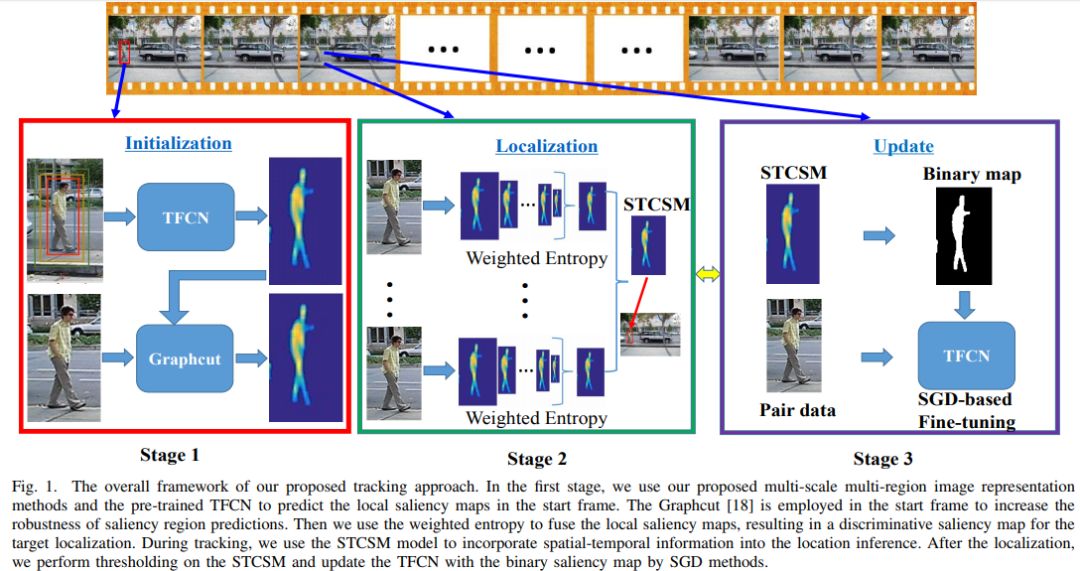

4. Non-rigid Object Tracking via Deep Multi-scale Spatial-Temporal Discriminative Saliency Maps

Authors: Pingping Zhang, Dong Wang, Huchuan Lu, Hongyu Wang

Abstract: In this paper we propose an effective non-rigid object tracking method based on spatial-temporal consistent saliency detection. In contrast to most existing trackers that use a bounding box to specify the tracked target, the proposed method can extract the accurate regions of the target as tracking output, which achieves better description of the non-rigid objects while reduces background pollution to the target model. Furthermore, our model has several unique features. First, a tailored deep fully convolutional neural network (TFCN) is developed to model the local saliency prior for a given image region, which not only provides the pixel-wise outputs but also integrates the semantic information. Second, a multi-scale multi-region mechanism is proposed to generate local region saliency maps that effectively consider visual perceptions with different spatial layouts and scale variations. Subsequently, these saliency maps are fused via a weighted entropy method, resulting in a final discriminative saliency map. Finally, we present a non-rigid object tracking algorithm based on the proposed saliency detection method by utilizing a spatial-temporal consistent saliency map (STCSM) model to conduct target-background classification and using a simple fine-tuning scheme for online updating. Numerous experimental results demonstrate that the proposed algorithm achieves competitive performance in comparison with state-of-the-art methods for both saliency detection and visual tracking, especially outperforming other related trackers on the non-rigid object tracking datasets.

Source: arXiv, February 22, 2018

Link:

http://www.zhuanzhi.ai/document/3bb3c56a31da729226162d614020790a

5. Tracking Noisy Targets: A Review of Recent Object Tracking Approaches(跟踪有噪声的目标:一个关于近期物体跟踪方法的综述)

作者:Mustansar Fiaz,Arif Mahmood,Soon Ki Jung

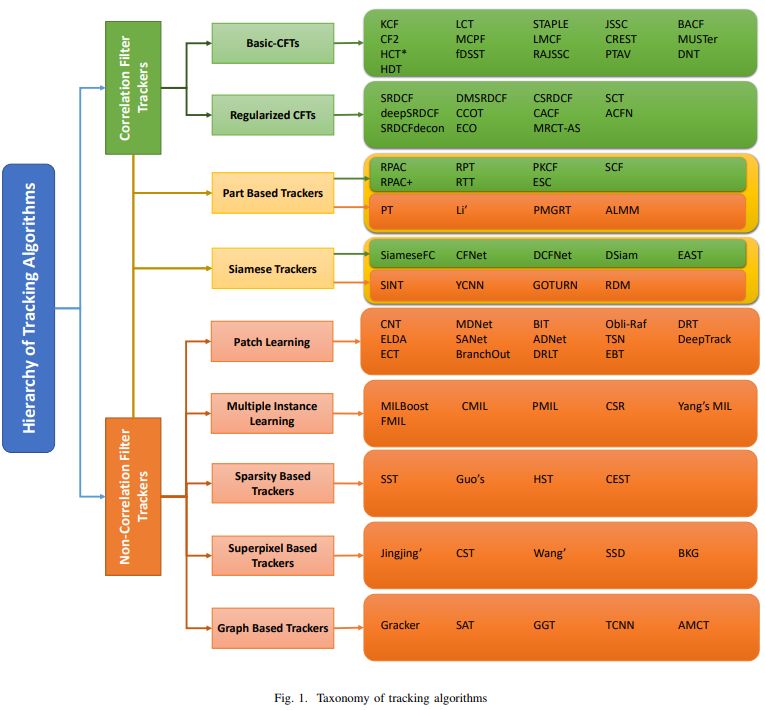

摘要:Visual object tracking is an important computer vision problem with numerous real-world applications including human-computer interaction, autonomous vehicles, robotics, motion-based recognition, video indexing, surveillance and security. In this paper, we aim to extensively review the latest trends and advances in the tracking algorithms and evaluate the robustness of trackers in the presence of noise. The first part of this work comprises a comprehensive survey of recently proposed tracking algorithms. We broadly categorize trackers into correlation filter based trackers and the others as non-correlation filter trackers. Each category is further classified into various types of trackers based on the architecture of the tracking mechanism. In the second part of this work, we experimentally evaluate tracking algorithms for robustness in the presence of additive white Gaussian noise. Multiple levels of additive noise are added to the Object Tracking Benchmark (OTB) 2015, and the precision and success rates of the tracking algorithms are evaluated. Some algorithms suffered more performance degradation than others, which brings to light a previously unexplored aspect of the tracking algorithms. The relative rank of the algorithms based on their performance on benchmark datasets may change in the presence of noise. Our study concludes that no single tracker is able to achieve the same efficiency in the presence of noise as under noise-free conditions; thus, there is a need to include a parameter for robustness to noise when evaluating newly proposed tracking algorithms.

期刊:arXiv, 2018年2月14日

网址:

http://www.zhuanzhi.ai/document/b3f483fca268e01fdbd89a2ae37eb111
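The noise experiment described in the survey abstract, adding several levels of additive white Gaussian noise to benchmark frames, can be sketched as below. This is a minimal illustration, not the authors' protocol: it assumes 8-bit grayscale frames stored as 2-D lists, and the noise levels in `noise_levels` are made-up values, since the abstract does not state which levels were used. The name `add_gaussian_noise` is hypothetical.

```python
import random


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise with std `sigma` to an 8-bit grayscale image."""
    noisy = []
    for row in image:
        noisy.append([
            # Perturb each pixel independently, then clamp to the valid range.
            min(255, max(0, round(pixel + rng.gauss(0.0, sigma))))
            for pixel in row
        ])
    return noisy


rng = random.Random(0)  # fixed seed so the corrupted frames are reproducible
frame = [[10, 128, 250], [0, 64, 255]]
noise_levels = [0.0, 5.0, 25.0]  # hypothetical standard deviations
noisy_frames = {
    sigma: add_gaussian_noise(frame, sigma, rng) for sigma in noise_levels
}
```

Each tracker would then be run on every noisy copy of a sequence, and its precision and success rates compared across noise levels.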

6. Saliency-Enhanced Robust Visual TrackingSaliency-Enhanced Robust Visual Tracking(基于显著性增强的鲁棒视觉跟踪)

作者:Caglar Aytekin,Francesco Cricri,Emre Aksu

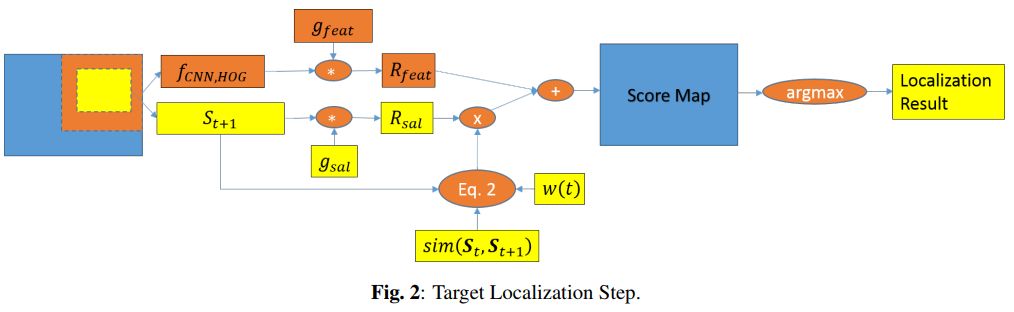

摘要:Discrete correlation filter (DCF) based trackers have shown considerable success in visual object tracking. These trackers often make use of low to mid level features such as histogram of gradients (HoG) and mid-layer activations from convolution neural networks (CNNs). We argue that including semantically higher level information to the tracked features may provide further robustness to challenging cases such as viewpoint changes. Deep salient object detection is one example of such high level features, as it make use of semantic information to highlight the important regions in the given scene. In this work, we propose an improvement over DCF based trackers by combining saliency based and other features based filter responses. This combination is performed with an adaptive weight on the saliency based filter responses, which is automatically selected according to the temporal consistency of visual saliency. We show that our method consistently improves a baseline DCF based tracker especially in challenging cases and performs superior to the state-of-the-art. Our improved tracker operates at 9.3 fps, introducing a small computational burden over the baseline which operates at 11 fps.

期刊:arXiv, 2018年2月8日

网址:

http://www.zhuanzhi.ai/document/e682340f1f9a9b0eee59e136642d8f85
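The adaptive combination of filter responses described in the abstract above can be pictured with a small sketch. It is only one plausible reading, not the paper's formulation: the consistency measure (one minus the mean absolute difference between consecutive saliency maps, assumed to lie in [0, 1]) and the additive fusion rule are assumptions made here for illustration, and the names `temporal_consistency` and `fuse_responses` are hypothetical.

```python
def temporal_consistency(prev_saliency, curr_saliency):
    """Return a score in [0, 1]; 1 means the two saliency maps are identical.

    Assumption: saliency values are already normalized to [0, 1].
    """
    diffs = [
        abs(p - c)
        for prev_row, curr_row in zip(prev_saliency, curr_saliency)
        for p, c in zip(prev_row, curr_row)
    ]
    return 1.0 - sum(diffs) / len(diffs)


def fuse_responses(feature_response, saliency_response, weight):
    """Add the saliency-based response, scaled by `weight`, to the feature response."""
    return [
        [f + weight * s for f, s in zip(f_row, s_row)]
        for f_row, s_row in zip(feature_response, saliency_response)
    ]


prev_saliency = [[0.0, 1.0], [0.0, 0.0]]
curr_saliency = [[0.0, 1.0], [0.0, 0.5]]
weight = temporal_consistency(prev_saliency, curr_saliency)

feature_response = [[0.2, 0.4], [0.1, 0.3]]
saliency_response = [[0.0, 0.8], [0.0, 0.0]]
fused = fuse_responses(feature_response, saliency_response, weight)
```

When saliency changes abruptly between frames, the weight drops and the tracker relies mostly on the other feature responses.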
