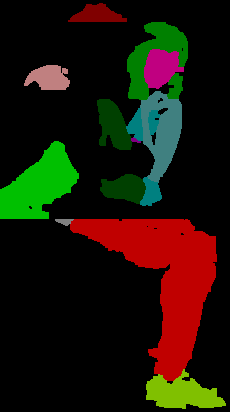

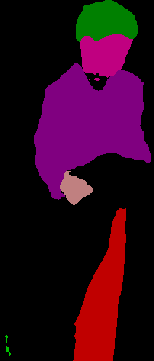

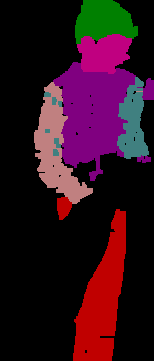

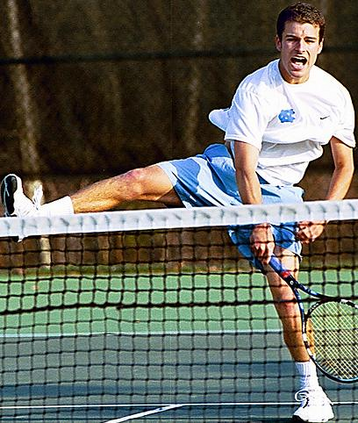

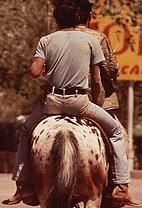

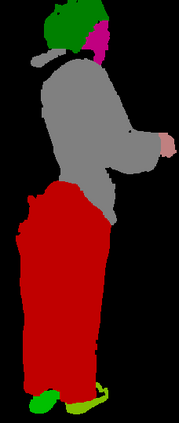

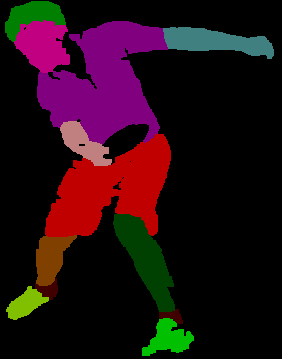

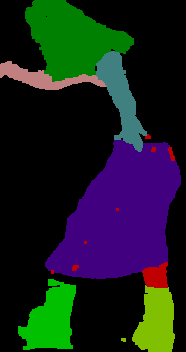

Labeling pixel-level masks for fine-grained semantic segmentation tasks, e.g. human parsing, remains challenging. Ambiguous boundaries between semantic parts, and categories with similar appearance, are often confusing, which introduces unexpected noise into ground-truth masks. To tackle the problem of learning with label noise, this work introduces a purification strategy, called Self-Correction for Human Parsing (SCHP), that progressively improves the reliability of both the supervisory labels and the learned models. In particular, starting from a model trained with inaccurate annotations as initialization, we design a cyclical learning scheduler that infers more reliable pseudo-masks by iteratively aggregating the currently learned model with the former optimal one in an online manner. The correspondingly corrected labels can in turn further boost model performance. In this way, the models and the labels reciprocally become more robust and accurate over the self-correction learning cycles. Benefiting from SCHP, we achieve the best performance on two popular single-person human parsing benchmarks, the LIP and Pascal-Person-Part datasets. Our overall system ranked 1st in the CVPR 2019 LIP Challenge. Code is available at https://github.com/PeikeLi/Self-Correction-Human-Parsing.
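The self-correction cycle described above can be sketched in a few lines. This is a minimal, framework-free illustration under stated assumptions, not the released implementation: models are toy lists of floats, "aggregating the current model with the former one" is assumed to be an equal-weight running average of cycle-end weights (in the style of stochastic weight averaging), label correction is assumed to average soft pseudo-masks the same way, and the cosine-restart schedule shape and the helpers `train_cycle` and `predict` are hypothetical placeholders.

```python
import math
from typing import Callable, List, Tuple

Weights = List[float]           # toy stand-in for flattened model parameters
SoftLabels = List[List[float]]  # per-pixel class probabilities (soft pseudo-masks)


def cyclical_lr(epoch: int, cycle_len: int, lr_max: float, lr_min: float) -> float:
    """Cosine annealing that restarts every `cycle_len` epochs (assumed schedule shape)."""
    t = epoch % cycle_len
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * t / cycle_len))


def running_average(avg: List[float], new: List[float], m: int) -> List[float]:
    """Fold `new` into `avg`, where `avg` is already the mean of m snapshots."""
    return [(m * a + n) / (m + 1) for a, n in zip(avg, new)]


def self_correction(
    init_weights: Weights,
    init_labels: SoftLabels,
    train_cycle: Callable[[Weights, SoftLabels], Weights],  # hypothetical: one LR cycle
    predict: Callable[[Weights], SoftLabels],               # hypothetical: inference pass
    num_cycles: int,
) -> Tuple[Weights, SoftLabels]:
    weights = list(init_weights)             # weights that keep training across cycles
    avg_weights = list(init_weights)         # aggregated model: mean of 1 snapshot so far
    labels = [list(p) for p in init_labels]  # start from the noisy annotations
    for m in range(1, num_cycles + 1):
        # 1. Train for one learning-rate cycle on the current (corrected) labels.
        weights = train_cycle(weights, labels)
        # 2. Model aggregation: online mean of the initial model and every cycle-end model.
        avg_weights = running_average(avg_weights, weights, m)
        # 3. Label correction: online mean of the original labels and the aggregated
        #    model's predictions from every cycle so far.
        preds = predict(avg_weights)
        labels = [running_average(y, p, m) for y, p in zip(labels, preds)]
    return avg_weights, labels


# Toy usage: each "cycle" shifts the weights by +1, and the model always predicts 50/50.
final_w, final_y = self_correction(
    [0.0],
    [[1.0, 0.0]],
    train_cycle=lambda w, y: [x + 1.0 for x in w],
    predict=lambda w: [[0.5, 0.5]],
    num_cycles=2,
)
print(final_w, final_y)  # weights average to [1.0]; the one-hot label softens toward 2/3 vs 1/3
```

The equal-weight average means no single cycle, including the one trained on the noisiest labels, dominates the aggregated model or the corrected masks. A weighted or exponential average would be an equally plausible reading of "aggregating" and is a drop-in change to `running_average`.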
