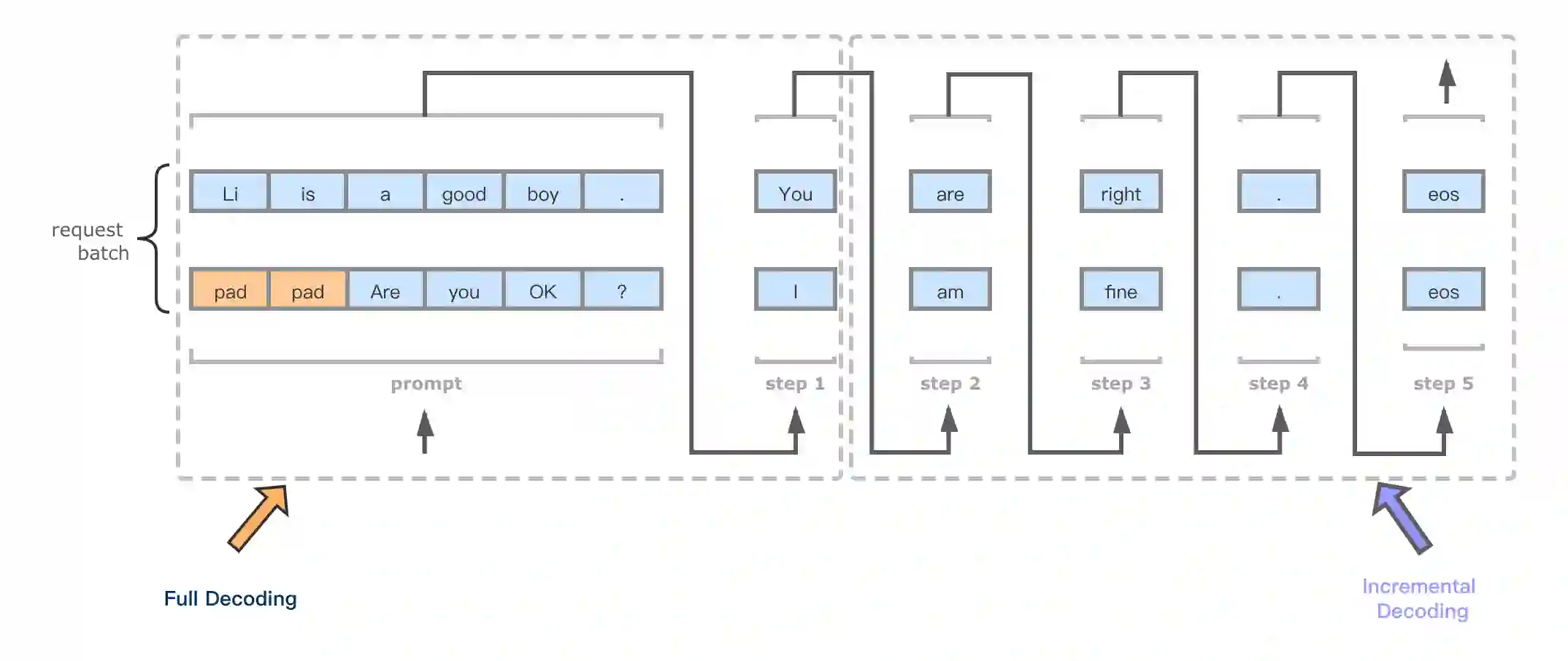

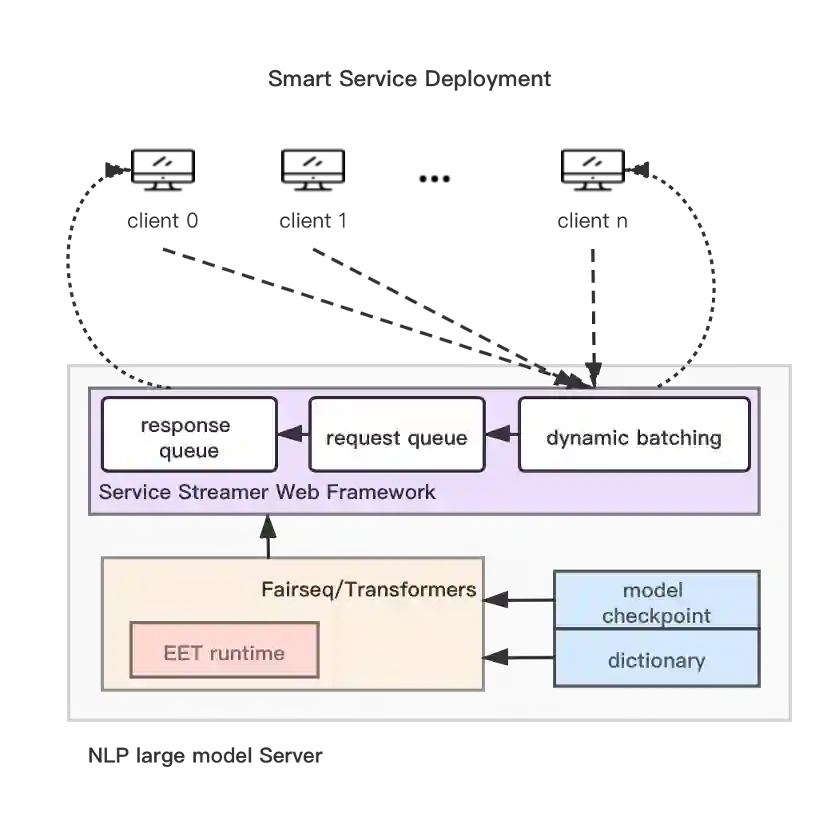

Large-scale transformer-based models have recently proven effective on a variety of tasks across many domains. Nevertheless, putting them into production is very expensive and requires comprehensive optimization to reduce inference costs. This paper introduces a series of transformer inference optimization techniques at both the algorithm level and the hardware level. These techniques include a pre-padding decoding mechanism that improves token parallelism for text generation, and highly optimized kernels designed for very long input lengths and large hidden sizes. On this basis, we propose a transformer inference acceleration library, Easy and Efficient Transformer (EET), which significantly outperforms existing libraries. Compared to Faster Transformer v4.0's implementation of a GPT-2 layer on A100, EET achieves a state-of-the-art speedup of 1.5-4.5x, depending on context length. EET is available at https://github.com/NetEase-FuXi/EET. A demo video is available at https://youtu.be/22UPcNGcErg.
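The abstract names a pre-padding decoding mechanism but does not describe it. As a rough illustration only, the sketch below assumes that "pre-padding" means placing padding tokens before each prompt rather than after it, so that every sequence in a batch ends at the same column and each newly generated token is appended at the same position. This is not EET's implementation; `PAD_ID`, `pre_pad`, and `append_step` are hypothetical names chosen for the example.

```python
from typing import List, Tuple

PAD_ID = 0  # hypothetical padding token id, chosen only for illustration


def pre_pad(prompts: List[List[int]],
            pad_id: int = PAD_ID) -> Tuple[List[List[int]], List[List[int]]]:
    """Left-pad variable-length prompts to a common length.

    Returns (padded_ids, attention_mask), where the mask holds 1 for real
    tokens and 0 for padding positions.
    """
    max_len = max(len(p) for p in prompts)
    padded, mask = [], []
    for p in prompts:
        n_pad = max_len - len(p)
        padded.append([pad_id] * n_pad + list(p))   # padding goes in front
        mask.append([0] * n_pad + [1] * len(p))
    return padded, mask


def append_step(padded: List[List[int]],
                mask: List[List[int]],
                next_tokens: List[int]) -> None:
    """Append one generated token per sequence, in place.

    Because all rows end at the same column, every row grows at the same
    index and the batch stays rectangular without re-padding.
    """
    for row, m, tok in zip(padded, mask, next_tokens):
        row.append(tok)
        m.append(1)


# Three prompts of different lengths, as token ids.
prompts = [[11, 12, 13, 14], [21, 22], [31]]
ids, mask = pre_pad(prompts)
for row in ids:
    print(row)
```

With padding in front, the last column of the batch always holds a real token for every sequence, so a decoding step can process all sequences at one shared position. With padding at the end, the next-token position would differ per sequence.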
