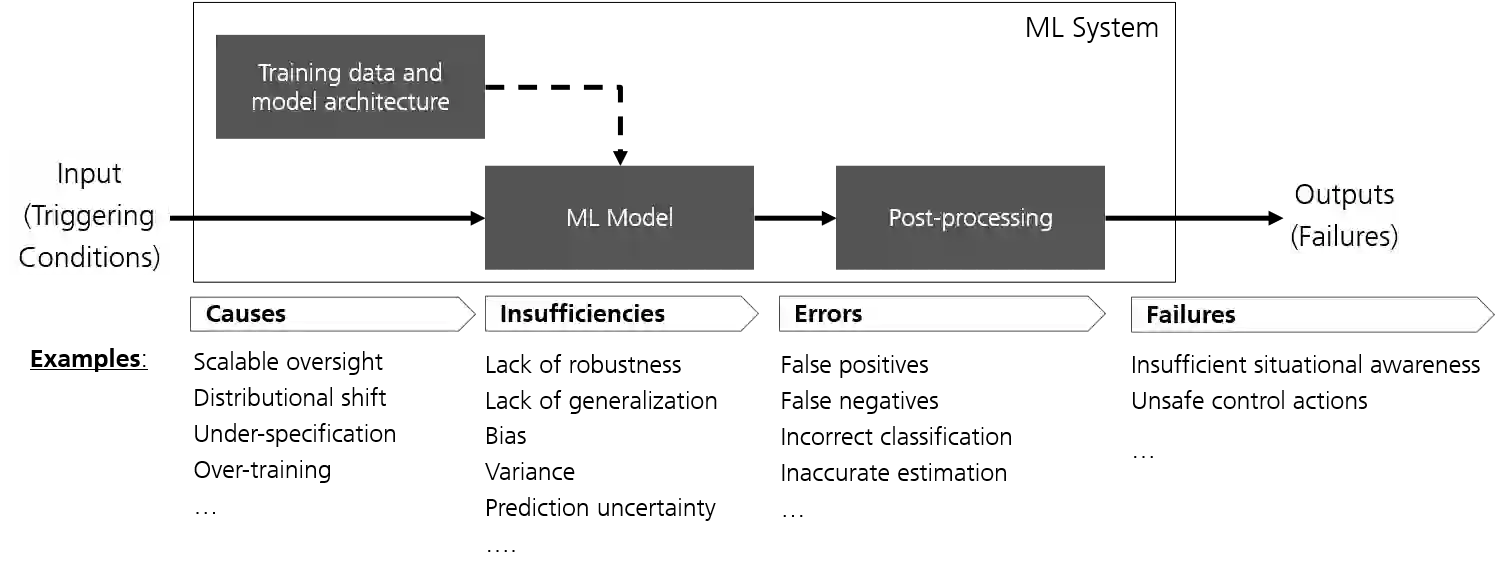

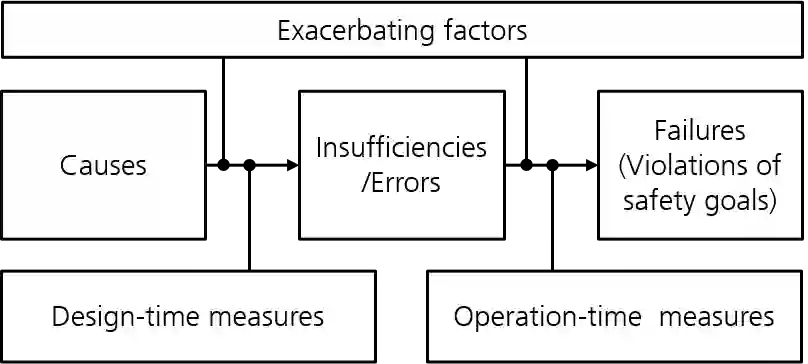

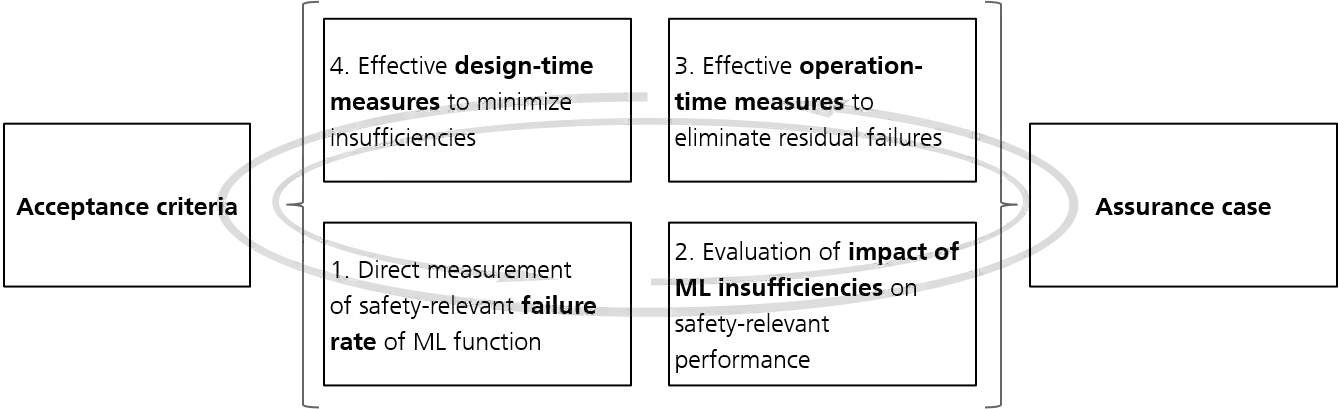

This paper proposes a framework, based on a causal model of safety, upon which effective safety assurance cases for ML-based applications can be built. In doing so, we build upon established principles of safety engineering as well as previous work on structuring assurance arguments for ML. The paper defines four categories of safety case evidence and a structured analysis approach within which this evidence can be effectively combined. Where appropriate, abstract formalisations of these contributions are used to illustrate the causalities they evaluate, their contributions to the safety argument, and desirable properties of the evidence. Based on the proposed framework, progress in this area is re-evaluated and a set of future research directions is proposed so that tangible progress can be made in this field.
