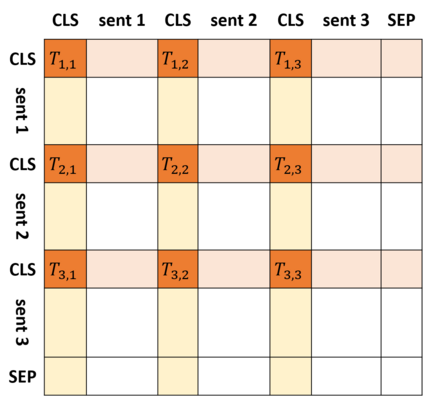

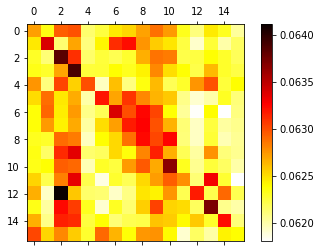

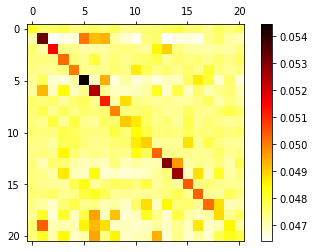

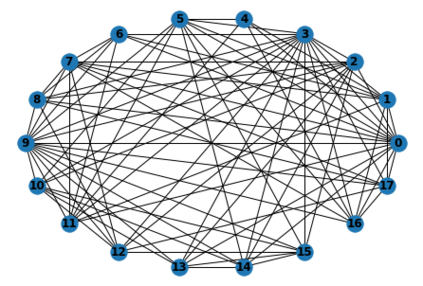

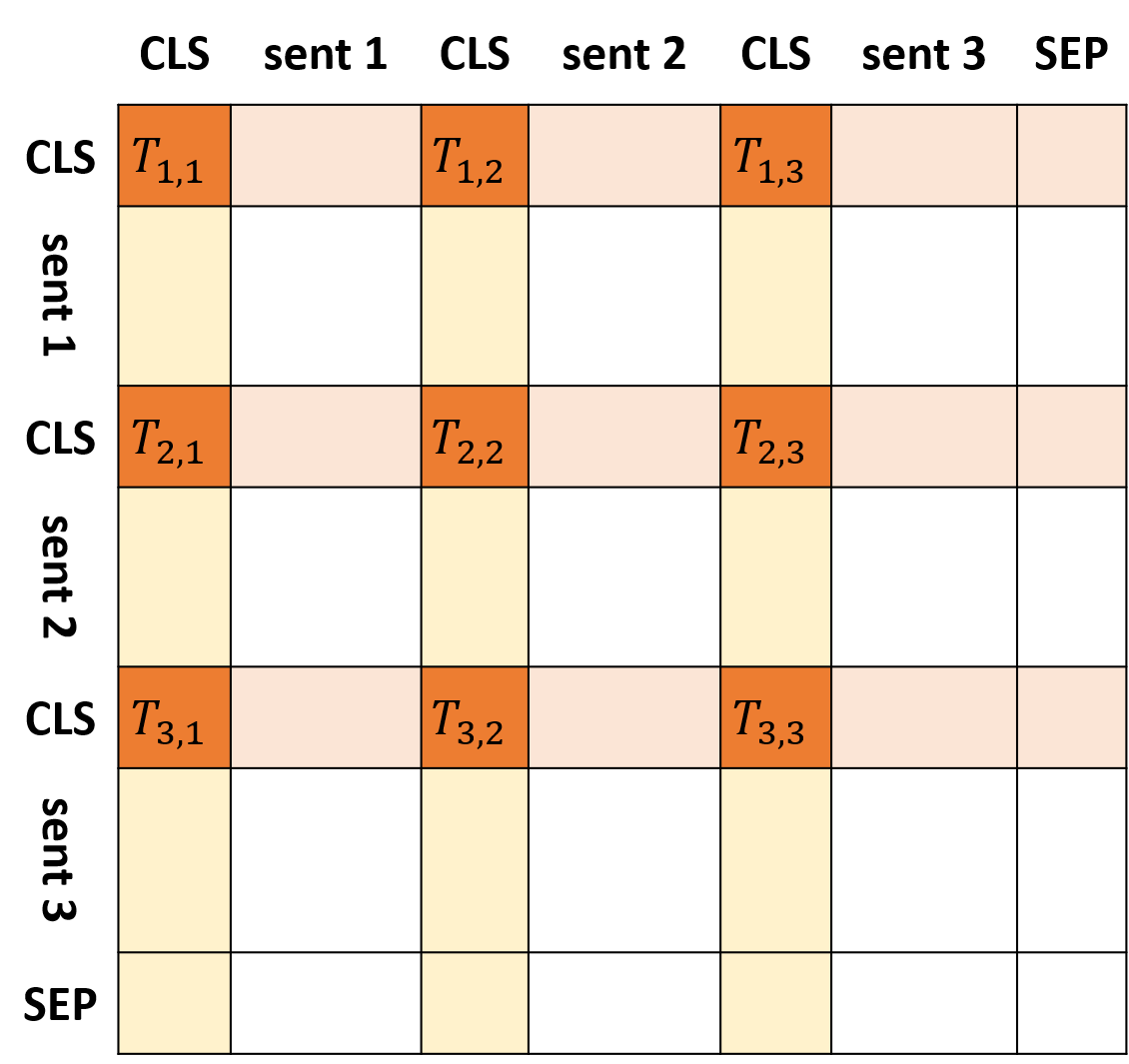

Representing text as a graph for automatic text summarization has been investigated for over ten years. With the development of attention mechanisms and the Transformer in natural language processing (NLP), it has become possible to connect the graph structure of a text with its attention structure. In this paper, a sentence-level attention matrix over the whole text, which can be produced by a pre-trained language model, is adopted as the weighted adjacency matrix of a fully connected graph of the text. A graph convolutional network (GCN) is then applied to this text graph to classify each node and identify the salient sentences of the text. Experimental results on two typical datasets demonstrate that the proposed model achieves competitive results compared with state-of-the-art models.
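The abstract does not say how token-level attention is turned into sentence-to-sentence weights or which GCN variant is used, so the following is only a minimal NumPy sketch under stated assumptions, not the authors' model. It assumes sentence attention is the mean token attention between two sentences' token spans, symmetrizes that matrix so the standard GCN normalization $D^{-1/2}(A+I)D^{-1/2}$ (Kipf and Welling) applies, and scores each sentence node with a two-layer GCN and a sigmoid. The helper names (`sentence_attention`, `normalize_adjacency`, `gcn_forward`, `extract_summary`) are hypothetical, and the random weights stand in for parameters that would normally be learned from sentence-level salience labels.

```python
import numpy as np


def sentence_attention(token_attn, spans):
    """Pool a (T, T) token attention matrix into an (n, n) sentence matrix.

    Assumption: entry [i, j] is the mean attention from the tokens of
    sentence i to the tokens of sentence j; spans are (start, end) ranges.
    """
    n = len(spans)
    S = np.zeros((n, n))
    for i, (si, ei) in enumerate(spans):
        for j, (sj, ej) in enumerate(spans):
            S[i, j] = token_attn[si:ei, sj:ej].mean()
    return S


def normalize_adjacency(A):
    """Symmetrize A, add self-loops, and return D^{-1/2} (A + I) D^{-1/2}."""
    A = 0.5 * (A + A.T)              # attention is asymmetric; GCN norm assumes symmetry
    A_hat = A + np.eye(A.shape[0])   # self-loops keep each sentence's own features
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_forward(A, X, W1, W2):
    """Two-layer GCN over the fully connected sentence graph.

    Returns one probability per sentence node that it is salient.
    """
    A_norm = normalize_adjacency(A)
    H = np.maximum(A_norm @ X @ W1, 0.0)   # layer 1 with ReLU
    logits = (A_norm @ H @ W2).ravel()     # layer 2: one logit per node
    return 1.0 / (1.0 + np.exp(-logits))   # sigmoid


def extract_summary(scores, k):
    """Indices of the k highest-scoring sentences, in document order."""
    top = np.argsort(-scores)[:k]
    return sorted(top.tolist())


# Usage with random stand-in data (no pre-trained model or training here).
rng = np.random.default_rng(0)
T, n_sent, d, h = 12, 4, 8, 16
token_attn = rng.random((T, T))
token_attn /= token_attn.sum(axis=1, keepdims=True)  # rows sum to 1, like softmax
spans = [(0, 3), (3, 6), (6, 9), (9, 12)]            # four 3-token sentences
X = rng.standard_normal((n_sent, d))                 # stand-in sentence embeddings
W1 = rng.standard_normal((d, h)) * 0.1
W2 = rng.standard_normal((h, 1)) * 0.1

A = sentence_attention(token_attn, spans)
scores = gcn_forward(A, X, W1, W2)
summary = extract_summary(scores, k=2)
print(scores.round(3), summary)
```

Symmetrizing is one simple way to reconcile a directed attention matrix with the undirected GCN propagation rule; a directed normalization such as $D^{-1}A$ would be an equally plausible choice, and the source does not indicate which one the paper uses.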
