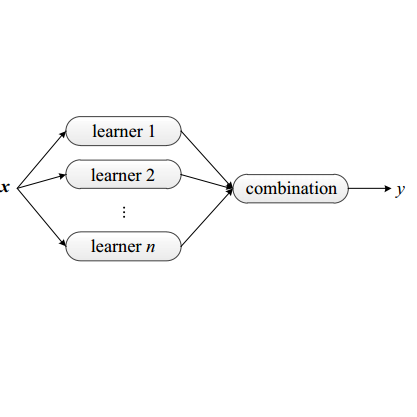

Model-free deep reinforcement learning (RL) has been successful in a range of challenging domains. However, some issues remain, such as stabilizing the optimization of nonlinear function approximators, preventing error propagation due to the Bellman backup in Q-learning, and exploring efficiently. To mitigate these issues, we present SUNRISE, a simple unified ensemble method that is compatible with various off-policy RL algorithms. SUNRISE integrates three key ingredients: (a) bootstrap with random initialization, which improves the stability of the learning process by training a diverse ensemble of agents, (b) weighted Bellman backups, which prevent error propagation in Q-learning by reweighting sample transitions based on uncertainty estimates from the ensemble, and (c) an inference method that selects actions using the highest upper-confidence bounds for efficient exploration. Our experiments show that SUNRISE significantly improves the performance of existing off-policy RL algorithms, such as Soft Actor-Critic and Rainbow DQN, on both continuous and discrete control tasks in both low-dimensional and high-dimensional environments. Our training code is available at https://github.com/pokaxpoka/sunrise.
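The three ingredients can be illustrated with a small, framework-free sketch. The abstract gives no formulas, so everything concrete here is an assumption made for illustration: the function names, the Bernoulli mask probability `p`, the sigmoid-shaped weight with its `temperature`, and the `ucb_lambda` bonus coefficient are hypothetical and are not taken from the released training code.

```python
import math
import random
from statistics import mean, pstdev


def bootstrap_masks(n_members, p=0.5, rng=random):
    """(a) Draw a 0/1 mask per ensemble member for one transition.

    A member only trains on transitions whose mask is 1, so members that
    also start from different random initializations see different data.
    """
    return [1 if rng.random() < p else 0 for _ in range(n_members)]


def backup_weight(target_qs, temperature=10.0):
    """(b) Weight for one transition's Bellman backup.

    target_qs holds each member's target Q-value for the transition.
    The weight is sigmoid(-std * temperature) + 0.5 (an assumed form):
    1.0 when members agree, approaching 0.5 as disagreement grows.
    """
    x = pstdev(target_qs) * temperature  # x >= 0 for temperature > 0
    e = math.exp(-x)                     # numerically safe for large x
    return e / (1.0 + e) + 0.5


def ucb_action(q_values_per_member, ucb_lambda=1.0):
    """(c) Pick the action with the highest upper-confidence bound.

    q_values_per_member[i][a] is member i's Q-value for action a.
    The score is the ensemble mean plus ucb_lambda times the ensemble
    standard deviation, so uncertain actions receive a bonus.
    """
    n_actions = len(q_values_per_member[0])
    scores = []
    for a in range(n_actions):
        qs = [member[a] for member in q_values_per_member]
        scores.append(mean(qs) + ucb_lambda * pstdev(qs))
    return max(range(n_actions), key=scores.__getitem__)
```

In a training loop under these assumptions, each member's squared Bellman error for a transition would be multiplied by both its bootstrap mask and the backup weight, while `ucb_action` would be used only when collecting data. Setting `ucb_lambda=0` reduces action selection to acting on the ensemble mean.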
