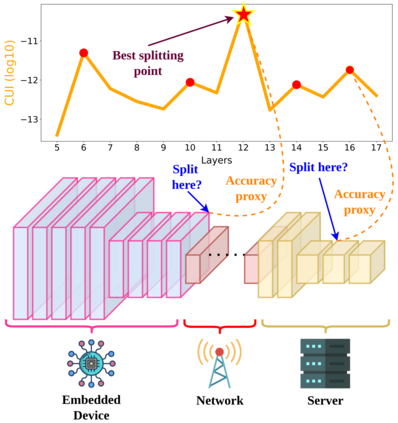

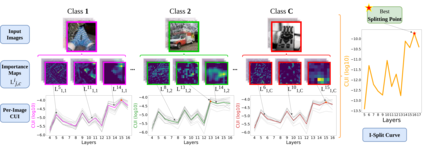

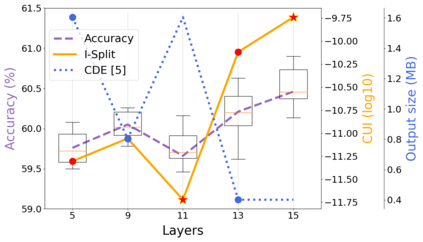

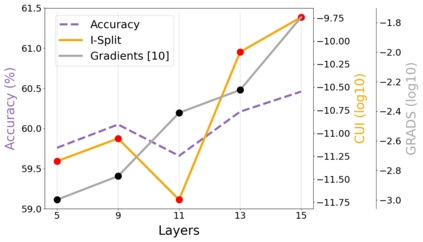

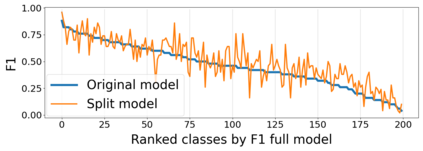

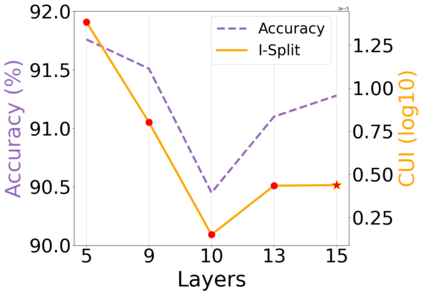

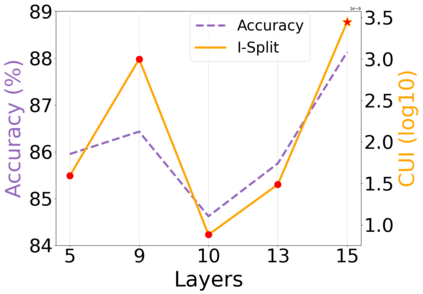

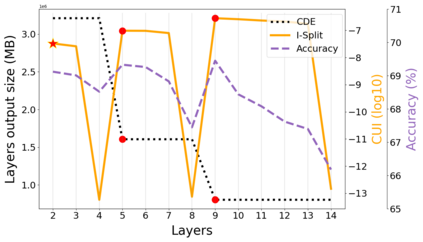

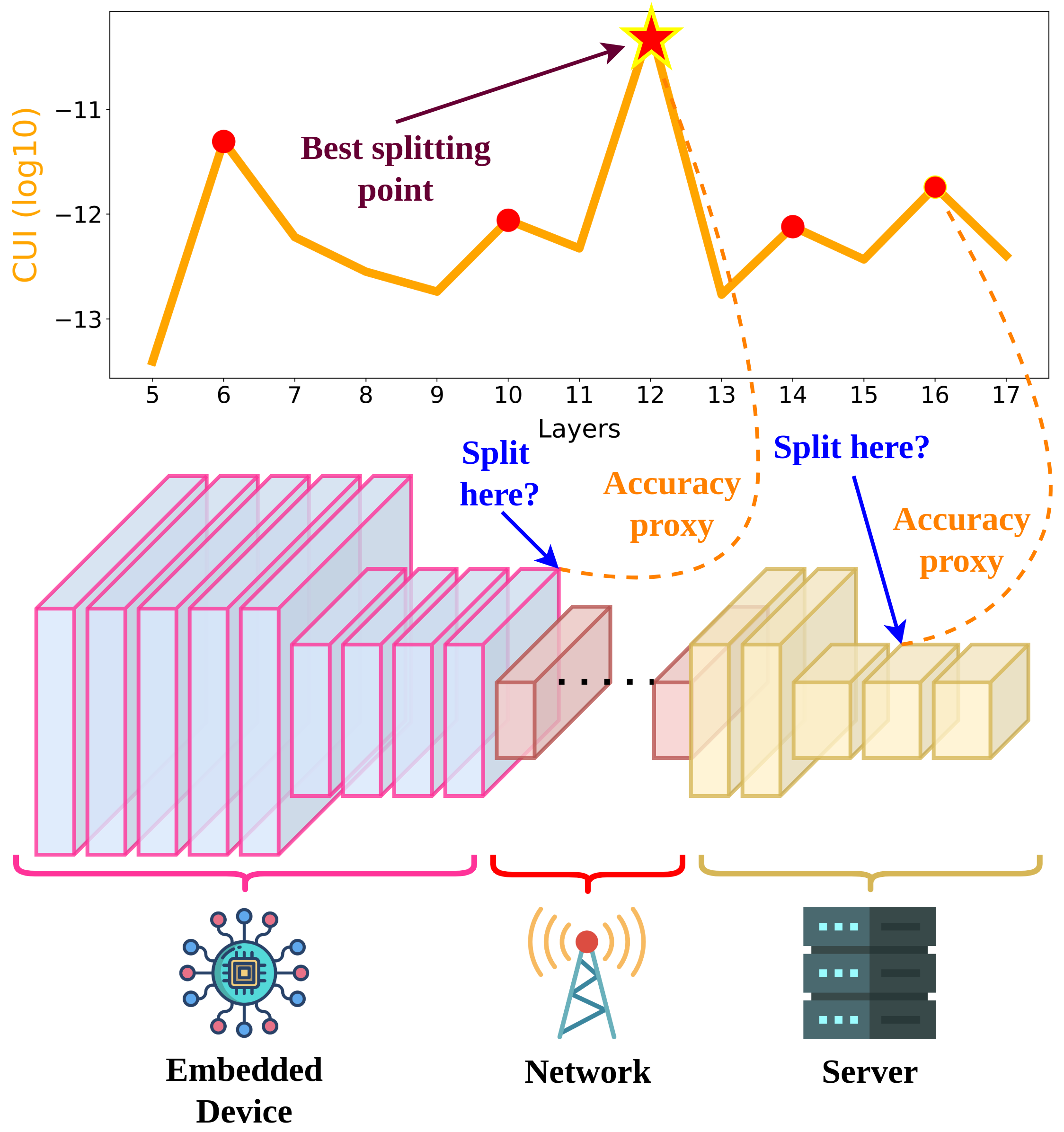

This work takes a substantial step in the field of split computing, i.e., the problem of how to split a deep neural network so that its early part is hosted on an embedded device and the rest on a server. So far, potential split locations have been identified by exploiting only architectural aspects, i.e., the layer sizes. Under this paradigm, the efficacy of a split in terms of accuracy can be evaluated only after the split has been performed and the entire pipeline retrained. This makes an exhaustive evaluation of all plausible splitting points prohibitively time-consuming. Here we show that not only does the architecture of the layers matter, but so does the importance of the neurons they contain. A neuron is important if its gradient with respect to the correct class decision is high. It follows that a split should be applied right after a layer with a high density of important neurons, in order to preserve the information flowing up to that point. Building on this idea, we propose Interpretable Split (I-SPLIT): a procedure that identifies the most suitable splitting points by reliably predicting how well a split will perform in terms of classification accuracy before it is actually implemented. As a further major contribution of I-SPLIT, we show that the best choice of splitting point for a multiclass categorization problem also depends on which specific classes the network has to deal with. Exhaustive experiments have been carried out on two networks, VGG16 and ResNet-50, and three datasets, Tiny-Imagenet-200, notMNIST, and Chest X-Ray Pneumonia. The source code is available at https://github.com/vips4/I-Split.
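The abstract does not spell out how neuron importance is turned into a ranking of splitting points. The following is a minimal, hypothetical sketch of the general idea, not the I-SPLIT implementation. It assumes a toy fully connected ReLU network without biases (the paper uses VGG16 and ResNet-50). It takes importance to be the absolute gradient of the correct-class logit with respect to each hidden neuron, averaged over samples. It also defines "density" as the fraction of a layer's neurons whose importance exceeds a threshold. The helper names (`neuron_importance`, `mean_importance`, `rank_split_points`), the threshold, and the toy weights are illustrative assumptions.

```python
"""Hypothetical sketch of gradient-based split-point ranking (not the I-SPLIT code).

Assumptions: a toy fully connected ReLU network given as a list of weight
matrices (no biases); neuron importance = |d logit[label] / d h|, averaged
over samples; density = fraction of a layer's neurons above a threshold.
"""
from typing import List, Optional, Sequence, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Matrix-vector product w @ x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def neuron_importance(weights: Sequence[Matrix], x: Vector, label: int) -> List[Vector]:
    """|gradient of the correct-class logit| w.r.t. each hidden layer's ReLU output."""
    # Forward pass, remembering each hidden layer's pre-activation.
    pre_acts: List[Vector] = []
    h = x
    for w in weights[:-1]:
        z = matvec(w, h)
        pre_acts.append(z)
        h = [max(0.0, a) for a in z]
    # d logit[label] / d h_last is the label's row of the output matrix.
    grad = list(weights[-1][label])
    grads = [grad]
    # Backpropagate through ReLU gates and weights to earlier hidden layers.
    for k in range(len(weights) - 2, 0, -1):
        gz = [g if z > 0 else 0.0 for g, z in zip(grad, pre_acts[k])]
        w = weights[k]  # maps hidden layer k-1 to pre-activation k
        grad = [sum(w[i][j] * gz[i] for i in range(len(w))) for j in range(len(w[0]))]
        grads.append(grad)
    grads.reverse()  # index 0 = first hidden layer
    return [[abs(g) for g in layer] for layer in grads]


def mean_importance(weights: Sequence[Matrix],
                    samples: Sequence[Tuple[Vector, int]]) -> List[Vector]:
    """Average neuron importance over (input, correct label) pairs."""
    total: Optional[List[Vector]] = None
    for x, label in samples:
        imp = neuron_importance(weights, x, label)
        if total is None:
            total = imp
        else:
            total = [[a + b for a, b in zip(ta, ia)] for ta, ia in zip(total, imp)]
    assert total is not None, "need at least one sample"
    return [[v / len(samples) for v in layer] for layer in total]


def rank_split_points(weights: Sequence[Matrix],
                      samples: Sequence[Tuple[Vector, int]],
                      threshold: float) -> List[Tuple[int, float]]:
    """Rank 'split after hidden layer k' by density of important neurons."""
    densities = [sum(s > threshold for s in layer) / len(layer)
                 for layer in mean_importance(weights, samples)]
    order = sorted(range(len(densities)), key=lambda k: densities[k], reverse=True)
    return [(k, densities[k]) for k in order]


# Toy network: 2 inputs -> 3 hidden -> 2 hidden -> 2 class logits.
W1 = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
W2 = [[1.0, 1.0, 1.0], [0.0, -1.0, 0.0]]
W3 = [[2.0, 0.0], [0.0, 3.0]]
net = [W1, W2, W3]

print(rank_split_points(net, [([1.0, 2.0], 0)], threshold=1.0))  # → [(0, 1.0), (1, 0.5)]
print(rank_split_points(net, [([1.0, 2.0], 1)], threshold=1.0))  # → [(1, 0.5), (0, 0.0)]
```

In this toy setting, the same input yields a different preferred split for class 0 (after the first hidden layer) than for class 1 (after the second). This loosely mirrors the claim that the best splitting point depends on which classes the network has to deal with. A real implementation would compute the gradients with an autograd framework over convolutional feature maps rather than by hand.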
