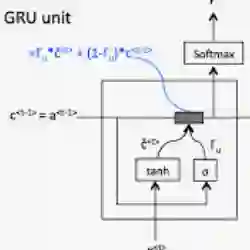

LSTMs and GRUs are the most common recurrent neural network architectures used to solve temporal sequence problems. The two architectures have different data flows built around a common component called the cell state (also referred to as the memory). We attempt to enhance this memory with a modification that we call the Mother Compact Recurrent Memory (MCRM). An MCRM is a nested LSTM-GRU architecture in which the LSTM cell state is the hidden state of an inner GRU. The concatenation of the LSTM's forget-gate and input-gate interactions is treated as the input to the GRU cell. Because of this nesting, MCRMs have a compact memory pattern consisting of neurons that act explicitly in both a long-term and a short-term fashion. On some specific tasks, empirical results show that MCRMs outperform previously used architectures.
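
The nesting described above can be illustrated with a single recurrent step. What follows is a minimal NumPy sketch under stated assumptions, not the authors' implementation: the abstract does not give the MCRM equations, so we assume the "forget-gate interaction" is f_t ⊙ c_{t-1} and the "input-gate interaction" is i_t ⊙ g_t (as in a standard LSTM), that the inner GRU uses the common convention c_t = (1 − z) ⊙ n + z ⊙ c_{t-1}, and that the outer output is h_t = o_t ⊙ tanh(c_t). The class names `GRUCell` and `MCRMCell` are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class GRUCell:
    """Plain GRU cell used as the inner memory (one common gating convention)."""

    def __init__(self, input_size, hidden_size, rng):
        s = 1.0 / np.sqrt(hidden_size)
        shape = (hidden_size, input_size + hidden_size)  # each gate acts on [x, h]
        self.W_z, self.W_r, self.W_n = (rng.uniform(-s, s, shape) for _ in range(3))
        self.b_z, self.b_r, self.b_n = (np.zeros(hidden_size) for _ in range(3))

    def step(self, x, h):
        xh = np.concatenate([x, h])
        z = sigmoid(self.W_z @ xh + self.b_z)  # update gate
        r = sigmoid(self.W_r @ xh + self.b_r)  # reset gate
        n = np.tanh(self.W_n @ np.concatenate([x, r * h]) + self.b_n)  # candidate
        return (1.0 - z) * n + z * h  # convex mix of candidate and old state


class MCRMCell:
    """Hypothetical MCRM step: an LSTM whose cell state is an inner GRU's hidden state."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = np.random.default_rng(seed)
        s = 1.0 / np.sqrt(hidden_size)
        self.H = hidden_size
        # Outer LSTM gates (forget, input, output, candidate) stacked in one matrix.
        self.W = rng.uniform(-s, s, (4 * hidden_size, input_size + hidden_size))
        self.b = np.zeros(4 * hidden_size)
        # Inner GRU input = concat(forget interaction, input interaction) -> size 2H.
        self.inner = GRUCell(2 * hidden_size, hidden_size, rng)

    def step(self, x, h_prev, c_prev):
        H = self.H
        a = self.W @ np.concatenate([x, h_prev]) + self.b
        f = sigmoid(a[0:H])          # forget gate
        i = sigmoid(a[H:2 * H])      # input gate
        o = sigmoid(a[2 * H:3 * H])  # output gate
        g = np.tanh(a[3 * H:4 * H])  # candidate values
        # ASSUMPTION: interactions are f*c_prev and i*g, concatenated as GRU input.
        gru_in = np.concatenate([f * c_prev, i * g])
        c = self.inner.step(gru_in, c_prev)  # new cell state = GRU hidden state
        h = o * np.tanh(c)
        return h, c


# Usage: run a random 5-step sequence of 3-dimensional inputs.
cell = MCRMCell(input_size=3, hidden_size=4)
h, c = np.zeros(4), np.zeros(4)
for x_t in np.random.default_rng(1).normal(size=(5, 3)):
    h, c = cell.step(x_t, h, c)
print(h.shape, c.shape)  # (4,) (4,)
```

Under these assumptions, the GRU's update gate decides, per neuron, how much of the previous cell state to keep versus overwrite, which is one way to read the claim that each memory neuron can behave in both a long-term and a short-term fashion.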
