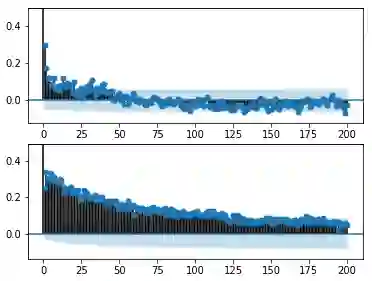

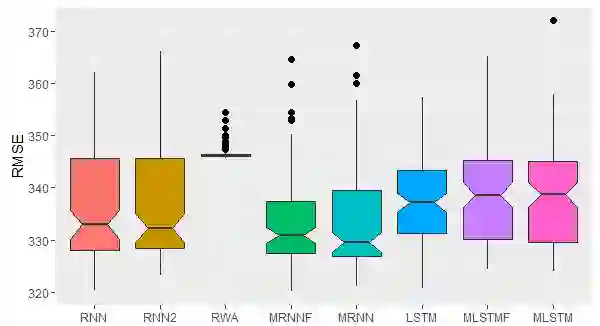

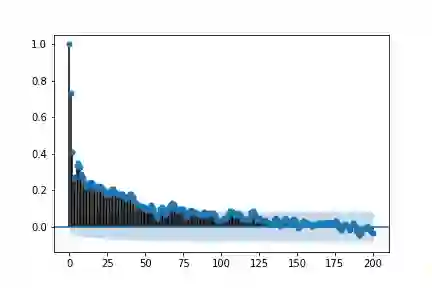

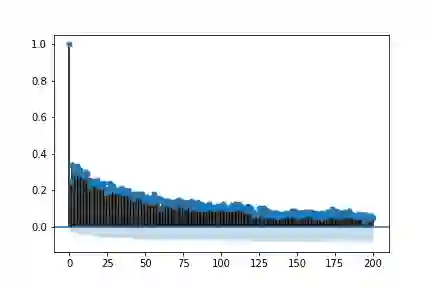

The LSTM network was proposed to overcome the difficulty of learning long-term dependence, and it has made significant advances in applications. With its successes and drawbacks in mind, this paper raises the question: do RNN and LSTM have long memory? We answer it partially by proving that RNN and LSTM do not have long memory from a statistical perspective. A new definition of long memory networks is further introduced, which requires the model weights to decay at a polynomial rate. To verify our theory, we convert RNN and LSTM into long memory networks with a minimal modification, and illustrate their superiority in modeling the long-term dependence of various datasets.
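The abstract contrasts networks whose weights on past inputs fade exponentially with a long-memory definition that requires polynomial decay. The sketch below illustrates that contrast under simplifying assumptions. A scalar linear recurrence stands in for an RNN, so the nonlinearity and gates are dropped. The ARFIMA-style fractional integration filter (1 - B)^(-d) serves as a familiar example of polynomially decaying weights. The function names and the values rho = 0.9 and d = 0.4 are hypothetical choices for illustration, not the paper's construction.

```python
import math


def rnn_lag_weights(rho: float, n: int) -> list[float]:
    """Lag weights of a scalar linear recurrence h_t = rho * h_{t-1} + x_t.

    Unrolling gives h_t = sum_k rho**k * x_{t-k}, so the weight on an input
    k steps in the past is rho**k: geometric (exponential) decay when |rho| < 1.
    Simplifying assumption: no nonlinearity, no gates, scalar state.
    """
    return [rho ** k for k in range(n)]


def fractional_lag_weights(d: float, n: int) -> list[float]:
    """Coefficients psi_k of (1 - B)^(-d) = sum_k psi_k B^k, B = backshift.

    Uses the recursion psi_0 = 1, psi_k = psi_{k-1} * (k - 1 + d) / k.
    For 0 < d < 0.5 these decay like k**(d - 1) / Gamma(d): polynomially.
    """
    weights = [1.0]
    for k in range(1, n):
        weights.append(weights[-1] * (k - 1 + d) / k)
    return weights


def loglog_slope(weights: list[float], k: int) -> float:
    """Local decay exponent log(w[2k] / w[k]) / log(2).

    Roughly constant in k for polynomial decay; for geometric decay it equals
    k * log(rho) / log(2), so its magnitude grows linearly with k.
    """
    return math.log(weights[2 * k] / weights[k]) / math.log(2)


if __name__ == "__main__":
    n = 2001  # indices 0..2000, enough for loglog_slope at k = 1000
    geo = rnn_lag_weights(rho=0.9, n=n)
    frac = fractional_lag_weights(d=0.4, n=n)
    for k in (10, 100, 1000):
        print(
            f"k={k:5d}  rnn weight={geo[k]:.3e}  fractional weight={frac[k]:.3e}  "
            f"slopes: rnn={loglog_slope(geo, k):8.2f}  "
            f"fractional={loglog_slope(frac, k):6.3f}"
        )
```

With |rho| < 1, the recurrence's local log-log slope grows in magnitude roughly in proportion to k, which is the signature of exponential decay. The fractional weights keep a slope near d - 1 = -0.6 at every scale, so inputs far in the past still carry non-negligible weight.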

翻译:LSTM网络旨在克服学习长期依赖性方面的困难,并在应用方面取得了显著进步。根据它的成功和缺点,本文提出了这样一个问题:RNN和LSTM是否有长期记忆?我们部分地通过从统计角度证明RNN和LSTM没有长期记忆来回答它。对长期记忆网络的新定义进一步引入,要求模型重量以多元速率衰减。为了核实我们的理论,我们通过微小的修改将RNN和LSTM转换为长期记忆网络,其优越性体现在各种数据集的长期依赖性模型中。