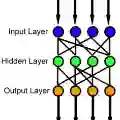

In this paper, we present a local geometric analysis to interpret how deep feedforward neural networks extract low-dimensional features from high-dimensional data. Our study shows that, in a local geometric region, the optimal weight in one layer of the neural network and the optimal feature generated by the previous layer form a low-rank approximation of a matrix that is determined by the Bayes action of this layer. This result holds (i) for both the output layer and the hidden layers of the neural network, and (ii) for neuron activation functions with non-vanishing gradients. We use two supervised learning problems to illustrate our results: neural-network-based maximum-likelihood classification (i.e., softmax regression) and neural-network-based minimum mean-square-error estimation. Experimental validation of these theoretical results is left to future work.
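The claim that an optimal weight/feature pair forms a low-rank approximation of a matrix rests on a classical fact (Eckart-Young): the best rank-k approximation of a matrix in Frobenius norm is its truncated SVD, which factors naturally into two pieces. The sketch below illustrates only that generic fact, under loudly stated assumptions. The toy matrix `b` is hand-picked and is *not* the Bayes-action matrix of the paper. The helper names `top_singular_triplet`, `low_rank_factors` and `frobenius_error`, and the choice to call one factor "weights" `W` and the other "features" `F`, are hypothetical illustrations, not the authors' construction. Singular vectors are found by power iteration with deflation to stay within the standard library.

```python
import math
import random

Matrix = list[list[float]]


def top_singular_triplet(b: Matrix, iters: int = 500, seed: int = 0):
    """Power iteration on B^T B; returns (sigma, u, v) with B v ~= sigma * u."""
    m, n = len(b), len(b[0])
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(n)]  # positive random start vector
    for _ in range(iters):
        u = [sum(b[i][j] * v[j] for j in range(n)) for i in range(m)]  # u = B v
        v = [sum(b[i][j] * u[i] for i in range(m)) for j in range(n)]  # v = B^T u
        norm = math.sqrt(sum(x * x for x in v))
        if norm == 0.0:  # B is zero: no component left to extract
            return 0.0, [0.0] * m, [0.0] * n
        v = [x / norm for x in v]
    u = [sum(b[i][j] * v[j] for j in range(n)) for i in range(m)]
    sigma = math.sqrt(sum(x * x for x in u))
    if sigma > 0.0:
        u = [x / sigma for x in u]
    return sigma, u, v


def low_rank_factors(b: Matrix, k: int):
    """Rank-k truncated SVD written as B ~= W F (hypothetical weight/feature split).

    W is m x k with columns sigma_i * u_i; F is k x n with rows v_i.
    """
    m, n = len(b), len(b[0])
    residual = [row[:] for row in b]
    w_cols, f_rows = [], []
    for _ in range(k):
        sigma, u, v = top_singular_triplet(residual)
        w_cols.append([sigma * x for x in u])
        f_rows.append(v)
        # Deflation: subtract the rank-1 component just found.
        for i in range(m):
            for j in range(n):
                residual[i][j] -= sigma * u[i] * v[j]
    w = [[w_cols[c][i] for c in range(k)] for i in range(m)]
    return w, f_rows


def frobenius_error(b: Matrix, w: Matrix, f: Matrix) -> float:
    """Frobenius norm of B - W F."""
    k = len(f)
    return math.sqrt(sum(
        (b[i][j] - sum(w[i][c] * f[c][j] for c in range(k))) ** 2
        for i in range(len(b)) for j in range(len(b[0]))))


# Toy rank-2 matrix: outer([1,2,0],[1,1,0,0]) + outer([0,0,1],[0,0,1,-1]),
# with singular values sqrt(10) and sqrt(2).
b = [[1.0, 1.0, 0.0, 0.0],
     [2.0, 2.0, 0.0, 0.0],
     [0.0, 0.0, 1.0, -1.0]]
w1, f1 = low_rank_factors(b, 1)
print(frobenius_error(b, w1, f1))  # close to sqrt(2), the discarded singular value
w2, f2 = low_rank_factors(b, 2)
print(frobenius_error(b, w2, f2))  # close to 0: rank 2 recovers b
```

With k = 1 the residual equals the discarded singular value, as Eckart-Young predicts; with k = 2 the factorization is exact because the toy matrix has rank 2.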
