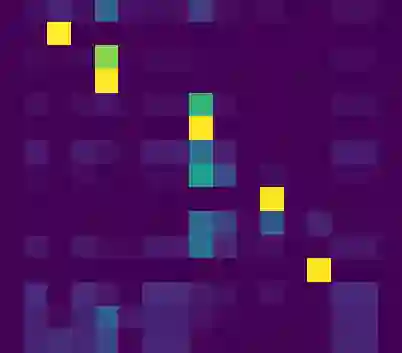

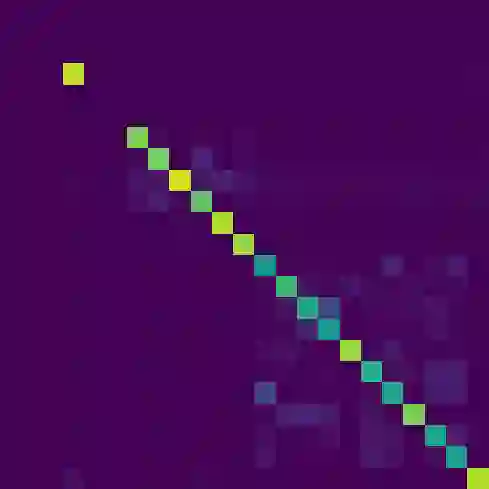

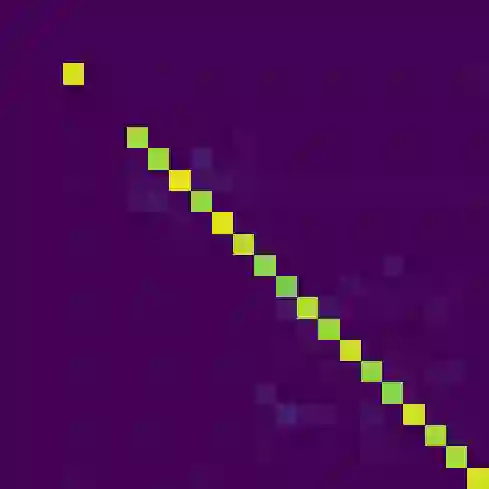

The Transformer architecture is shown to provide a powerful end-to-end framework for building expression trees from online handwritten gestures corresponding to glyph strokes. In particular, the attention mechanism successfully encodes, learns and enforces the underlying syntax of expressions, producing latent representations that are decoded to the exact mathematical expression tree and that remain robust to ablated inputs and unseen glyphs. For the first time, the encoder is fed with spatio-temporal data tokens that potentially form an infinitely large vocabulary, an approach with applications beyond online gesture recognition. A new supervised dataset of online handwriting gestures is provided for training models on generic handwriting recognition tasks, and a new metric is proposed for evaluating the syntactic correctness of the output expression trees. A small Transformer model suitable for edge inference was trained to an average normalised Levenshtein accuracy of 94%, with 94% of predictions forming valid postfix (RPN) tree representations.
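The abstract reports two numbers: a normalised Levenshtein accuracy and the share of predictions that form a valid postfix (RPN) tree. It does not define either computation, so the following is only a minimal sketch of one plausible reading, not the authors' metric. It assumes accuracy = 1 - d / max(|pred|, |target|) over output tokens, and that an RPN sequence is valid when a stack-depth scan never underflows and ends with exactly one tree. The `ARITY` table and the token names are hypothetical.

```python
from typing import Dict, Sequence

def levenshtein(a: Sequence[str], b: Sequence[str]) -> int:
    """Edit distance between two token sequences (unit-cost insert/delete/substitute)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,              # delete x
                            curr[j - 1] + 1,          # insert y
                            prev[j - 1] + (x != y)))  # substitute (free if equal)
        prev = curr
    return prev[-1]

def normalised_levenshtein_accuracy(pred: Sequence[str], target: Sequence[str]) -> float:
    """ASSUMED normalisation: 1 - distance / length of the longer sequence."""
    longest = max(len(pred), len(target))
    if longest == 0:
        return 1.0  # two empty sequences are treated as identical
    return 1.0 - levenshtein(pred, target) / longest

# HYPOTHETICAL arity table; any token not listed is treated as an operand (leaf).
ARITY: Dict[str, int] = {"+": 2, "-": 2, "*": 2, "/": 2, "^": 2, "=": 2,
                         "sqrt": 1, "neg": 1}

def is_valid_rpn(tokens: Sequence[str], arity: Dict[str, int] = ARITY) -> bool:
    """True if the postfix token sequence encodes exactly one well-formed tree."""
    depth = 0  # number of complete subtrees currently on the stack
    for tok in tokens:
        n = arity.get(tok, 0)
        if depth < n:        # operator would pop more subtrees than exist
            return False
        depth += 1 - n       # pop n subtrees, push one combined subtree
    return depth == 1        # exactly one root left means a single tree
```

For example, with `pred = "x 2 ^ 1 +".split()` and `target = "x 2 ^ 1 -".split()`, one substitution over five tokens gives an accuracy of 0.8, and `is_valid_rpn(pred)` is `True`, whereas `["x", "+"]` underflows and `["x", "y"]` leaves two unconnected roots. Tracking only the stack depth, rather than building the tree, is enough to decide syntactic validity and keeps the check linear in the sequence length.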

翻译:变换器结构显示提供了一个强大的框架, 用于从在线手写手势上构建表达式树的端到端模型。 特别是, 关注机制被成功用于编译、 学习和执行以下表达式的基本语法, 以生成正确解码到精确的数学表达式树的潜在表达式, 以强固性来整合输入和看不见的晶体。 编码器第一次被注入了可能形成无限大词汇的spatio- 时空数据符号, 从而找到超出在线手势识别的应用程序 。 为通用笔迹识别任务的培训模型提供了新的在线笔迹手法手法手法手法手法标识数据集, 并为评价输出表达式树的合成正确性提出了新的衡量标准。 适合边缘引力的小型变异器模型被成功训练为平均正常的94% Leveshtein 精确度, 导致94%的预测结果为有效后fix RPN 树代表值。