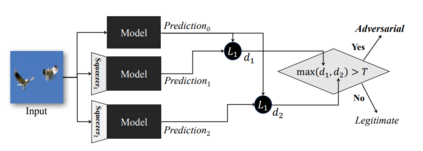

Machine learning algorithms are used to construct a mathematical model for a system based on training data. Such a model is capable of making highly accurate predictions without being explicitly programmed to do so. These techniques have many applications across the modern digital economy and artificial intelligence. More importantly, these methods are essential for a rapidly increasing number of safety-critical applications such as autonomous vehicles and intelligent defense systems. However, emerging adversarial learning attacks pose a serious security threat that greatly undermines such systems. These attacks are classified into four types: evasion (manipulating data to avoid detection), poisoning (injecting malicious training samples to disrupt retraining), model stealing (extraction), and inference (leveraging over-generalization on training data). Understanding these types of attacks is a crucial first step toward developing effective countermeasures. This paper provides a detailed tutorial on the principles of adversarial machine learning, explains the different attack scenarios, and gives in-depth insight into state-of-the-art defense mechanisms against this rising threat.
