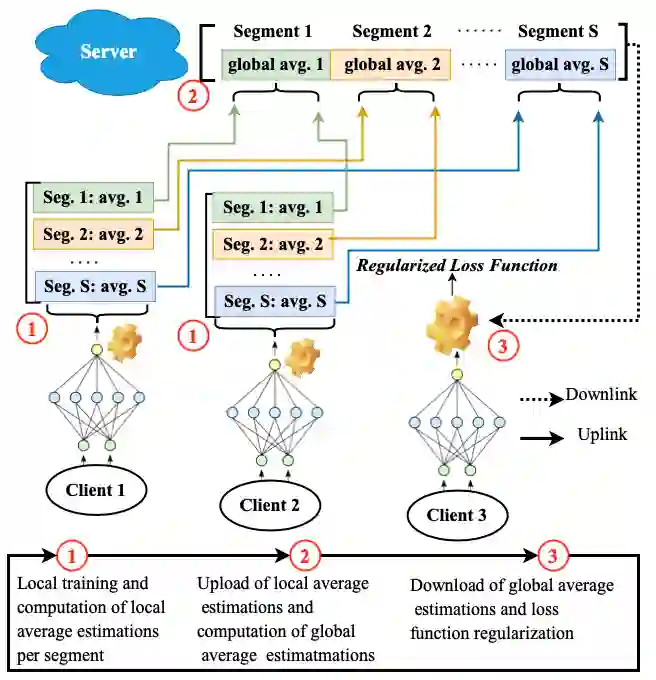

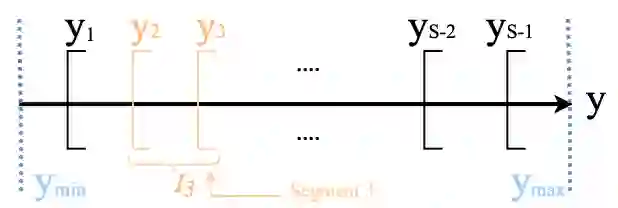

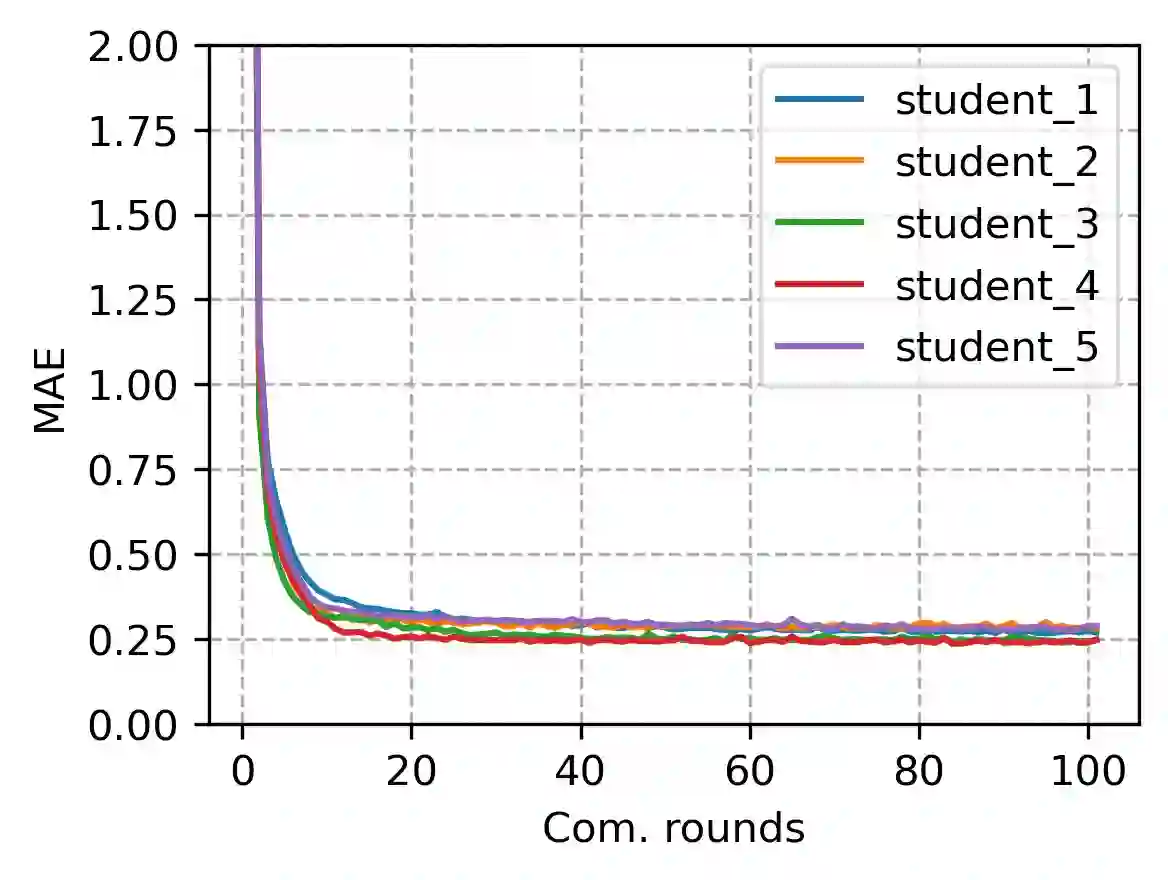

The federated distillation (FD) paradigm has recently been proposed as a promising alternative to federated learning (FL), especially in wireless sensor networks with limited communication resources. However, all state-of-the-art FD algorithms are designed only for classification tasks, and less attention has been given to regression tasks. In this work, we propose an FD framework that properly operates on regression learning problems. We then present a use-case implementation: an indoor localization system that shows a good trade-off between communication load and accuracy compared to FL-based indoor localization. With our proposed framework, we reduce the number of transmitted bits by up to 98%. Moreover, we show that the proposed framework is much more scalable than FL, and is thus more likely to cope with the expansion of wireless networks.
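To give a rough sense of where the communication savings of FD over FL come from, the sketch below compares per-round uplink payloads under simple assumptions. It assumes that an FL client uploads every model parameter, while an FD client uploads only its model's predictions on a shared set of proxy samples. This is one common FD variant and not necessarily the exact scheme proposed in this work. The function names and all numeric values are hypothetical and chosen for illustration only; they are not taken from the paper.

```python
def fl_bits_per_round(num_params: int, bits_per_value: int = 32) -> int:
    """Uplink bits for one FL client per round: one value per model parameter."""
    return num_params * bits_per_value


def fd_bits_per_round(num_proxy_samples: int, output_dim: int,
                      bits_per_value: int = 32) -> int:
    """Uplink bits for one FD client per round (assumed variant):
    one model output vector per shared proxy sample."""
    return num_proxy_samples * output_dim * bits_per_value


# Hypothetical values for illustration only (not from the paper).
num_params = 100_000        # size of the local regression model
num_proxy_samples = 2_500   # shared samples on which outputs are exchanged
output_dim = 2              # e.g. a 2-D (x, y) position estimate

fl_bits = fl_bits_per_round(num_params)
fd_bits = fd_bits_per_round(num_proxy_samples, output_dim)
reduction = 1 - fd_bits / fl_bits

print(f"FL uplink per round: {fl_bits} bits")
print(f"FD uplink per round: {fd_bits} bits")
print(f"Relative reduction:  {reduction:.1%}")
```

Under these assumptions the FD payload depends on the number of proxy samples and the output dimension rather than on the model size, so it stays constant as the model grows. This is consistent with the scalability argument made above, although the actual savings depend on the specific protocol and settings used.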
