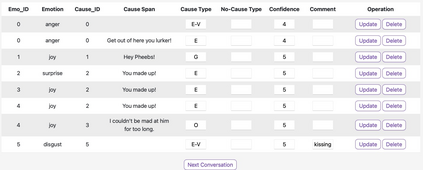

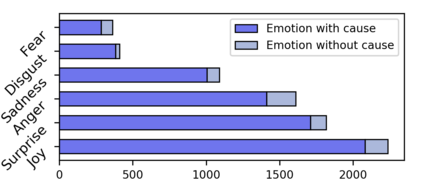

Emotion cause analysis has received considerable attention in recent years. Previous studies have focused primarily on extracting emotion causes from text, such as news articles and microblogs. Discovering emotions and their causes in conversations is also of interest. Because conversation in its natural form is multimodal, many studies have addressed multimodal emotion recognition in conversations, but multimodal emotion cause analysis remains largely unexplored. In this work, we introduce a new task named Multimodal Emotion-Cause Pair Extraction in Conversations, which aims to jointly extract emotions and their associated causes from conversations expressed in multiple modalities (text, audio and video). We accordingly construct a multimodal conversational emotion cause dataset, Emotion-Cause-in-Friends, which contains 9,272 multimodal emotion-cause pairs annotated on 13,509 utterances from the sitcom Friends. Finally, we benchmark the task with a baseline system that incorporates multimodal features for emotion-cause pair extraction. Preliminary experimental results demonstrate the potential of multimodal information fusion for discovering both emotions and causes in conversations.
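To make the task's input and output concrete, here is a minimal sketch, not the authors' baseline. The input is a conversation of utterances, each carrying text, audio and video features. The output is a set of (emotion utterance, cause utterance) index pairs. Everything below is a hypothetical illustration: the `Utterance` fields, the `fused_features` concatenation, the `pair_score` callable, the `threshold` and the `max_distance` window are assumptions. The sketch also takes per-utterance emotion labels as given, whereas a joint model would predict them together with the causes.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Utterance:
    speaker: str
    text: str
    # Hypothetical per-utterance feature vectors, one per modality.
    text_feat: List[float] = field(default_factory=list)
    audio_feat: List[float] = field(default_factory=list)
    video_feat: List[float] = field(default_factory=list)
    # Emotion label (e.g. "joy"); None stands for a neutral utterance.
    emotion: Optional[str] = None


def fused_features(u: Utterance) -> List[float]:
    """Early fusion by plain concatenation (an assumed, simplest-possible choice)."""
    return u.text_feat + u.audio_feat + u.video_feat


def extract_pairs(
    conv: List[Utterance],
    pair_score: Callable[[int, int], float],
    threshold: float = 0.5,
    max_distance: int = 3,
) -> List[Tuple[int, int]]:
    """Return (emotion_idx, cause_idx) pairs whose score reaches the threshold.

    Only emotional utterances are considered, and candidate causes are limited
    (by assumption) to the same utterance or up to `max_distance` earlier ones.
    """
    pairs: List[Tuple[int, int]] = []
    for i, u in enumerate(conv):
        if u.emotion is None:
            continue  # neutral utterances cannot anchor an emotion-cause pair
        for j in range(max(0, i - max_distance), i + 1):
            if pair_score(i, j) >= threshold:
                pairs.append((i, j))
    return pairs


if __name__ == "__main__":
    conv = [
        Utterance("Ross", "I got the job!", emotion="joy"),
        Utterance("Rachel", "That's amazing!", emotion="joy"),
        Utterance("Joey", "Cool."),
    ]
    # Toy scorer: utterance 0 is treated as the cause of every emotion.
    print(extract_pairs(conv, lambda i, j: 1.0 if j == 0 else 0.0))
```

In a trained system, `pair_score` would be a learned classifier over the fused features of both utterances. Here it is a stub, so the sketch only shows how candidate pairs are enumerated and filtered.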
