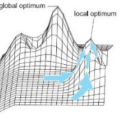

The ubiquity of Big Data and machine learning in society evinces the need for further investigation of their fundamental limitations. In this paper, we extend the "too-much-information-tends-to-behave-like-very-little-information" phenomenon to formal knowledge about lawlike universes and arbitrary collections of computably generated datasets. This gives rise to the simplicity bubble problem, in which a learning algorithm equipped with a formal theory can be deceived by a dataset into finding a locally optimal model that it deems to be the global one. However, the actual high-complexity globally optimal model unpredictably diverges from the found low-complexity local optimum. Zemblanity is defined as an undesirable but expected finding that reveals an underlying problem or negative consequence in a given model or theory, and that is in principle predictable provided the formal theory contains sufficient information. Therefore, we argue that there is a ceiling above which formal knowledge cannot further decrease the probability of zemblanitous findings, should the randomly generated data made available to the learning algorithm and formal theory be sufficiently large in comparison to their joint complexity.
