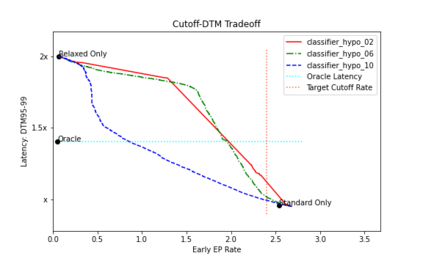

Current endpointing (EP) solutions are trained in a supervised framework, which prevents the model from incorporating feedback and improving in an online setting. In addition, the best configuration for an endpointing model is commonly found through a costly grid search. In this paper, we address adaptive endpointing by proposing an efficient method that selects an optimal endpointing configuration from utterance-level audio features in an online setting, without a hyperparameter grid search. Our method requires no ground-truth labels and learns online from reward signals alone. Specifically, we propose a deep contextual multi-armed bandit-based approach, which combines the representational power of neural networks with the action exploration behavior of Thompson modeling algorithms. We compare our approach to several baselines and show that our deep bandit models reduce early cutoff errors while maintaining low latency.
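To make the idea of a deep contextual bandit with Thompson-style exploration concrete, the following is a minimal sketch and not the implementation used in this paper. It assumes that the "Thompson modeling" mentioned above refers to Thompson sampling, and it uses a neural-linear simplification: a fixed random `tanh` layer stands in for a trained network body, with a Bayesian linear regression head per arm. The class `NeuralLinearThompson`, the function `run_simulation`, the two-arm setup, the 4-dimensional context, and the toy reward are all hypothetical choices made for illustration; in the endpointing setting each arm would correspond to one candidate endpointing configuration and the context to utterance-level audio features.

```python
import numpy as np


class NeuralLinearThompson:
    """Thompson sampling with a per-arm Bayesian linear head on fixed nonlinear features."""

    def __init__(self, n_arms, context_dim, hidden_dim=16, noise_var=0.25, seed=0):
        self.rng = np.random.default_rng(seed)
        # Assumption: a fixed random tanh layer stands in for a trained network body.
        self.W = self.rng.normal(size=(context_dim, hidden_dim)) / np.sqrt(context_dim)
        self.noise_var = noise_var
        d = hidden_dim + 1  # +1 for a constant bias feature
        # Per-arm posterior statistics: mean = A^{-1} b, covariance = noise_var * A^{-1}.
        self.A = np.stack([np.eye(d) for _ in range(n_arms)])
        self.b = np.zeros((n_arms, d))

    def features(self, context):
        return np.append(np.tanh(context @ self.W), 1.0)

    def select_arm(self, context):
        phi = self.features(context)
        scores = []
        for A, b in zip(self.A, self.b):
            mean = np.linalg.solve(A, b)
            # Draw w ~ N(mean, noise_var * A^{-1}) using the Cholesky factor of A.
            L = np.linalg.cholesky(A)
            z = self.rng.standard_normal(len(b))
            w = mean + np.sqrt(self.noise_var) * np.linalg.solve(L.T, z)
            scores.append(phi @ w)
        # Act greedily with respect to the sampled weights.
        return int(np.argmax(scores))

    def update(self, context, arm, reward):
        # Only the chosen arm receives feedback (bandit setting, no labels).
        phi = self.features(context)
        self.A[arm] += np.outer(phi, phi)
        self.b[arm] += reward * phi


def run_simulation(n_steps=2000, seed=0):
    """Toy environment: arm 1 is best when context[0] > 0, otherwise arm 0."""
    env_rng = np.random.default_rng(seed + 1)
    bandit = NeuralLinearThompson(n_arms=2, context_dim=4, seed=seed)
    rewards = np.zeros(n_steps)
    for t in range(n_steps):
        context = env_rng.normal(size=4)
        best_arm = int(context[0] > 0)
        arm = bandit.select_arm(context)
        # Hypothetical noisy reward: about 1 for the suitable configuration, about 0 otherwise.
        reward = float(arm == best_arm) + 0.1 * env_rng.normal()
        bandit.update(context, arm, reward)
        rewards[t] = reward
    return rewards
```

Calling `run_simulation()` returns the per-step rewards, so the average over the final steps can be compared with the average over the first steps to see whether the policy improves from reward feedback alone. Sampling the weights from the posterior, rather than using the posterior mean, is what provides exploration: arms with little data have wide posteriors and are occasionally selected.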
