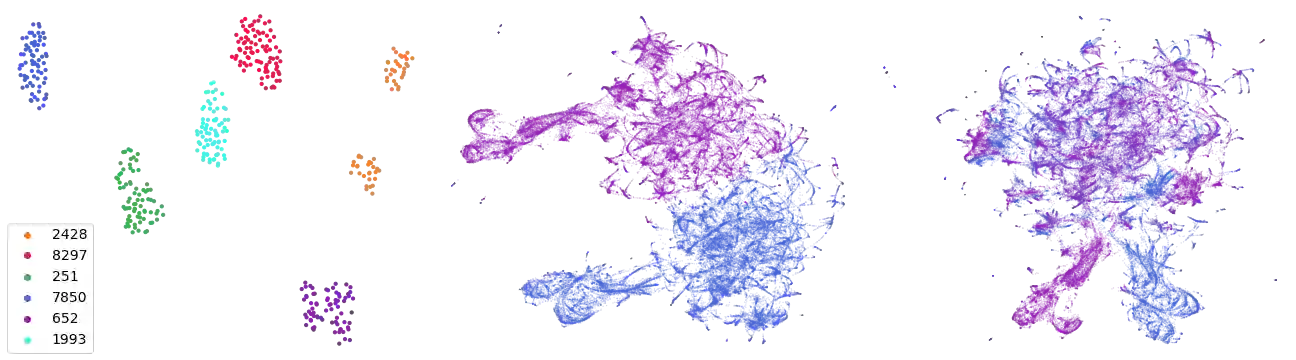

Contrastive predictive coding (CPC) aims to learn representations of speech by distinguishing future observations from a set of negative examples. Previous work has shown that linear classifiers trained on CPC features can accurately predict speaker and phone labels. However, it is unclear how the features actually capture speaker and phonetic information, and whether it is possible to normalize out the irrelevant details (depending on the downstream task). In this paper, we first show that the per-utterance mean of CPC features captures speaker information to a large extent. Concretely, we find that comparing means performs well on a speaker verification task. Next, probing experiments show that standardizing the features effectively removes speaker information. Based on this observation, we propose a speaker normalization step to improve acoustic unit discovery using K-means clustering of CPC features. Finally, we show that a language model trained on the resulting units achieves some of the best results in the ZeroSpeech 2021 Challenge.
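The pipeline described above (compare per-utterance means for speaker verification, standardize features to remove speaker information, then cluster with K-means) can be illustrated with a small dependency-free Python sketch. It is not the authors' implementation, and several details are assumptions: the toy data models speaker identity as a constant additive offset on 2-dimensional stand-ins for CPC vectors (the hypothetical `PHONES`, `SPEAKERS`, and `make_utterance`), means are compared with cosine similarity, standardization is done per utterance and per dimension, and K-means is plain Lloyd's algorithm with a fixed seed.

```python
import math
import random

# Sketch only: plain lists of floats stand in for CPC feature frames.
# Assumptions (not from the paper): cosine scoring of means,
# per-utterance standardization, and Lloyd's K-means.


def mean_vector(frames):
    """Per-dimension mean of a list of equal-length vectors."""
    n = len(frames)
    return [sum(col) / n for col in zip(*frames)]


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def speaker_score(utt_a, utt_b):
    """Speaker verification score: cosine similarity of per-utterance means."""
    return cosine(mean_vector(utt_a), mean_vector(utt_b))


def standardize(frames, eps=1e-8):
    """Zero mean and unit variance in every dimension, within one utterance."""
    mu = mean_vector(frames)
    n = len(frames)
    sd = [math.sqrt(sum((x - m) ** 2 for x in col) / n) + eps
          for col, m in zip(zip(*frames), mu)]
    return [[(x - m) / s for x, m, s in zip(f, mu, sd)] for f in frames]


def sq_dist(a, b):
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(p, centroids):
    """Index of the centroid closest to p."""
    return min(range(len(centroids)), key=lambda c: sq_dist(p, centroids[c]))


def kmeans(points, k, iters=20, seed=0):
    """Lloyd's K-means; returns (centroids, cluster label per point)."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        labels = [nearest(p, centroids) for p in points]  # assignment step
        for c in range(k):  # update step; an empty cluster keeps its old centroid
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = mean_vector(members)
    return centroids, [nearest(p, centroids) for p in points]


# Hypothetical toy data: frame = phone prototype + speaker offset + small noise.
PHONES = [[1.0, 0.0], [0.0, 1.0]]
SPEAKERS = {"A": [4.0, 4.0], "B": [-4.0, 4.0]}
rng = random.Random(1)


def make_utterance(speaker, n_frames=20):
    """Alternate the two toy phones; return (frames, phone label per frame)."""
    offset = SPEAKERS[speaker]
    frames, phones = [], []
    for t in range(n_frames):
        ph = t % 2
        frames.append([p + o + rng.gauss(0.0, 0.05) for p, o in zip(PHONES[ph], offset)])
        phones.append(ph)
    return frames, phones


a1, ph_a1 = make_utterance("A")
a2, _ = make_utterance("A")
b1, ph_b1 = make_utterance("B")

same = speaker_score(a1, a2)  # two utterances from speaker A
diff = speaker_score(a1, b1)  # speaker A vs speaker B
print(f"same speaker: {same:.3f}, different speakers: {diff:.3f}")

# Speaker normalization, then acoustic unit discovery on the pooled frames.
pooled = standardize(a1) + standardize(b1)
true_phones = ph_a1 + ph_b1
_, units = kmeans(pooled, k=2)
print("discovered units:", units)
```

On this toy data the same-speaker score is close to 1 while the cross-speaker score is much lower, and after standardization the two discovered units line up with the two phones. Clustering the raw frames instead would tend to split them by speaker, because the offset between speakers is much larger than the difference between phones; that is the situation the normalization step is meant to avoid.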
