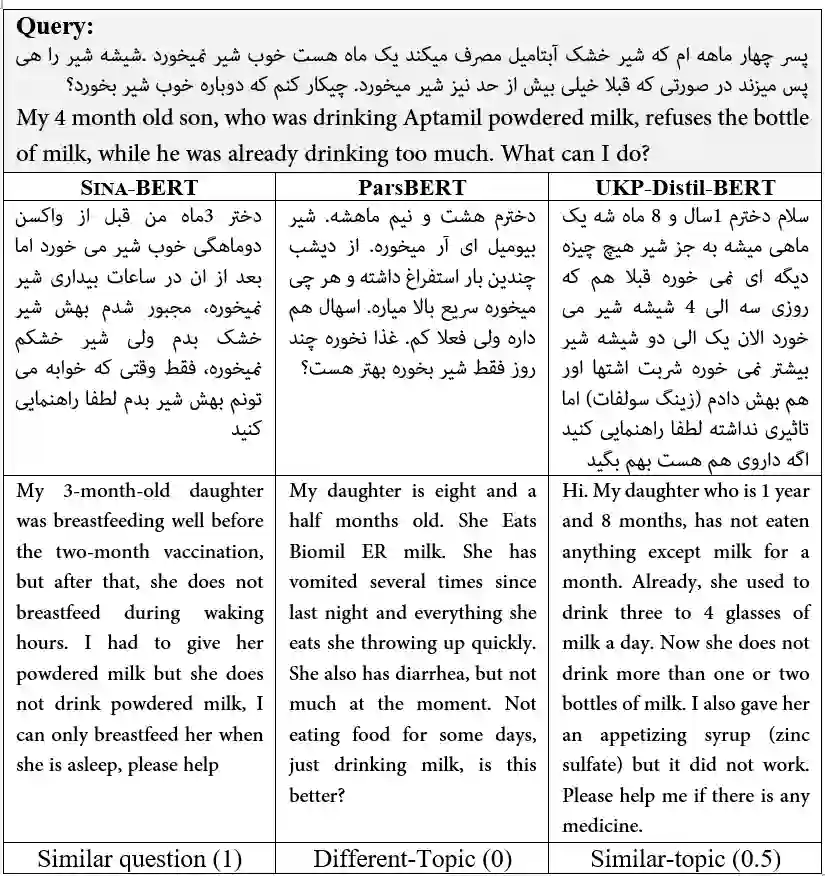

We have released SINA-BERT, a language model based on BERT (Devlin et al., 2018), to address the lack of a high-quality Persian language model for the medical domain. SINA-BERT is pre-trained on a large-scale corpus of medical content, including formal and informal texts collected from a variety of online resources, in order to improve performance on health-care-related tasks. We apply SINA-BERT to the following representative tasks: categorization of medical questions, medical sentiment analysis, and medical question retrieval. For each task, we have developed annotated Persian data sets for training and evaluation, and have learned a representation of the task data, in particular of long and complex medical questions. Using the same architecture across all tasks, SINA-BERT outperforms the BERT-based models previously available for Persian.
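As a rough illustration of the question retrieval task mentioned above, the sketch below ranks stored questions by cosine similarity between fixed-length vectors. It is not the authors' method: the abstract does not say how retrieval is scored, so cosine similarity is an assumption, the function names `cosine_similarity` and `retrieve` are hypothetical, and the three-dimensional vectors are toy values standing in for embeddings that a model such as SINA-BERT might produce.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_u * norm_v)


def retrieve(query_vec, indexed, top_k=2):
    """Return the top_k (score, text) pairs, most similar first.

    `indexed` is a list of (text, vector) pairs. In a real system the
    vectors would come from a sentence encoder; here they are toy values.
    """
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in indexed]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Toy stand-ins for question embeddings (assumed, not real model output).
indexed_questions = [
    ("question about headache", [0.9, 0.1, 0.0]),
    ("question about diabetes", [0.1, 0.9, 0.2]),
    ("question about migraine", [0.8, 0.2, 0.1]),
]
query = [1.0, 0.0, 0.0]

results = retrieve(query, indexed_questions, top_k=2)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

With these toy vectors the headache and migraine questions rank above the diabetes question, since their vectors point in nearly the same direction as the query.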
