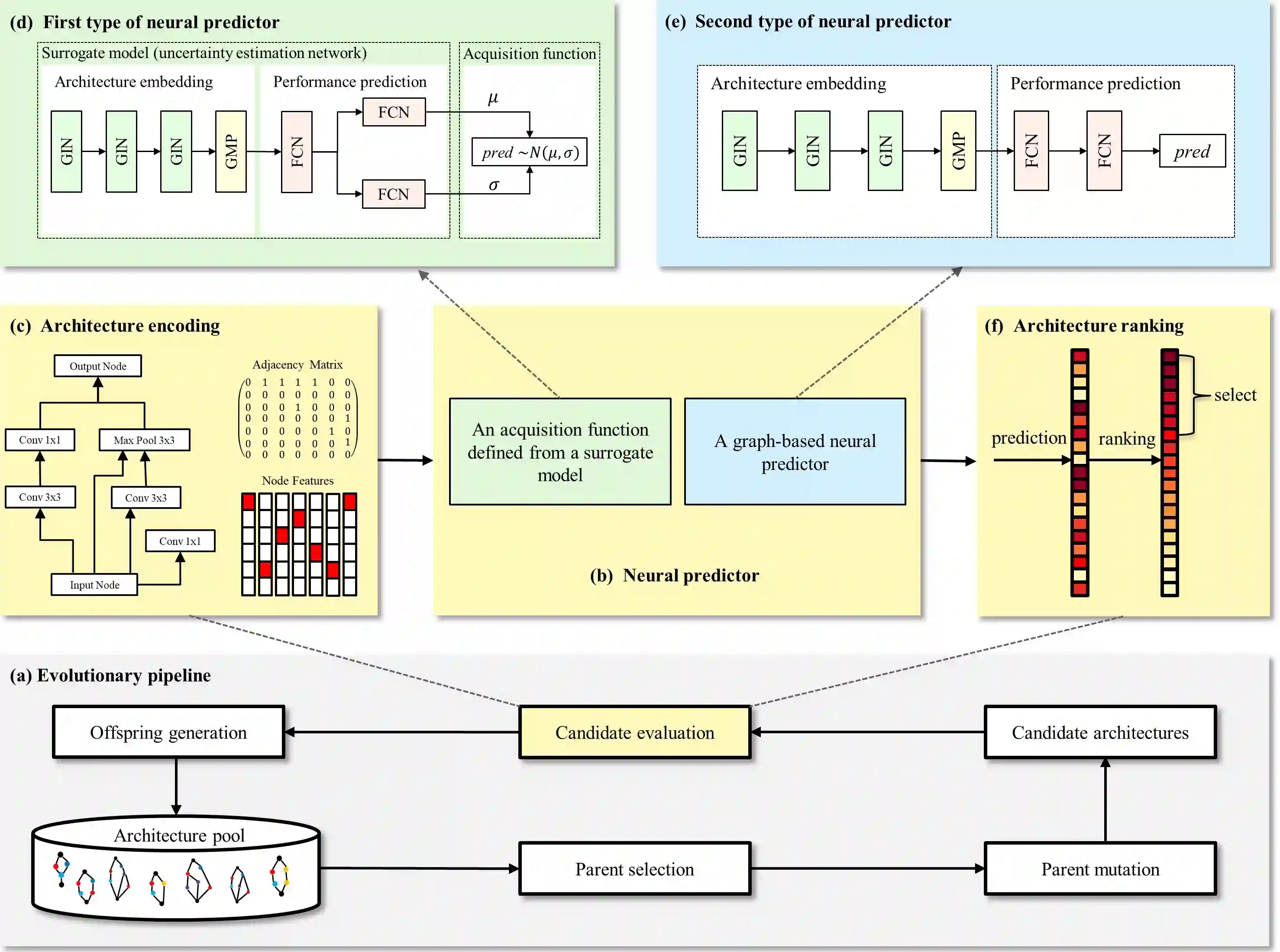

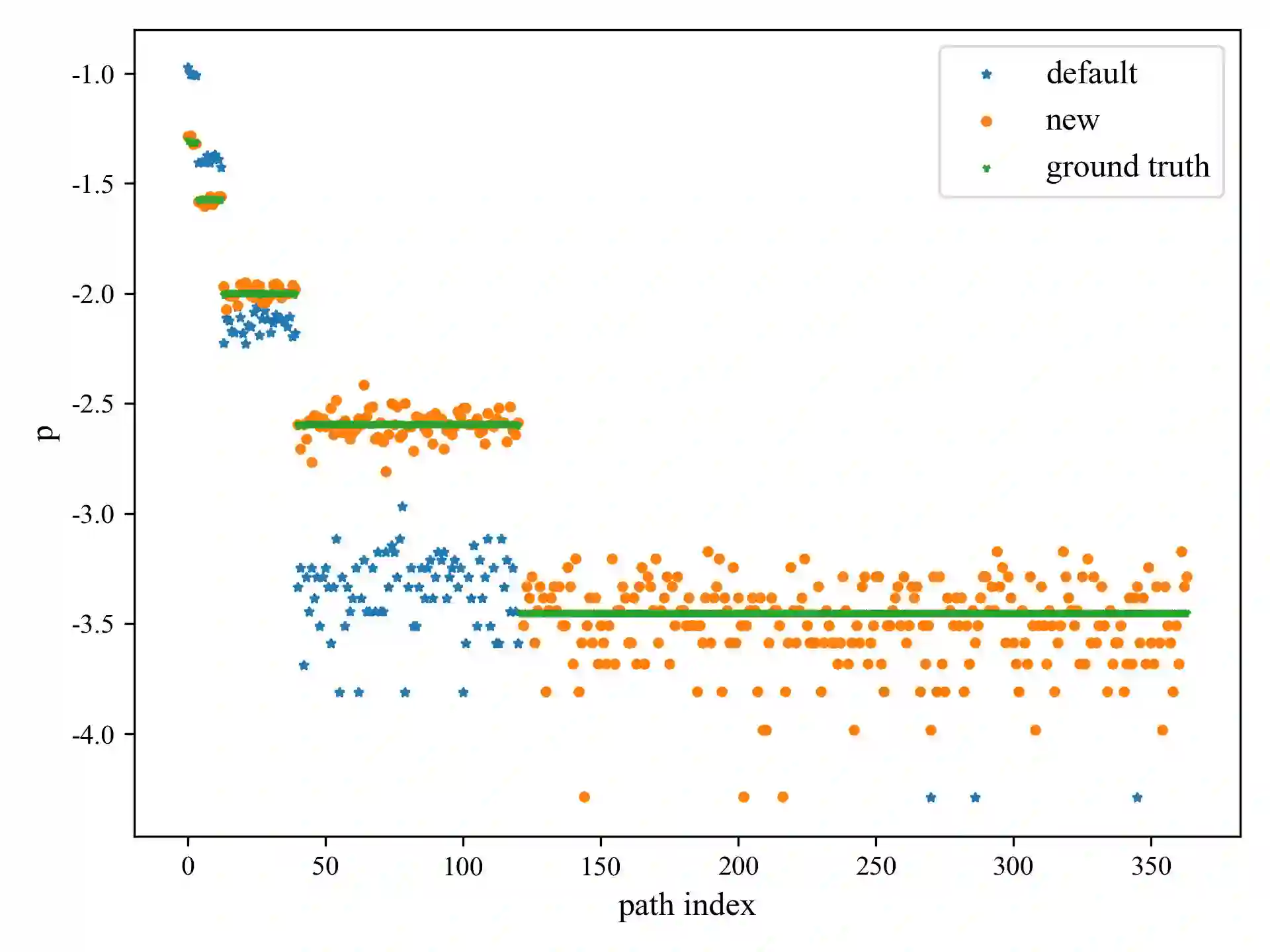

Neural architecture search (NAS) is a promising method for automatically designing neural architectures. NAS adopts a search strategy to explore a predefined search space and find a high-performing architecture at minimal search cost. Bayesian optimization and evolutionary algorithms (EA) are two commonly used search strategies, but they suffer from being computationally expensive, challenging to implement, or inefficient at exploration. In this paper, we propose a neural predictor guided evolutionary algorithm for NAS (NPENAS) that enhances the exploration ability of EA, and we design two kinds of neural predictors. The first predictor is derived from Bayesian optimization: we propose a graph-based uncertainty estimation network as a surrogate model that is easy to implement and computationally efficient. The second predictor is a graph-based neural network that directly outputs the performance prediction of the input neural architecture. NPENAS with these two predictors is denoted NPENAS-BO and NPENAS-NP, respectively. In addition, we introduce a new random architecture sampling method to overcome the drawbacks of the existing sampling method. Extensive experiments demonstrate the superiority of NPENAS. Quantitative results on three NAS search spaces indicate that both NPENAS-BO and NPENAS-NP outperform most existing NAS algorithms, with NPENAS-BO achieving state-of-the-art performance on NASBench-201 and NPENAS-NP achieving it on NASBench-101 and DARTS.
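The general idea of predictor-guided evolutionary search can be illustrated with a minimal sketch. This is not the NPENAS implementation: the 8-bit search space, the `true_error` function (a stand-in for training a network), the nearest-neighbour `predict_error` (a stand-in for the graph-based neural predictors), and all parameter names are hypothetical choices made only for illustration. The loop mutates the best architectures found so far, ranks the resulting candidates with the cheap predictor, and spends the expensive evaluation only on the top-ranked ones.

```python
import random

# Hypothetical toy search space: an "architecture" is a tuple of 8 bits.
TARGET = (1, 0, 1, 1, 0, 0, 1, 0)


def true_error(arch):
    """Stand-in for the expensive step (training and validating a network)."""
    return sum(a != t for a, t in zip(arch, TARGET)) / len(arch)


def hamming(a, b):
    return sum(x != y for x, y in zip(a, b))


def predict_error(arch, history):
    """Toy predictor: mean error of the 3 nearest evaluated architectures.

    Assumption: this replaces the paper's graph-based neural predictor.
    """
    nearest = sorted(history.items(), key=lambda kv: hamming(arch, kv[0]))[:3]
    return sum(err for _, err in nearest) / len(nearest)


def mutate(arch, rng):
    """Flip one randomly chosen bit."""
    i = rng.randrange(len(arch))
    return arch[:i] + (1 - arch[i],) + arch[i + 1:]


def search(budget=40, n_init=10, n_parents=5, n_children=10, top_k=5, seed=0):
    rng = random.Random(seed)
    history = {}  # architecture -> measured error

    # 1. Evaluate a small random initial population.
    while len(history) < n_init:
        arch = tuple(rng.randint(0, 1) for _ in range(len(TARGET)))
        history[arch] = true_error(arch)

    while len(history) < budget:
        # 2. Select the best architectures so far as parents.
        parents = sorted(history, key=history.get)[:n_parents]
        # 3. Generate several mutated candidates per parent.
        candidates = {mutate(p, rng) for p in parents for _ in range(n_children)}
        candidates -= set(history)
        if not candidates:
            break
        # 4. Rank candidates with the cheap predictor (ties broken by value).
        ranked = sorted(candidates, key=lambda a: (predict_error(a, history), a))
        # 5. Spend the expensive evaluation only on the top-ranked candidates.
        for arch in ranked[:min(top_k, budget - len(history))]:
            history[arch] = true_error(arch)

    best = min(history, key=history.get)
    return best, history[best], len(history)


if __name__ == "__main__":
    best, err, n_evaluated = search()
    print(best, err, n_evaluated)
```

Generating more candidates per parent than are evaluated is what lets the predictor steer exploration: under these assumptions, the quality of the search depends on how well the predictor's ranking agrees with the measured error.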
