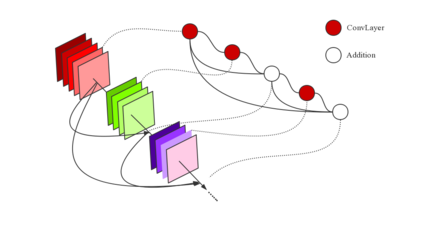

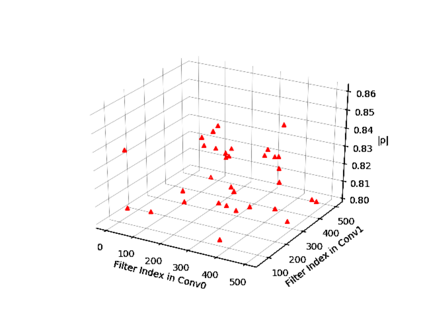

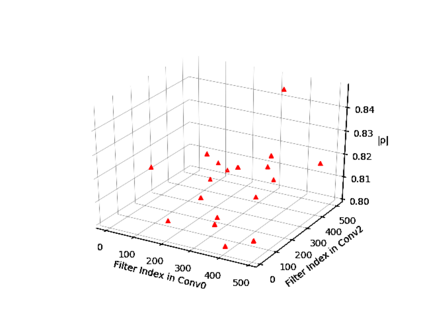

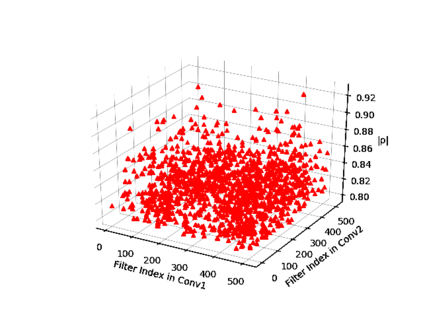

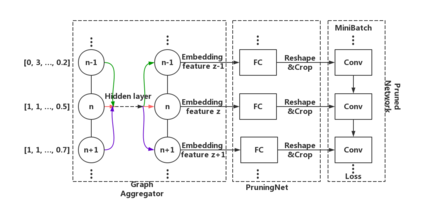

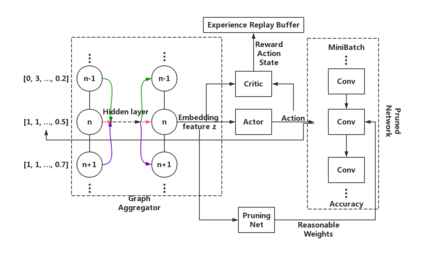

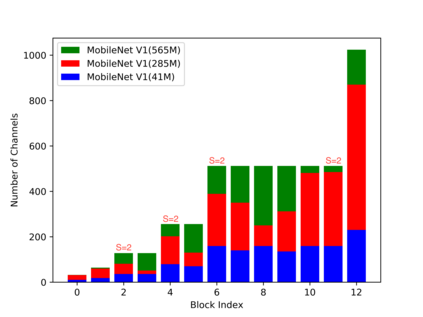

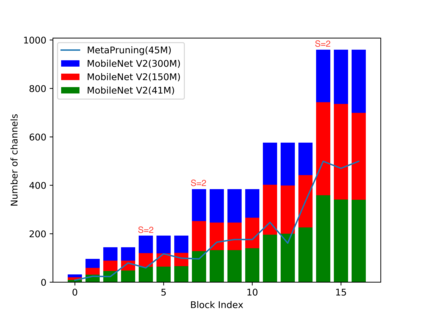

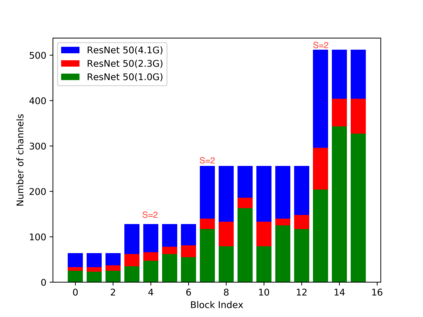

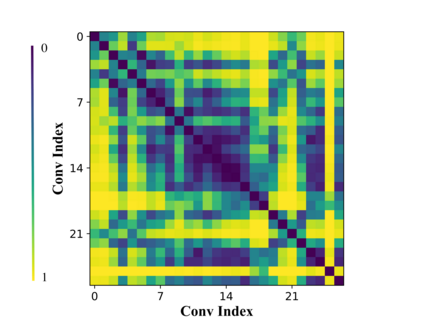

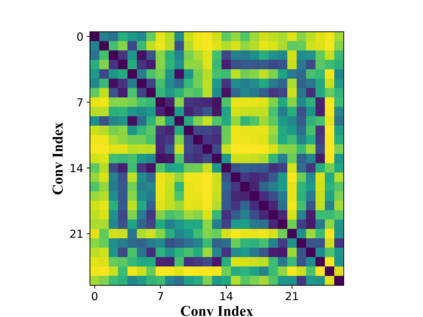

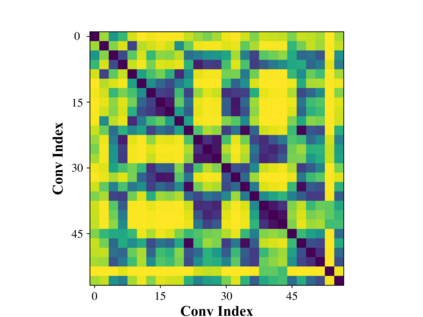

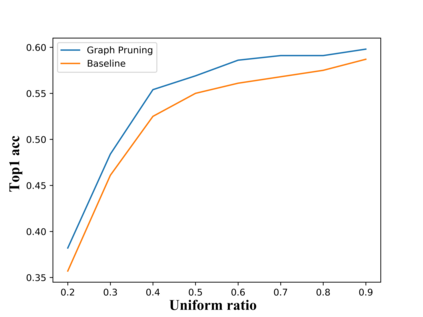

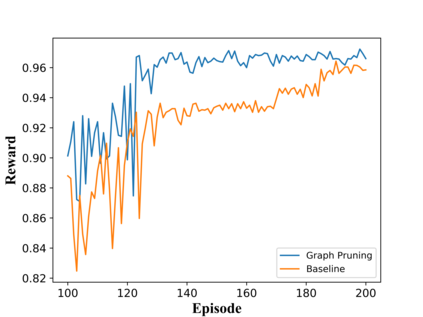

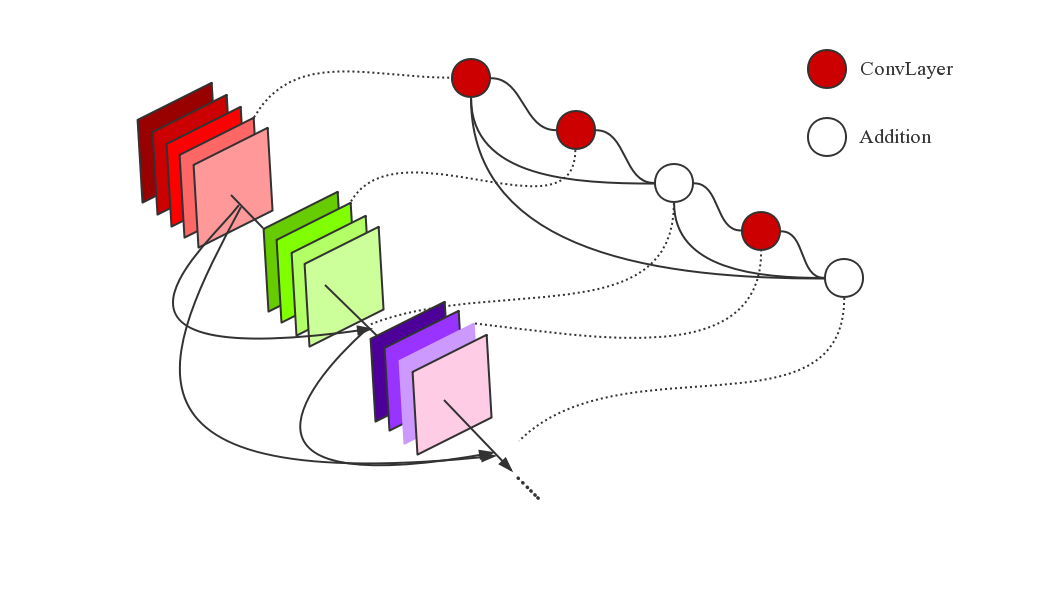

Previous AutoML pruning methods use the features of individual layers to prune filters automatically. We analyze the correlation between two layers from different blocks that have a shortcut structure. The analysis shows that, within a block, the deeper layer has many redundant filters that can be represented by filters in the earlier layer. It is therefore necessary to take information from other layers into account when pruning. In this paper, we propose a novel pruning method named GraphPruning. Any series of layers in the network is viewed as a graph. To automatically aggregate neighboring features for each node, we design a graph aggregator based on graph convolutional networks (GCNs). In the training stage, a PruningNet that takes the aggregated node features as input generates reasonable weights for sub-networks of any size. The best configuration of the pruned network is then searched for by reinforcement learning. Unlike previous work, we use the node features from a well-trained graph aggregator, rather than hand-crafted features, as the states in reinforcement learning. Compared with other AutoML pruning methods, our method achieves state-of-the-art results on ImageNet-2012 under the same conditions.
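The abstract describes the graph aggregator only at a high level. As a rough illustration, the sketch below uses the standard GCN propagation rule with symmetric normalization (Kipf and Welling), which is not necessarily the exact aggregator used in GraphPruning, to show how each layer-node's features are mixed with those of its neighbors, including a neighbor reached only through a shortcut edge. The graph, the two per-layer features, and the identity weight matrix are hypothetical placeholders; in a real aggregator the weights would be learned.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b (a is n x m, b is m x p)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(num_nodes: int, edges: List[Tuple[int, int]]) -> Matrix:
    """Symmetric GCN normalization D^{-1/2} (A + I) D^{-1/2} of an undirected graph."""
    # Start from the identity so every node keeps its own features (self-loops).
    adj = [[1.0 if i == j else 0.0 for j in range(num_nodes)] for i in range(num_nodes)]
    for u, v in edges:
        adj[u][v] = adj[v][u] = 1.0
    deg = [sum(row) for row in adj]  # degree including the self-loop
    return [[adj[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_nodes)]
            for i in range(num_nodes)]


def gcn_layer(a_hat: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One GCN propagation step: ReLU(A_hat @ H @ W)."""
    out = matmul(matmul(a_hat, h), w)
    return [[max(0.0, x) for x in row] for row in out]


if __name__ == "__main__":
    # Hypothetical 4-layer block: chain 0-1-2-3 plus a shortcut edge 0-2.
    edges = [(0, 1), (1, 2), (2, 3), (0, 2)]
    # Hypothetical per-layer features: [relative depth, channel ratio].
    features = [[0.0, 0.25], [0.33, 0.5], [0.67, 0.5], [1.0, 1.0]]
    # Placeholder weights; a trained aggregator would learn W instead.
    w = [[1.0, 0.0], [0.0, 1.0]]
    a_hat = normalized_adjacency(len(features), edges)
    for i, row in enumerate(gcn_layer(a_hat, features, w)):
        print(f"layer {i}: {[round(x, 3) for x in row]}")
```

Because of the shortcut edge, node 2's aggregated features include a direct contribution from node 0, which is the kind of cross-layer information the abstract argues a pruning policy should see. Stacking several such layers would widen each node's receptive field further along the network.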
